AI code editors and code generators produce more production code than ever before. They accelerate delivery, but much of that code contains security bugs that slip through rushed reviews. Organizations love faster builds, but they are trading them for an insidious threat that grows with scale: countless small bugs replicated from project to project and team to team. The result is an army of “junior” code contributions, polite on the surface and lethal underneath.

AI speeds up coding, but also spawns an army of hidden bugs. (Image Source: Cybersecurity Dive)

What’s New Today

Developers today treat generated code as a first draft: paste, polish, and ship. The tools suit drudge work, boilerplate, and prototyping. But security teams and researchers report that a large share of generated code contains security flaws that a human reviewer may miss or would rather leave for someone else to find. This is not speculation: industry studies point to persistent insecurity across hundreds of models and tasks.

Because these tools generate code in bulk, the effect resembles a wave of new programmers, an “army of juniors” whose individual contributions add up to a larger attack surface. Flawed patterns get amplified in the blink of an eye: one buggy snippet copied into scores of repos becomes a fountain of copy-pasted vulnerabilities. (arxiv)

Evidence: How Big Is The Problem?

Independent and academic studies report equally discouraging trends. A recent industry comparison of several modern code models on a suite of standard tests found that nearly half of the generated code introduced security flaws in typical development scenarios. The same survey identifies recurring failure modes (insecure input handling, weak cryptography, and susceptibility to injection flaws) that occur across models and programming languages.
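To make the injection failure mode concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. The table and function names (`users`, `find_user_unsafe`, `find_user_safe`) are hypothetical and chosen only for illustration. The first function builds SQL with string formatting, the kind of pattern the studies flag; the second passes user input as a bound parameter so the driver treats it as data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is spliced directly into the SQL text,
    # so a value like "x' OR '1'='1" rewrites the WHERE clause.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the "?" placeholder makes the driver bind the value as data.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    db = make_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(db, payload))  # returns every row
    print(find_user_safe(db, payload))    # returns no rows
```

Parameter binding closes this particular sink; it does not replace validating what values are allowed in the first place.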

Further empirical research evaluating code completions from top-performing assistants found that a non-trivial share of completions contained well-documented security issues. The experiments conclude that the problem is not specific to any one vendor or model class.

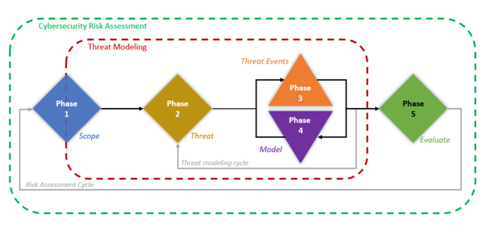

Policy experts warn that the threat is not a single isolated bug. They group the risks into three categories: models that produce insecure code, models that are tampered with or poisoned, and downstream feedback loops in which vulnerable generated code is used to train future models. Each channel propagates systemic risk differently.

How The Risk Unfolds In The Real World

Several specific behaviors turn model weaknesses into business incidents:

- Copy-and-paste with no threat modeling: Snippets are committed to repositories without context-aware verification. Code that is good enough for a toy example is not necessarily resilient to concurrent use, malicious inputs, or high-privilege execution.

- Over-dependence on tooling in place of capability: Assistants genuinely help new staff who “just need to know what to do.” But accepting an apprentice's rate of mistakes in exchange for speed underestimates the number and type of errors involved. Researchers have found that iterative re-prompting is more likely to compound issues than to fix them.

- Automated spreading: Generated snippets propagate into packages, forks, and other repositories. When a flawed or malicious pattern becomes a template, dozens of platforms share the same blind spot.

- Malicious exploitation: Malware authors experiment with code-generation systems to produce malicious code or to locate exploitable code. Platform vendors have observed attempts to coerce models into generating dangerous scripts or exploit code. That trend raises the stakes for access controls on models. (arxiv)
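The “good enough for a toy example” problem is easy to reproduce with file paths. The sketch below assumes a hypothetical upload directory and hypothetical helper names (`read_upload_unsafe`, `resolve_inside`); it shows one common mitigation for path traversal, not a complete file-access policy. The naive version joins user input onto a base directory; the safer version resolves the final path and rejects anything that lands outside the base (`Path.is_relative_to` needs Python 3.9+).

```python
from pathlib import Path


def read_upload_unsafe(base_dir: str, filename: str) -> bytes:
    # Toy-example pattern: fine for "report.txt", but "../../etc/passwd"
    # or an absolute path walks right out of base_dir.
    return (Path(base_dir) / filename).read_bytes()


def resolve_inside(base_dir: str, filename: str) -> Path:
    """Resolve filename under base_dir, rejecting paths that escape it."""
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows existing symlinks.
    target = (base / filename).resolve()
    if not target.is_relative_to(base):
        raise PermissionError(f"path escapes {base}: {filename!r}")
    return target


def read_upload_safe(base_dir: str, filename: str) -> bytes:
    return resolve_inside(base_dir, filename).read_bytes()
```

Because the check runs before the file is opened, a symlink swapped in afterwards could still race it; services running with high privileges usually add OS-level confinement on top.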

AI tools speed coding and spread bugs just as fast. (Image Source: Conquer your risk)

Who Gets Hit

The fallout includes both short-term costs and systemic weakening:

- Organizations. Reputation and time are spent repairing inherited fault lines.

- Dev teams. Cognitive overhead rises. Engineers must audit and fix more surface area than before while still meeting tight delivery schedules.

- Security teams. Analysts struggle with triage when a tsunami of fresh vulnerabilities shows up across multiple repositories, stacks, and languages.

- The broader ecosystem. Compromised libraries or services become downstream supply-chain attack surfaces that harm consumers and users.

The Attack Surface Is Evolving

Generated code creates two dimensions of risk:

- More code, faster. Even if the share of insecure output stays fixed, the absolute number of flaws climbs with volume.

- Repeated patterns. A flawed pattern that models keep producing becomes the same vulnerability in otherwise unrelated projects, so attackers can commoditize repeatable, predictable attacks in bulk.

Industry research supports both effects: models repeat similar mistakes, copied code spreads them, and systems are exposed as a result.

New Industry Responses

Organizations are responding on several fronts, aligning engineering, process, and governance.

- Security pipelines. Companies add deeper static analysis, dependency scanning, and runtime protection to CI/CD pipelines, and make a passing scan a prerequisite for merge. This reduces risk but does not catch semantic bugs that static tools cannot find.

- Human-in-the-loop controls. Companies require any code destined for production to be approved by a senior reviewer before it ships. This is slower but enforces stricter standards on what ships.

- Model governance. Companies define internal policies that specify which tools are permitted and which use cases they support. They also monitor tool telemetry for untrusted output.

- Trust boundaries. Teams give generated code less trust than code their own developers wrote: they place it in a staging environment first, flag it for further testing, and add extra runtime checks.
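A scan-before-merge gate can be as simple as a script that reads the scanner's report and fails the CI job. The sketch below assumes the scanner emits SARIF 2.1.0, a JSON report format many static analyzers support; the function names and the `fail_at` threshold are hypothetical choices, not part of any particular CI product.

```python
import json
import sys

# SARIF result levels, ordered by severity.
LEVEL_RANK = {"none": 0, "note": 1, "warning": 2, "error": 3}


def blocking_findings(sarif: dict, fail_at: str = "error") -> list[str]:
    """Return 'ruleId: message' strings for results at or above fail_at."""
    threshold = LEVEL_RANK[fail_at]
    blocking = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # Simplification: SARIF can derive a missing level from the
            # rule's configuration; here a missing level counts as "warning".
            level = result.get("level", "warning")
            if LEVEL_RANK.get(level, LEVEL_RANK["warning"]) >= threshold:
                rule = result.get("ruleId", "<no rule>")
                text = result.get("message", {}).get("text", "")
                blocking.append(f"{rule}: {text}")
    return blocking


def main(path: str) -> int:
    """Print blocking findings and return a CI-friendly exit code."""
    with open(path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for line in findings:
        print(f"BLOCKING {line}", file=sys.stderr)
    # A non-zero exit code fails the CI job, which blocks the merge.
    return 1 if findings else 0
```

In a CI step this would run as something like `sys.exit(main("results.sarif"))`, with the path pointing at whatever report your scanner writes. The gate only sees what the scanner reports, so it inherits the scanner's blind spots for semantic bugs.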

Each approach trades cost and friction for lower risk. Every organization faces the same trade-off: should managers invest in resilience or in velocity?

the economic reality is uglier than anyone’s saying:

AI tools are already doing junior developer work. boilerplate generation, documentation, test cases.

the paper documents this across multiple studies.

which means the job market isn’t “adapting”… it’s bifurcating.… pic.twitter.com/zlZmnH8jSG

— Alex Prompter (@alex_prompter) October 26, 2025

A Cautionary Example

Suppose a product team wants to ship a payment feature. A developer outsources the payment-callback boilerplate and input sanitization to a freelancer. The snippet looks adequate, passes linting, and local tests pass. However, it escapes input poorly, and its default error-handling path logs sensitive tokens. The feature ships. Weeks after release, attackers exploit a session-fixation weakness and steal keys.

Now imagine the same pattern cloned into five other microservices. Time-to-exploit collapses, because the attacker needs only one working exploit to attack multiple services. This is not an overhyped threat; incident post-mortems increasingly reveal that tiny implementation details, once cloned, cause havoc at scale.
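One backstop against a token-logging error path like the one in this example is a logging filter that masks secrets before records are written. The sketch below uses Python's standard `logging` module; the regex patterns, the logger name `payments.callback`, and the helper names are hypothetical and would need to match your real token formats.

```python
import logging
import re

# Hypothetical patterns for secrets that must never reach logs; adapt them
# to the token formats your services actually use.
SECRET_PATTERNS = [
    re.compile(r"(?i)(authorization:\s*bearer\s+)[A-Za-z0-9._~+/=-]+"),
    re.compile(r"(?i)((?:api_key|token|secret)=)[^&\s]+"),
]


class RedactSecrets(logging.Filter):
    """Mask token-like substrings in each record's message."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg with its %-style args
        for pattern in SECRET_PATTERNS:
            message = pattern.sub(r"\1[REDACTED]", message)
        # Store the redacted text so handlers format the sanitized version.
        record.msg, record.args = message, ()
        return True  # keep the record; only its content changes


def build_callback_logger(stream) -> logging.Logger:
    """Attach the filter to the handler so every record it emits is redacted."""
    handler = logging.StreamHandler(stream)
    handler.addFilter(RedactSecrets())
    logger = logging.getLogger("payments.callback")  # hypothetical name
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

Redaction is a safety net, not a fix: the error handler should not log tokens in the first place. The filter also only sees the formatted message; traceback text added via `exc_info` is rendered later by the formatter and is not redacted here.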

Short-Term Fixes All Teams Should Apply

- Treat generated code as untrusted. Apply threat modeling and code review to all generated code that touches high-risk operations.

- Bake security scans into CI and require remediation before merge. Static tools will catch many common errors, but not all.

- Implement a comprehensive policy defining acceptable use of assistants, and share best-practice prompts that make security constraints explicit.

- Don’t let unknown code slip in unnoticed. Trace where code originates: which commits or PRs introduced it and who approved them.

- Invest in training developers properly instead of waiting for tooling to rescue you.
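As a rough illustration of a static check wired into CI, the sketch below uses Python's `ast` module to flag `execute()`-style calls whose SQL argument is built with an f-string, `+`, `%`, or `.format()`. The rule and its sink list (`SQL_SINKS`) are hypothetical; dedicated scanners implement far more thorough versions. It also shows the limit of static checks: SQL assembled in a variable first and passed in later slips through, which is the gap data-flow analysis targets.

```python
import ast

# Hypothetical rule: method names treated as SQL sinks.
SQL_SINKS = {"execute", "executemany", "executescript"}


def _is_dynamic_string(node: ast.AST) -> bool:
    """True if node builds a string from runtime values."""
    if isinstance(node, ast.JoinedStr):  # f"...{x}..."
        return any(isinstance(v, ast.FormattedValue) for v in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True  # "..." + x   or   "..." % x
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    ):
        return True  # "...".format(x)
    return False


def find_string_built_sql(source: str, filename: str = "<generated>") -> list[str]:
    """Return 'file:line' locations of sink calls whose first arg is dynamic."""
    hits = []
    for node in ast.walk(ast.parse(source, filename)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in SQL_SINKS
            and node.args
            and _is_dynamic_string(node.args[0])
        ):
            hits.append(f"{filename}:{node.lineno}")
    return hits
```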

These measures reduce risk but never eliminate it. They buy time to develop better detection and governance.

Trust no AI code: scan, review, train, repeat (Image Source: Medium)

Training Case Studies

Tiny Failure, Large Ripple

A mid-sized payments firm uses a generated helper to sanitize input across a pipeline of microservices. The sample code assumes UTF-8 and strips only a subset of control characters, so it breaks on edge-case encodings used by some international partners. An attacker crafts payloads that evade sanitization and hit downstream parsers. The cost: a rollback, fines for violating data-handling rules, and a few months of repair work. The moral: a single code assumption becomes systemic when code moves fast.
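The failure in this case came from assuming UTF-8 and removing only a hand-picked subset of control characters. A minimal sketch of a stricter approach, using only Python's standard `unicodedata` module, is below. The function name and the choice to drop every Unicode “Other” (C*) character are assumptions for illustration, and the correct input encoding has to come from each partner's declared charset.

```python
import unicodedata


def sanitize_text(raw: bytes, encoding: str = "utf-8") -> str:
    """Decode strictly, normalize, and drop every Unicode 'Other' (C*) char.

    Hypothetical helper. Unlike a sanitizer that assumes UTF-8 and strips
    a hand-picked subset of control characters, it:
    - raises UnicodeDecodeError on bytes invalid in the declared encoding,
    - applies NFKC so compatibility forms (e.g. fullwidth letters) collapse
      to their plain equivalents before later checks, and
    - removes categories Cc (controls), Cf (format chars such as U+202E),
      Cs, Co and Cn, while keeping ordinary spaces.
    """
    text = raw.decode(encoding, errors="strict")
    text = unicodedata.normalize("NFKC", text)
    return "".join(
        ch for ch in text if not unicodedata.category(ch).startswith("C")
    )
```

Dropping all C* characters also removes newlines and tabs, which suits single-line fields such as names or IDs but not free text.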

Tooling Fatigue At Scale

A cloud vendor rolls out code-completion capability to scores of engineers. Early adoption raises sprint throughput, but dependency drift and calls to legacy functionality rise along with it. The firm tightened its CI gates, but programmers ran into friction. The solution: the vendor staged the rollout of generation capabilities and paired it with developer clinics on safe patterns. Moral: governance must be pragmatic and reinforced with training, not principles alone.

A Supply-Chain Cascade

An open-source library contains code contributed through code assistants, and many downstream packages depend on it. An attacker discovers a copied, mis-escaped pattern and develops an exploit that affects many projects. Remediation requires coordinated disclosure and cross-repository patching. Moral: open-source ecosystems amplify risk and require central leadership and fast coordination.
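The case study does not say which escaping mistake was copied. As a hypothetical example of a “mis-escaped pattern”, the sketch below contrasts a hand-rolled escape that only handles angle brackets with Python's standard `html.escape`, in an HTML attribute context. The function names are illustrative.

```python
import html


def render_name_naive(name: str) -> str:
    # Copied pattern: escapes angle brackets but not quotes or "&".
    # It looks fine in text nodes but breaks out of an attribute value.
    safe = name.replace("<", "&lt;").replace(">", "&gt;")
    return f'<input value="{safe}">'


def render_name(name: str) -> str:
    # html.escape with quote=True also escapes &, " and '.
    return f'<input value="{html.escape(name, quote=True)}">'
```

Because the naive helper looks correct until someone tests it with a quote character, it is exactly the kind of snippet that can be copied into many projects unnoticed.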

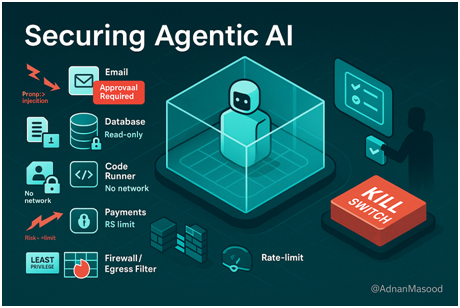

Governance Frameworks That Work

- Use A Multi-Level Model Of Trust: Not all code is created equal. Categorize code into three levels: experimental (sandboxed, no prod access), trusted (senior-reviewed), and critical (expert-written or senior-reviewed only). Map repository and CI policy to these levels so that enforcement is mechanical and unambiguous.

- Enforce Accountability And Provenance: Log provenance for each generated snippet: tool, prompt, who approved it, and when. Store that metadata alongside commits and make it queryable. When an issue arises, provenance speeds up triage and attribution. Accountability also deters lazy copy-and-paste.

- Have a Security Charter For Deploying Models: Organisations benefit from an explicit charter stating permitted use cases, prohibited generation classes (auth, cryptography, or bare-metal infrastructure scripts), permitted prompts, and sign-offs. Publish the charter across the organisation and re-certify it every quarter.

- Invest In Human Capital: Invest in developer upskilling, not just tooling. Host secure-coding bootcamps that cover the failure modes introduced by generated code. Pair junior developers with security champions for high-severity merges.

- Establish A Cross-Functional Review Board: For borderline-sensitive projects, request a rapid review by an ad hoc team drawn from security, privacy, and product. Give the board veto or call-back authority to require mitigation. Keep it lightweight so it does not become a bottleneck.
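Mechanical tier enforcement can be sketched as a path-to-tier lookup plus an approval rule checked in CI. Everything here is a hypothetical configuration: the glob patterns, the default tier for unmatched paths, and the number of senior approvals each tier requires are assumptions, not part of the framework described above.

```python
from fnmatch import fnmatchcase

# Hypothetical tier map: the first matching glob wins, so the most
# sensitive patterns come first. Note that "*" also matches "/".
TIER_RULES = [
    ("src/auth/*", "critical"),
    ("src/crypto/*", "critical"),
    ("infra/*", "critical"),
    ("sandbox/*", "experimental"),
    ("src/*", "trusted"),
]

# Hypothetical approval counts per tier.
REQUIRED_APPROVALS = {"experimental": 0, "trusted": 1, "critical": 2}


def tier_for(path: str) -> str:
    for pattern, tier in TIER_RULES:
        if fnmatchcase(path, pattern):
            return tier
    return "trusted"  # default: unknown paths still need a review


def merge_allowed(changed_paths: list[str], senior_approvals: int) -> bool:
    """Allow a merge only if approvals meet the strictest tier touched."""
    needed = max(
        (REQUIRED_APPROVALS[tier_for(p)] for p in changed_paths), default=0
    )
    return senior_approvals >= needed
```

Taking the maximum over all changed files means a pull request that touches even one critical path is held to the critical standard.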

Technical Controls That Scale

- Shift Left With Smart Checks. Move safety tests earlier in the build cycle. Use linters configured to warn on antipatterns common in generated code, and run lightweight runtime assertion tests in staging that fail early. Auto-fix mechanical problems (e.g., formatting, calls to removed functions), but do not apply auto-fixes that could hide intent.

- Behavioural Baselining And Anomaly Detection. Run human-written code in managed environments to capture behaviour baselines (file-system activity, network usage, CPU and memory consumption), then use anomaly detection to alert when code running in production strays from those norms.

- Semantic Analysis, Not Just Syntax. Static analysis is effective at detecting shallow bugs. Combine it with semantic code analysis that reasons about data flows, taint tracking, and privilege boundaries. These approaches reduce false negatives on the logic and escaping faults common in generated code.

- Provenance-Aware Package Managers. Have package managers and dependency scanners report why a module was written, who wrote it, and whether it contains generated code. Flag packages with significant generated content as higher risk during supply-chain scans.

- Runtime Protection And Micro-Sandboxes. Give untested code runtime protection: least-privilege execution, timeouts, circuit breakers, and hard resource limits. Where appropriate, run newly generated functions inside micro-sandboxes until they are proven safe to run at scale.

- Dual-Sign Releases For Sensitive Flows. For highly sensitive code, require two cryptographic signatures: one from the developer and one from an approved security reviewer. The pipeline verifies both signatures before deploying to high-risk environments. (ntsc)
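The runtime-protection idea can be illustrated with a minimal, POSIX-only Python sketch that runs an untrusted snippet in a separate interpreter with a wall-clock timeout plus CPU and memory caps, using the standard `subprocess` and `resource` modules. The function names and default limits are hypothetical. This is resource limiting, not a full micro-sandbox: the child can still read files and open network connections, which real sandboxes block with containers, namespaces, or seccomp profiles.

```python
import resource
import subprocess
import sys


def _apply_limits(cpu_seconds: int, mem_bytes: int) -> None:
    """Runs in the child process just before exec (POSIX only)."""
    resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))
    try:
        resource.setrlimit(resource.RLIMIT_AS, (mem_bytes, mem_bytes))
    except (ValueError, OSError):
        pass  # some platforms do not support an address-space cap


def run_untrusted(
    code: str,
    timeout_s: float = 5.0,
    cpu_seconds: int = 2,
    mem_bytes: int = 512 * 1024 * 1024,
) -> subprocess.CompletedProcess:
    """Run a Python snippet in a separate, resource-capped interpreter.

    "-I" (isolated mode) ignores PYTHON* environment variables and the
    user site-packages directory. If the wall-clock timeout is hit,
    subprocess.run kills the child and raises subprocess.TimeoutExpired.
    """
    return subprocess.run(
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout_s,
        preexec_fn=lambda: _apply_limits(cpu_seconds, mem_bytes),
    )
```

The Python documentation warns that `preexec_fn` is not safe in multi-threaded parent processes, so a production runner would more likely delegate to a container runtime or a dedicated sandbox service.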

Test early, track behaviour, and sandbox risky code. (Image Source: Automotive Testing Technology International)

Policy, Compliance And Regulation

- Regulatory Focus Sharpens. Regulators are expected to target systemic risk, consumer harm, and supply chains. Anticipate disclosure obligations covering the use of automated development techniques, secure-by-design requirements, and incident-reporting controls for developer-assistant use. Organisations should prepare now by documenting their governance and technical controls.

- Liability And Due Diligence. Boards and legal teams need to consider whether code-generation tooling changes liability. Due diligence includes training records, documented policy, secure CI/CD controls, and incident response plans that cover generated artifacts.

- Industry Standards And Collaborations. Industry consortia will advance standards for secure model-assisted development. Get involved early: participating in standard-setting shapes expectations and can lead to interoperable tooling that simplifies compliance.

- Auditability And Evidence Trails. When auditors and regulators come knocking, they will want to see audit trails. Preserve artifacts: prompts, model release, review comments, scan results, and release approvals. Retention policies should cover audit windows by default.
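One way to make such an evidence trail tamper-evident is a hash-chained log, in which each entry's SHA-256 hash covers the previous entry's hash. The sketch below uses only the Python standard library; the `ProvenanceRecord` fields mirror the artifacts listed above, but the field names and the in-memory list standing in for durable storage are assumptions.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class ProvenanceRecord:
    # Hypothetical field names mirroring the artifacts to preserve.
    commit: str
    tool: str
    model_version: str
    prompt: str
    snippet_sha256: str
    reviewer: str
    scan_result: str
    approved_at: str  # ISO 8601 timestamp


def _digest(body: dict) -> str:
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append(log: list[dict], record: ProvenanceRecord) -> dict:
    """Append an entry whose hash covers its record and the previous hash."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"record": asdict(record), "prev": prev}
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited entry fails unless all later hashes are redone."""
    prev = GENESIS
    for entry in log:
        body = {"record": entry["record"], "prev": entry["prev"]}
        if entry["prev"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True
```

A hash chain only proves integrity relative to a trusted copy of its latest hash, so that value would typically be kept somewhere the log's writers cannot modify. Prompts may contain sensitive data and should be redacted before they are recorded.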

Businesses face significant risks deploying AI in a fragmented regulatory environment, leading to challenges like data privacy breaches and algorithmic bias. Implementing automated AI governance platforms offers a solution by continuously monitoring compliance and creating… pic.twitter.com/fP6NkW1NTX

— Robert Johnston (@AdluminCEO) October 22, 2025

Changes In Culture That Last

- Incentivize Safety-First Culture. Celebrate catch-and-fix stories as resilience wins, not productivity losses. Make clear that delaying a release for a security patch is a badge of maturity.

- Reward Safe Authorship. Recognize teams and engineers who deliver both securely and quickly with career rewards and public recognition. Make secure coding a career path.

- Democratise Knowledge. Run a rotating security “on-call” in which a security engineer guides feature teams through high-speed sprints. That spreads knowledge and reduces blind trust in tooling.

Executive Playbook

- What Boards Must Insist On. Boards should be asking: What percentage of our code is copy-pasted? What guards high-risk areas? How quickly can we triage and remediate a copy-pasted vulnerability? The answers should inform investment in tooling and expertise.

- Invest In Measurable KPIs. Track metrics such as generated-code coverage, review latency, vulnerability density by source, mean time to remediate, and the rate of incidents caused by generated snippets. Use these KPIs to inform resourcing and policy.

- Resilience Budget. Allocate a dedicated budget to model-governance tooling, deep scanning, and training, and treat it as insurance for the company's digital backbone.
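Two of the KPIs above, mean time to remediate and vulnerability density by source, reduce to simple arithmetic over finding records. The sketch below assumes a hypothetical `Finding` record labeled with its code source; real numbers would come from your issue tracker and scanners.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional


@dataclass
class Finding:
    source: str                        # hypothetical labels: "generated", "human"
    opened: datetime
    closed: Optional[datetime] = None  # None while still open


def mean_time_to_remediate_hours(findings: list[Finding]) -> Optional[float]:
    """Average open-to-close time in hours, over closed findings only."""
    durations = [
        (f.closed - f.opened).total_seconds() / 3600
        for f in findings
        if f.closed is not None
    ]
    return mean(durations) if durations else None


def density_per_kloc(
    findings: list[Finding], loc_by_source: dict[str, int]
) -> dict[str, float]:
    """Findings per 1,000 lines of code, split by code source."""
    counts: dict[str, int] = {}
    for f in findings:
        counts[f.source] = counts.get(f.source, 0) + 1
    return {
        src: counts.get(src, 0) / (loc / 1000)
        for src, loc in loc_by_source.items()
        if loc > 0
    }
```

Excluding still-open findings from the average makes MTTR look better than reality, so it is worth reporting the number of open findings alongside it.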


Final Practical Checklist

- Write a short, sensitivity-based code policy and apply it by tier.

- Save provenance for every generated snippet.

- Enforce CI gates with semantic and behavioral verification.

- Quarantine newly generated code in sandboxes until it has been checked.

- Require senior reviewer approval for bulk changes.

- Expose engineers to safe patterns that address common generation failure modes.

- Monitor KPIs and report monthly to executives.

- Prepare legal and incident playbooks that cover generated artifacts.

Closing Thought

Automated code generation changes how code is produced, not what secure design requires. High-speed output without commensurate checks compounds risk. Organisations that capture the speed dividend by investing in people, tooling, and governance will turn a potential crisis into a competitive advantage. Others will face a terrifying attack surface to remediate at crippling cost.

Frequently Asked Questions

- Q: Can we ban code generators entirely?

A: You can, but you give up near-term productivity gains, and bans tend to push tool usage underground. A better alternative: managed adoption with transparent guardrails and review requirements.

- Q: How do we separate benign from risky generated code?

A: Look at context. UI markup snippets or test stubs are low risk. Anything that touches auth, crypto, data sanitization, the file system, or external calls needs rigorous, deny-by-default scrutiny.

- Q: Is scanning enough for security?

A: No. Scanners detect only known patterns; they cannot see semantic, logic, and context bugs. Complement scanning with provenance tracking, runtime guards, and human audit.

- Q: Should we log prompts and results?

A: Yes. Logging prompts, model versions, and outputs creates a forensic record for incident response and auditing. Redact sensitive data and comply with privacy law.

- Q: What about generated code in open-source dependencies?

A: Treat it as amplified risk. Score dependencies on contributor trends and code provenance. Backport patches, or maintain an internal fork of critical libraries.

- Q: How do we handle an incidentally discovered vulnerability that affects many services?

A: Prioritize by exploitability and data exposure. First roll out mitigations that shrink the attack surface (feature flags, extra runtime checks), then ship code fixes. Be transparent with customers and downstream consumers.

- Q: Will future model improvements fix these issues?

A: They will help, but the risk shifts rather than disappears. Even near-optimal generation can produce context-mismatch bugs or be manipulated through prompt engineering. Human judgment and governance are still needed.

- Q: Is every generated code snippet risky?

A: No. Most output works and is safe in simple scenarios. The danger lies in relying on it for security without analysis. High-risk code (auth, crypto, input processing) must be vetted by professionals.

- Q: Do larger models produce more secure code?

A: No. Recent studies have found security performance to be largely independent of model size; risk depends on training data, prompting, and testing practices.

- Q: Will automated scanners solve these problems?

A: Partly. Static analysis, dependency analysis, and fuzzing catch a lot, but semantic bugs, logic bugs, and error-handling flaws remain human territory.