A New Era for AI Acceleration

A look ahead to 2025: The competition to develop more intelligent and much faster and more powerful models is no longer a contest centered on research and development involving algorithms and big data. It is now all about chips and servers for artificial intelligence. As recent announcements from leading companies confirm, there is now a 10x boost being realized with the next-generation chips and servers for artificial intelligence.

These trends do not represent continued incremental progress. Instead, this is a paradigm shift with regard to the structure and function of AI: from laboratory-scale models to rapid deployment at speed and at scale.

In this article, we’ll delve into what is driving this shift and examine the people and entities behind the development and functionality of this hardware and what this means for developers and business leaders alike. (deloitte)

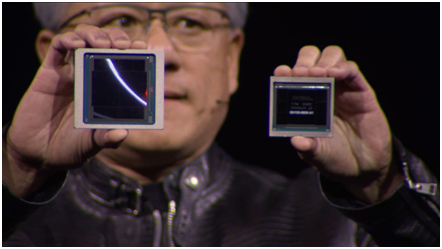

Next-gen AI chips are driving a 10× boost, taking models from lab to real world. (Image Source: Latest Tech News, AI & Gadgets 2025)

Why Newer Hardware is More Important than Ever

For several years now, the backbone of computing for artificial intelligence has been the GPU: the graphics processing unit that was designed specifically for gaming and graphics. The GPU is so well adapted for computing because it can process multiple operations simultaneously and is much more suited for this work than traditional computing chips.

However, the year 2025 is witnessing a pace of innovation for hardware that is unlocking these frontiers. Chips are now being developed for artificial intelligence tasks with the inclusion of high-bandwidth memory and interconnects that facilitate the streaming of data from multiple chips. The effect: speed and efficiency improvements that were orders of magnitude higher than was possible before.

Who’s Leading the Charge?

NVIDIA is still a giant. Its newly released “Blackwell” architecture, particularly for server-class graphics cards, provides a boost of up to 5x more FP4 throughput than previous solutions and offers inferencing throughput for large models that is 30x faster than previous solutions. (developer.nvidia)

However, NVIDIA is not alone in this. Others, such as AMD and Intel, release highly advanced accelerators with substantially larger amounts of memory bandwidth. This allows for efficient operations with billions (and even hundreds of billions) of parameters.

On the other hand, software and hardware co-design is on the rise systems that do concurrent optimisation on both software and hardware to maximise possible speed benefits with balanced power and cost.

NVIDIA Blackwell leads, with AMD, Intel, and co-designed systems boosting AI performance. (Image Source: Tom’s Hardware)

Real-World Impact: What 10x Faster Means in Practice

From Data Centers to Consumer Devices

It wasn’t all that long ago that highly advanced AI models such as large language models (LLMs) were forced to run on behemoth servers within state-of-the-art data centers. This is no longer the case thanks to next-gen chips and server design.

Enterprises can now run highly complex models with lower latency. Startups and enterprises that could not afford to implement AI solutions can now take advantage of superior models at an attractive cost. Applications ranging from voice recognition to real-time translations and from recommendation systems to creativity can be implemented in real time.

The Evolution from LLMs to AI Agents in 6 Simple Phases

Most people don’t understand how basic LLMs become autonomous AI agents.

Here’s the exact progression path:

Phase 1: Basic LLM

– Input → LLM → Output

– Just text processing within context windows.Phase 2: Document… pic.twitter.com/xWAvkwmHn9

— Python Developer (@Python_Dv) December 7, 2025

In summary: AI is now advancing from “lab experiment” to “on-the-ground reality.”

● Lower Costs

More computational power and efficient use of memory and power allow advanced AI hardware to lower the expense of queries and tasks. This means that advanced AI is more available for small players and does not merely pertain to tech giants with huge wallets.

● Higher Efficiency

With reduced energy per computation and the aggregation of computing power on a chip, such chips offer solutions to the escalating costs associated with the environment and infrastructure, which have remained a significant issue with the adoption of AI on a larger scale.

● More Sustainability

Greater efficiency and lower energy use per operation contribute to reducing the environmental footprint of large-scale AI deployments, addressing one of the persistent criticisms of AI infrastructure growth.

The Bigger Picture: The Race Has Shifted as We Enter 2025

Supply, Prices And Rivalry: More than Just Performance Criteria

In recent years, the demand for AI compute has skyrocketed. Large companies scaled their infrastructure capacity very aggressively.

Now, we’re facing the pressure: increased power demand and a scalability crunch. The only solution is more energy-efficient and more powerful and more scalable computing hardware.

2025 represents a point at which the growth rate is dominated by the advancements made by hardware and this isn’t just a function of model design and available data.

Democratisation of AI

With more efficient and less costly (relatively speaking) hardware being developed, more and more people can afford to utilize powerful AI. Small companies and research labs can now think about developing and applying AI solutions they could merely imagine before.

This disrupts the whole ecosystem: the playing field is levelled, innovation happens at a much faster rate, and the intensity of the competition is not limited to large corporations alone.

Affordable AI hardware lets smaller players innovate, levelling the field for all. (Image Source: Medium)

A New Arms Race: Infrastructure Defines Leadership

Companies with the ability to harness powerful computing hardware or create and customise such hardware now have the advantage. It is no longer a case of whose smartphone model is smarter. It is now a case of whose system is faster and more reliable.

In the year 2025, the AI challenge is no longer lines of code; instead, silicon, interconnects, and memory.

What does this mean for you: Reader, Developer, or Observer?

As someone who builds and implements large-scale and small-scale infrastructure, now is the time for you to take a look at your infrastructure investments and consider upgrading to more efficient technologies that can eliminate the latency and expense associated with current servers.

If you’re a tech leader, think about the impact on current and future scalability. “Hardware is now evolving at such a pace that the dangers of being left behind can be severe, especially for companies that want to be more than just industry leaders.”

If you’re tracking AI trends, A whole host of new services and applications can be expected. Faster and lower-cost AI solutions may lead to innovative and more intuitive solutions than were possible before.

NVIDIA Blackwell / Blackwell Ultra: A Safe-Money Play

The Blackwell family from NVIDIA leads the discussion as it offers overall throughput power with a software stack that the firms trust and uses fully. The recent benchmarks and figures from the vendors reveal that servers with the Blackwell architecture can speed up specific families such as mixture-of-experts and large transformers by one order of magnitude. It is for this reason that the tech press and analysts mention Blackwell again and again as the near-term throughput game-changer.

Why it Matters

For companies offering production-grade model inferencing capacity today, such as Blackwell, the ability to remove latency and expense from production model inferencing pipelines can be a game-changer within unit economics and overall user experience.

AWS Trainium / Amazon Nova / On-Prem AWS: Cloud at Speed

However, cloud providers do not just wait for demand. AWS ‘2025’ announcements, such as the Trainium accelerators with greater capacity and so-called AI Factories, demonstrate cloud providers scrambling to supply equal benefits of the 10x class server providers offer and more with cloud elasticity and cloud services benefits. For enterprises, this means they can enjoy next-generation acceleration without having to procure servers and employ server operations staff.

Why it Matters

For startups and organizations that would like to use OpEx instead of CapEx, having cloud access to high-speed chips means they can innovate and scale more easily and efficiently. More production solutions now use managed instances that abstract interconnects and firmware and tuning development.

AMD’s Helios Server And Integration With HPE: Standards And Ambition On a Rack Scale

“AMD’s Helios rack system design, now embraced by HPE for deployment starting with 2026 models, is a whole other approach: more open rack-scale servers with Ethernet interconnects and very large pools of HBM per rack. Helios delivers on the notion for extremely dense servers and offers an open stack solution for interconnects. The early participants demonstrate a deep interest in this method for research and enterprise servers.”

Why it Matters

Helios propels the industry towards choice. Not all customers want and require vendor-optimised fabric. With open rack solutions, hyperscalers, as well as universities and labs, can design around industry standards and perhaps offer reduced TCO.

Software And Hardware Co-Design: The Quiet Multiplier

However, one thing emerges across coverage and analysis: the greatest improvements happen when hardware experts and model developers start collaborating with each other from the beginning. When hardware and software co-design together, this creates opportunities for the hardware to offer primitives that can be leveraged by software and for software to influence the pipeline on the chip. Analysts would now refer to this as the “symbiotic revolution.”

What Matters

As a machine learning engineer, I look forward to more APIs and model forms tailored for specific silicon. This means that optimization decisions will lie between model design and silicon-related code.

Which Sectors are Affected First?

- Consumer Apps (Real-Time/Low Latency Is Best)

Voice assistants, translation booths for simultaneous translation, creative software enabling real-time creation, and interactive games prioritize low latency above almost any other. A 10x acceleration allows for lower latencies while enabling more complex models to be housed locally and in nearest-edge data centers. This leads to faster UX and unlocks new use cases that wouldn’t be viable before. - Advertisement, Recommendation, And Personalization: Throughput Counts

They handle millions of queries a minute. Faster hardware means lower cost per thousand inference calls and more complex models in ranking and personalization loops, a direct revenue driver for platforms and publishers. Expected action: immediate investment. - Finance And Trading (Time Is Money)

High-frequency trading strategies and risk management platforms operate in milliseconds. Faster inference and lower latencies translate to superior risk management and better algorithmic trading strategies. Financial firms continue to use custom racks and co-lo servers for optimising trading. - Healthcare Imaging & Diagnostics (Accuracy & Timeliness)

A faster and more economical processing of scans means that hospitals can expand their screening volumes and provide near-real-time diagnoses. This huge social and economic value proposition is where the benefits of advancements in hardware translate to public benefit. - Research, Science And Modelling (Scale Facilitates Discovery)

Large language models and multimodal models that used to be relegated to research purposes are being brought closer to mainstream use in scientific studies. Helios-style racks and cloud-based AI Factories make large experiments more affordable for universities and laboratories.

The Hard Problems: Supply, Sustainability, And Security

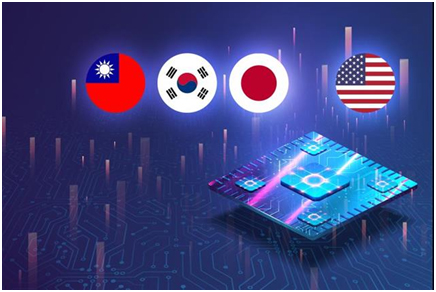

Supply Chain & Geopolitics

Chips don’t materialize out of thin air. Fab capacities and a typical limit for wafer sales to TSMC via geopolitics affect who gets access to new hardware when. This leads to a competitive advantage being determined by who gets access to the hardware first. A tighter integration between OEMs and fabs, with a focus on cloud providers, would be expected.

Energy & Cooling

Even with more efficient chips for a given operation, a tenfold increase in usable throughput invites much more compute and much more heat. Liquid cooling, use of waste heat, and data centre site design are going to become mainstream engineering issues. Any projects aiming for heat recirculation or liquid cooling would be ahead of this curve.

Security and Adversarial Risk

Campaigns

Faster inference and lower compute cost make attack simulations, adversarial example enumeration, and training models for malicious use cases cheaper. The defensive side needs to secure model use and watch for unusual inference activity with scaled governance.

The Talent Gap

High-performance system engineering remains a specialist field with a focus on collaborations between model code and compilers. Companies with a wide range of engineers involved in hardware, system software, and machine learning contexts are going to unlock more. A signal that model engineers with a silicon background are scarce.

AI faces chip limits, heat, security, and talent shortages. (Image Source: DIGITIMES Asia)

Practical Advice: What to Do Now (CTOs, ML Leads & Product Teams)

Audit your inference stack. Trace out current latency and cost-per-query. Determine where bottlenecks exist and can be remedied with new hardware.

Launch your first cloud instances. Workload test sensitivities using managed fast instances. A trial in the clouds cuts risk but proves real-world benefits.

Invest in profiling and co-design. You need to employ/hire engineers with expertise in model profiling. They would be a great asset for your success in reaching 10x.

Talking about sustainability means talking about capacity planning when thinking about racks hosted onsite. Liquid cooling and waste heat recovery are no longer optional when considering high density.

Closing: Performance is Now Infrastructure

Speed no longer resides only in model code or clever research papers. Today, in 2025, speed resides in racks, in firmware, and in the subtle battle between closed-speed and open-scale architectures. The firms that will prevail are those that recognize that hardware considerations are strategic, incidental.

This means that when investing in a new build of AI this year, consider asking three questions: would it be possible to test this new hardware cheaply? Would your software development group be able to co-optimise with this new hardware? And would it be possible to scale this hardware while considering cooling and governance?

Commonly Asked Questions

- Q: How are more recent semiconductor chips capable of processing AI models significantly faster than before?

A: New chips come with special architectures such as high bandwidth memory (HBM), faster interconnects for inter-chip data transfer, and tensor/matrix processing cores for dealing with massively parallel tasks typical in modern machine learning. This results in much more processing capability than a GPU and a CPU. - Q: Will this translate to more affordable running of the AI models?

A: Yes. As newer hardware pieces are more efficient and perform more computation per unit of power consumption (or cost), this brings down the price for each model. This widens opportunities for smaller firms and individuals to deploy larger models. - Q: Are there any negative aspects associated with energy consumption?

A: In fact, the converse: newer hardware is more energy efficient per calculation. People are optimizing for performance as well as for power efficiency because this makes larger-scale machine learning work more environmentally responsible. - Q: Does such hardware innovation imply a reduced importance for software and models?

A: Not at all. Hardware lays a foundation, but without strong software, optimized models, and smart engineering, one won’t be able to unlock this potential. Today we’re witnessing a synergy: hardware + software + architecture co-design. - Q: Are ‘10x faster’ speeds realistic?

A: Yes – but with caveats. Those benchmarks with tenfold gains are often focused on particular model families and use optimised software stacks. How much average improvement one might see depends on the workload. But in most cases, several-fold gains are possible today. - Q: Should I move everything to the new chips?

A: No. Pilot runs first on cloud compute instances. Assess cost, latency, and fidelity. Some LOs may be more amenable to this model than others. - Q: Will this kill smaller AI players?

A: The contrary. A lower price means more access. A cloud providing access to superior hardware and a secondary market for optimal inference engines enables smaller groups of developers to implement advanced functionality more quickly. This leads to more competition, not less. - Q: Does Helios pose a threat to Nvidia’s dominance?

A: What Helios brings to the table is a strong open-rack offering, particularly for customers who prioritise standards. While Nvidia has had a strong lead in this regard, offerings such as Helios help provide more diversity for customers. - Q: What won’t change quickly?

A: Breakthroughs in model research and algorithms are still a critical component. Hardware makes adoption easier and smoother, but architecture and data quality still matter.