A Historic Agreement Shakes Up the Semiconductor Platform

AMD and OpenAI reveal a multibillion-dollar infrastructure deal that makes a clear statement: compute is the crown jewel, and AMD wants more of it. Under the agreement, OpenAI gets access to six gigawatts’ worth of AMD GPUs, with the first large-scale rollouts, based on AMD’s upcoming MI450 family, slated for the second half of 2026. The agreement also includes a clause that would let OpenAI purchase up to about 10% of AMD, contingent on milestones (The Guardian).

AMD and OpenAI strike a landmark deal: 6 GW GPUs, MI450 in 2026, and a potential 10% stake. (Image Source: LinkedIn)

The shockwave hits Wall Street quickly. AMD shares surge, adding tens of billions of dollars to the company’s market cap as analysts re-price the firm for a potentially massive AI top line. Nvidia, meanwhile, still holds the high ground in modern compute. Its market value passed about $4 trillion earlier this year, which puts this transaction in context as an outright bid to shift the balance of power in the data center (Investors).

For perspective, AMD made about $26 billion in revenue last year, and this new deal has the potential to turn the company’s AI push into an even bigger business swing. The chip war is increasingly about longer-term supply deals, strategic investment, and compute-capacity politics, and less about product launches (Yahoo Finance).

Why It Matters: A Brief Primer

Compute is the fossil fuel of the information age. Platforms, models, and services need huge amounts of power and bespoke silicon to operate. The top company, accordingly, wins contracts with cloud providers, AI labs, web giants, and governments. That is not just bragging rights; it is repeat business, negotiating clout, and long-term power contracts. AMD’s deal makes it a candidate kingmaker for giant model rollouts, a role that had been the sole preserve of one company (Businessworld.in).

The Players And Their Crowns

- Nvidia: The de facto standard for training and inference on giant models. Its software stack (CUDA and broader developer mindshare), fab relationships, and relentless performance gains put it at the top of modern AI compute. Its market cap is testimony to that supply-side dominance (Reuters).

- AMD: The veteran competitor. It has expanded from desktop CPUs and discrete GPUs into data-center accelerators with its Instinct line and a more integrated approach. The OpenAI alliance pushes AMD beyond the role of chip vendor. It is now a provider, a partner, and potentially part-owned by one of the world’s best-known AI labs (The Guardian).

- OpenAI: The buyer with enough purchasing power and influence to move whole markets. Securing enormous AMD capacity reduces its reliance on a single supplier and widens its supply options for hyperscale training (The Wall Street Journal).

The “$26 Billion” Frame: What It Actually Signifies

The “AMD’s $26 billion bet” framing rests on a figure already tallied: AMD’s trailing revenue was approximately $26 billion last year. The deal brings into focus how the company can build on that foundation and convert it into sustained growth through data-center GPU shipments and long-term support arrangements. In other words, AMD isn’t spending $26 billion; it is betting its next growth cycle on turning its current scale into outsized AI revenue (Yahoo Finance).

Analysts already have a scenario in mind. Market commentary and analyst estimates suggest AMD could capture tens of billions of dollars in incremental annual revenue as MI-class GPUs ramp into production, and other estimates have AMD capturing a large share of new AI spending if the deployments go ahead. Those estimates sit at the high end and depend on execution, production volumes, and software ecosystem wins (Wccftech).

$26B marks AMD’s revenue base, now aimed at fuelling major AI growth. (Image Source: Indian Retailer)

The Technical War: Chips, Power, And Software

Training large models takes more than raw floating-point throughput. It needs memory bandwidth, interconnect scale, thermal efficiency, and a data-center design built to match. AMD’s MI450 architecture targets those needs: more compute density per rack, larger memory footprints, and efficiency gains that matter when deployed capacity is measured in gigawatts. OpenAI’s one-gigawatt facility design using MI450s is aimed squarely at those density and cost-of-ownership concerns (The Guardian).

But hardware will not win the war by itself. Developer tools, libraries, and ecosystem uptake make or break adoption. Nvidia’s software stack remains a formidable moat. To convert capacity into share, AMD must convince partners that its performance, reliability, and tooling are as good as or better than the incumbent’s in production workloads. That does not happen overnight, and it needs orchestration across silicon design, firmware, compilers, and cloud partners (The Guardian).

The Economics Of Geometry: Why Contracts Beat Chips

A single product launch sells promise. Long-haul infrastructure deals sell assurance. When a buyer of OpenAI’s class commits to hundreds of thousands of chips, measured in gigawatts of installed capacity, that contract pays for factory allocations, priority with vendors, and partner investment in tooling: money for the whole supply chain. AMD wins more than units. It wins market signaling and the opportunity to secure capacity ahead of the competition. That is worth a premium both in top-line forecasts and in investor sentiment (Investors).

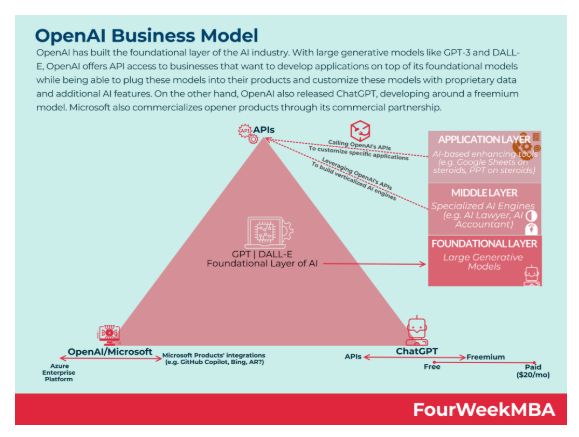

Big contracts secure AMD supply, signal market strength, and give it a capacity edge. (Image source: FourWeekMBA)

The Ripple Effects: Suppliers, Partners, Geopolitics

Enormous GPU sales ripple outward. The effects reach TSMC foundry demand, board assembly at partners such as Sanmina, and the power and cooling supply chains for new facilities. Nation-state export controls, licensing, and regional supply dependencies come into play too. And as companies diversify supply across AMD, Nvidia, and others, countries and cloud providers reassess their procurement strategies and policy responses. That is why this is a story about both business and geopolitics (Crunchbase News).

Human, Brief Note: Sense Of The Moment

Observe markets over time, and you witness human narratives: the upstart, the in-your-face challenger, the wary incumbent. Investors smell a re-rating. Engineers eagerly await the opportunity to build alternative systems. Cloud architects rerun the math on vendor lock-in. At its core this is a story about power: not electrical power, of course, but strategic and commercial power. AMD’s move can be read as a statement of intent: we will not be second best. We wish to lead (Investors).

Early Doubt And Actual Risks

Optimism carries risk. Analysts warn that forecasts often rest on optimistic uptake curves and supply assumptions. OpenAI’s expansion plans are large and capital-intensive; execution risk, factory yield, software maturity, and geopolitical license changes can blunt gains. And even if AMD secures huge orders, converting those into sustainable market share against entrenched developer ecosystems remains a tall order (Wccftech).

Quick Checklist: What To Watch Next

- Ship timelines: MI450 actual silicon availability and initial racks into production (The Guardian).

- Supply chain traction: TSMC and board-partner capacity milestones (Investors).

- Software leadership: Performance on actual training workloads versus leading stacks (The Guardian).

- Contract milestones: Any published triggers for the share warrants or revenue targets (The Guardian).

Technical Battle: MI450 Vs Nvidia Leadership

Let’s be real: silicon specs don’t win the game, but they set the playing field. Nvidia’s new Blackwell generation and the older H100/Hopper generation are the benchmarks. Nvidia combines hardware with a massive software stack: compilers, profilers, libraries, and a developer mindshare that teams do not abandon overnight. That software moat, plus NVLink and ultradense interconnects, makes Nvidia the default choice for much hyperscale training work (NVIDIA).

AMD’s MI450, on the other hand, presents a contrasting proposition built on performance per watt and total cost of ownership. The MI-class processors emphasize memory bandwidth, package density, and rack-level efficiency, exactly the dials customers want to turn when deployments are measured in megawatts and gigawatts. If MI450s deliver on those lab-to-rack metrics in real workloads, OpenAI’s bet becomes a powerful market signal that “alternative” silicon can cut operational costs without forcing a migration away from the software ecosystems customers rely on (Advanced Micro Devices, Inc.).

Two tough facts follow:

- AMD must reach parity or better on core training workloads (transformer throughput, memory scaling, inter-GPU synchronization).

- It must simplify developers’ lives — either by porting existing toolchains or by offering an attractive, low-friction migration path.

In case you missed it.

Nvidia demonstrated a 6x speedup in training speed using their new NVFP4 format.

NVFP4 is baked into silicon of the latest generation Blackwell chips.

There is almost no loss compared to the previous FP8.

Just more speed.

The Nvidia moat deepens.

— Jackson Atkins (@JacksonAtkinsX) October 6, 2025

The Choke Points: Foundries, Packaging, And The Hidden Supply Chain

Chips are born in fabs and packaged through supply chains, and each stage has its bottlenecks. TSMC is the workhorse of the industry. Demand for AI accelerators tracks demand for leading-edge nodes and for advanced packaging such as CoWoS and ABF substrates. TSMC reports record revenues and firm, ongoing demand, but that does not mean every customer gets what it needs on day one. Lead times, substrate shortages, and packaging capacity form natural chokepoints that favor those with contracted demand or special supply arrangements (Reuters).

That is why a multi-year hyperscaler contract matters: it locks in wafers, packaging runs, and board-level priorities. For AMD, the OpenAI contract can accelerate capacity commitments and blunt the “who gets served first” advantage that has served incumbents well in the past.

Chip supply bottlenecks favor players with secured contracts like AMD’s OpenAI deal. (Image Source: TOPBOTS)

Business Model: Why Contracts Matter More Than Smart Chips

A chip’s peak FLOPS matter less than sticky, proven deployments at scale. Long-term infrastructure deals turn future demand into present bargaining power. They enable AMD to negotiate better terms across its supply chain, coordinate production runs with TSMC, and ramp board partners and integrators in volume. For investors, recurring revenue from a large deployment revalues AMD from a component company to a cloud-scale infrastructure company. Expect investor narratives to shift from product-by-product to “AI infrastructure as recurring revenue” (Advanced Micro Devices, Inc.).

Three Scenarios: What’s Next

Blue-sky (AMD succeeds, ecosystem converges)

- MI450 shows competitive training and inference capabilities.

- AMD, supplied with certainty and backed by partners, wins additional hyperscalers.

- Nvidia leads but loses share on some edge training uses.

- AMD’s revenue and stock re-rating continues. This is the scenario today’s market snapshot reflects.

Base case (niche market bifurcation)

- The market settles around two main vendors.

- Nvidia owns high-end workloads and short-term mindshare.

- AMD owns big chunks where the cost of ownership matters (power-guzzling clusters, specialist inference).

- The industry becomes multi-vendor, and customers win through supply stability.

Bear (execution mistakes)

- MI450 runs into yield or software issues.

- TSMC or substrate constraints hold up deliveries.

- OpenAI ramps more slowly or shifts back to Nvidia.

- AMD’s re-rating evaporates.

- Investors punish the disappointment.

What Does It Imply For Crypto Enthusiasts And Non-Crypto Readers

Short answer: More compute options, not necessarily directly affecting blockchains, but with second-order effects that are meaningful.

- For crypto engineers: A lot of cheap compute means better tooling: faster backtests, smarter oracles, more sophisticated trading models, and better on-chain analysis. Firms building MEV bots, liquidation prediction models, or risk-scoring oracles can use less expensive, denser GPU clusters. Even so, the shift of GPUs away from proof-of-work mining and toward AI had already changed the economics; serious miners now turn to ASICs or cloud computing. So this transaction doesn’t resuscitate mining. It reorders the competitive dynamic for compute-intensive crypto services and trading boutiques.

- For the rest of us: Get ready for cloud providers to introduce more differentiated pricing strategies. Multi-vendor supply removes single-vendor chokepoints, which eventually benefits enterprise budgets and project timelines.

Cheaper compute aids crypto tooling, not mining, and brings more flexible cloud pricing for everyone. (Image Source: Coinbase)

Timeline: What To Watch And When

- H2 2026: First MI450 deployments on the horizon. Watch for first performance benchmarks and public case studies (Advanced Micro Devices, Inc.).

- Next 12 months: Monitor TSMC’s packaging capacity disclosures and CoWoS output guidance; both constrain the ramp rate (Reuters).

- Earnings cycles: Monitor AMD’s commentary on booked orders and AI accelerator revenue; this will tell you whether contracts convert to cash.

- Developer benchmarks: LLM training and inference results in production settings will drive adoption. Community testing and third-party lab data should become visible several months after rack deployment.

Policy And Geopolitics: The Quiet Background

Tariffs, national industrial policy, and export controls all matter. Supply diversification weakens any single supplier’s leverage, but semiconductor geopolitics is now as much a boardroom and capital issue as a government one. Countries that are home to fabs, data centers, and advanced packaging will sit at the new industrial table.

Practical, Fast Conclusions

- If you are an investor: Watch execution metrics and TSMC and packaging supply indicators. Contracts can re-rate companies, but only if deliveries and performance catch up (Advanced Micro Devices, Inc.).

- If you are an engineer or architect: Demand hard targets and migration tooling. The software story will tell you how easy it is to migrate workloads (NVIDIA).

- If you’re in crypto: Plan for less expensive training and inference at scale. Use it to innovate faster on models that guide trading, on-chain analysis, and security tooling.

FAQ

Q: Will GPUs get cheaper now?

A: Not immediately. Large contracts lock up supply rather than lower prices. Over time, new capacity and competition can push prices down, but wafer and packaging constraints limit near-term decreases (Reuters).

Q: Does this place OpenAI in AMD’s debt?

A: The warrant aligns incentives but does not bind OpenAI too heavily. Large buyers such as OpenAI diversify to avoid single-vendor lock-in (Advanced Micro Devices, Inc.).

Q: Is there a way Nvidia can retaliate in the form of price cuts or software plays?

A: Yes. Incumbents defend market share with pricing, single-source relationships, and software competitive advantages. Competitive responses will arrive on numerous fronts (NVIDIA).

Q: What should customers of the cloud do today?

A: Prioritize procurement flexibility. Ask providers to offer multi-vendor support, migration paths, and total cost of ownership estimates on actual workloads.

Q: Does the agreement mark the end of Nvidia?

A: No. Nvidia remains the leader in market share, ecosystem, and mindshare. This deal challenges that lead, but it doesn’t end it overnight. Nvidia’s scale and software remain titanic strengths (Reuters).

Q: Is AMD spending $26 billion on nothing?

A: No, the $26 billion refers to AMD’s existing revenue base. This is less a question of AMD spending $26 billion in cash up front and more one of AMD leveraging its existing business and new deals to grow (Yahoo Finance).

Q: Is it really possible for OpenAI to own 10% of AMD?

A: The deal includes a warrant structure that could allow OpenAI to purchase as much as around 10% of AMD if certain milestones are met. That would be an unusually tight alignment of buyer and supplier incentives (The Guardian).

Q: How large is the AI chip market?

A: Market leaders put the market for AI accelerators at hundreds of billions of dollars in the next few years; AMD executives refer to it as a multi-hundred-billion-dollar opportunity (Businessworld.in).

Conclusion: A Throne Never Stays Empty For Long

This battle between Nvidia and AMD is not a temporary product war. It is a strategic, long-term struggle for control of the pillars of the future. In making its pact with OpenAI under a multi-gigawatt, multi-year agreement, AMD is not merely selling chips. It is betting its future on becoming an authentic kingmaker in high-performance computing.

Whether or not this bold bet, built on a $26 billion revenue base, dislodges Nvidia from its trillion-dollar crown, one thing is for sure: the game of silicon is no longer about who can make the most powerful chip but about who controls the ecosystem, the supply chain, and the strategic alliances that set the margins on the next level of intelligence infrastructure.

To investors, policymakers, and developers, this is no static moment. It’s the tremor of tectonic plates beneath the digital economy. Thrones can be broken, stolen, or confiscated, but they never stay vacant for long.