Generative AI is no longer solely a productivity tool and creative aid. As of 2025, it sits firmly inside the modern security arsenal. States are using it for intelligence analysis, cyber warfare, and psychological operations. Terrorist groups are using the same capabilities for propaganda, recruitment, and disinformation.

The barriers to entry have collapsed. What once required state-level resources can now be achieved with consumer-grade AI platforms, open-source models, and cloud access. This is upending how power functions in the digital era. (disarmament)

This is not a future threat. It is an existing reality.

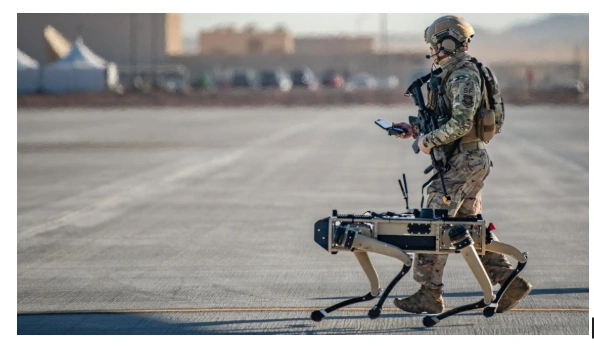

In 2025, generative AI is a powerful tool for both states and militants, reshaping digital power. (Image Source: United Nations University)

The Battle in This New AI Realm is Invisible but Constant

Weaponised AI in 2025 rarely takes the form of a missile or a drone. It takes the form of a convincing video. A localised message in a translated voice. A fake spokesperson who never existed. A barrage of coordinated messages pushed out at scale.

Generative AI thrives in information-dense settings such as social media sites, encrypted chat apps, and online message boards. These environments let its output spread rapidly without being detected. The content also does not need to be perfect when it enters circulation. That is where the real power lies.

Why 2025 is a Turning Point

Capability by itself is not the point of change. Accessibility is.

Using large language models, image generators, and voice synthesisers now requires minimal technical expertise. Prompting replaces programming. Templates replace technical know-how. Terrorist groups no longer need separate media divisions with specialised staff. A small team can now do the work of an entire department.

For nation-states, the same capabilities deliver speed and scale. Their intelligence communities leverage AI to examine huge datasets, detect sentiment shifts, and forecast narrative impact in real time. Cyber teams employ AI to assess vulnerabilities and conduct reconnaissance.

Artificial intelligence will not replace strategy. It will speed it up.

How Militant Groups Are Leveraging Generative AI Today

Militant groups apply generative AI across three broad domains: messaging, recruitment, and psychological effects.

Dynamic Propaganda

Generative AI lets content change quickly. Messages are reworded in response to audience reactions. When a video fails to attract views, a better one appears within hours. This nimbleness leaves traditional counter-propaganda efforts slow and less effective.

Localised Recruitment on a Global Scale

AI translation and voice tools help groups speak to local audiences without sounding non-native. Accents match regions. Cultural in-jokes sound real. Recruitment no longer depends on a central source; it spreads by word of mouth.

Psychological Pressure Through Volume

Often, persuasion is not the objective. Exhaustion is. Flooding online spaces with conflicting narratives breeds confusion. When people stop trusting the information they see, they disengage. That leaves a vacuum which extremist ideology can fill.

Militant groups use AI to spread adaptable propaganda, recruit globally, and create confusion online. (Image Source: Global Network on Extremism and Technology)

Differences in How Nation-States Employ AI

States have wider agendas and more resources. Their uses of generative AI span intelligence, cyber operations, influence operations, and other domains.

Narrative Control Rather Than Persuasion

- State-sponsored AI campaigns often aim to shape how events are framed rather than what people think. Minor changes in framing can sway elections, markets, and government policy. AI lets these campaigns run around the clock and adapt to public response.

Cyber Operations With AI Support

- Generative tools help attackers exploit system weaknesses, craft personalised phishing attacks, and conduct reconnaissance. They make attacks quieter, quicker, and more difficult to detect. Attribution becomes harder when AI is creating the content.

Strategic Ambiguity as a Characteristic

- Artificial intelligence provides a way to achieve plausible deniability. When information is spread over various sites, it is not easy to connect it to a given actor.

The Deepfake Problem is No Longer Theoretical

In 2025, perfect deepfakes are not a requirement. Getting there first is all that matters.

AI-generated videos and audio files spread more quickly than fact-checking systems can cope with. Even after they are debunked, the first impression sticks. The correction does not reach as far as the original content. This creates a new asymmetry: the truth takes longer than the lie.

For security institutions, this is a major concern. A single clip may spark a protest, stock market fluctuation, or international conflict before a relevant response can be given. (weforum)

Why Detection is Struggling to Keep Pace

AI detection tools do exist, but they face three major challenges.

- First, generative models are improving quickly. They are a moving target for detection tools.

- Second, compression and redistribution weaken the signals that detection systems rely on.

- Third, trust is falling. Even authentic content is doubted. Such a state of affairs, referred to sometimes as “reality fatigue,” is an added benefit to bad actors.

When everything might be fake, nothing can be trusted.

The Regulatory Gap Expands

Governments are well aware of this risk, but regulation trails capability.

Nearly all existing AI rules are concerned with data privacy, transparency, and consumer protection. Very few cover malicious use in geopolitical and extremist contexts. Jurisdiction adds another layer of complexity. AI systems have global reach, but laws are country-specific. Militant organisations can easily take advantage of this.

Regulation moves slowly compared with code.

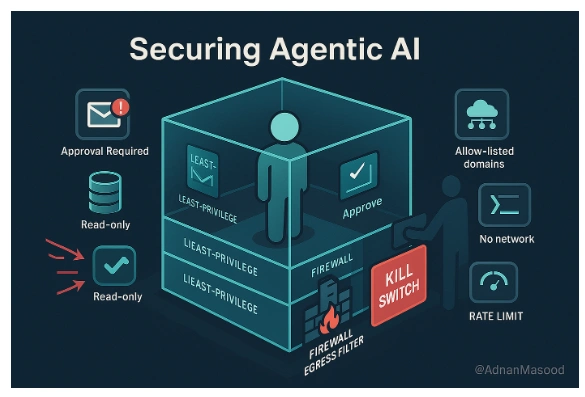

AI regulation lags, leaving gaps that militant groups can exploit. (Image Source: Kiteworks)

Technology Firms Are Right in the Middle of All This

Platforms hosting generative AI tools are increasingly under pressure. They have to strike a balance between innovation and safety responsibilities.

Some adopt stricter usage policies and enforcement systems. Others rely on post-hoc moderation. Neither approach fully solves the problem. Open-source models add another layer of complexity. They speed up innovation but decentralise control. Once released, they can be neither removed nor withdrawn. (wikipedia)

Such an impasse typifies AI governance in 2025.

Economic and Social Repercussions Go Beyond Security

AI weaponisation is not only an issue for governments and terrorists. It affects everyday life.

Markets respond to AI-generated misinformation. Brands suffer reputation attacks fuelled by synthetic content. Journalists face heavier workloads to confirm sources. Citizens have a harder time distinguishing signal from noise. Trust will become the most precious and delicate asset.

Why Australia Ought to Be Very Concerned

Australia is not immune to these dangers.

As a digitally networked democracy with broad regional influence, Australia is exposed to AI-powered information operations. Elections, financial markets, and public discourse make up a potential attack surface.

Australia faces AI-driven influence across elections, markets, and the Indo-Pacific. (Image Source: The Conversation)

Australian authorities are already tracking misinformation. But scale and complexity are now issues. Artificial intelligence alters these dynamics. The security environment in the Indo-Pacific region is also becoming more relevant. Influence operations using AI are increasingly borderless.

The Regulatory Race: Governing Generative AI in 2025

The starting point is clear: in December 2025, governments and their regulatory bodies are moving more rapidly than before, yet still out of step with the technology they attempt to control. The United States sets out a national AI policy in an executive order, while the European Union faces challenges in rolling out and implementing the AI Act. Meanwhile, industry guidance and standards come into effect in a patchwork manner.

Governments Respond, But at Varying Speeds

A reality check now confronts regulators: laws and agreements move on a public schedule, but model updates ship overnight.

In December 2025, the United States issues an executive order focused on developing a national AI policy and reducing the fragmentation created by differing state laws. The order encourages government agencies to work towards synchronised rules and enforcement standards. The goal is to standardise regulation and remove friction, moving from an ad hoc approach to a national one.

In Europe, the AI Act remains the model for risk-based regulation, but its obligations are still being phased in over 2026-2027. Negotiations among member states and interest groups shape how strictly high-risk uses are enforced, and the Act itself excludes systems used exclusively for military, defence, or national security purposes. The staggered pace of implementation leaves a gap that malicious actors can exploit.

In other regions, countries proceed at different speeds. Some establish stricter rules and liability for targeted content, while others prioritise their economies and adopt less aggressive regimes. The result is “regulatory arbitrage”: actors who want to use generative technology with harmful intent can operate from the most permissive jurisdiction.

Industry and Platforms: Mitigation, Monitoring, and Messy Trade-Offs

Technology firms are on the front line. They must weigh how much policing to do themselves and how much to leave to their users and the authorities.

Some platforms invest heavily in detection systems, provenance metadata, and rapid removal teams. Others prefer to constrain specific capabilities at the point of creation. Both approaches involve trade-offs. Excessive moderation can hinder innovation and speech. Insufficient moderation allows problematic content to proliferate. Industry leaders argue for clearer rules established through legislation.

Open-source models are a particularly difficult case. They fuel innovation and academic research, but they do not fit into any model of centralised control. Once a powerful model is released into the wild, companies and governments have no practical way to ‘recall’ it. This calls for another reality check: the focus has to shift to downstream controls and better methods of attribution.

Tech firms must balance AI moderation with innovation, while open-source models demand stronger attribution and control. (Image Source: Medium)

Detection and Attribution: The Technical Arms Race

Detection technology improves, but it is outpaced by model breakthroughs. Three technical facts make defence harder.

First, models grow more subtle and fingerprint-resistant with each update. Second, malicious actors manipulate content, for example by re-compressing a video, to evade detection systems. Third, campaigns operate at a scale where human analysis alone is not feasible.

To address these issues, cybersecurity specialists combine automated identification, human verification, provenance metadata, cross-platform intelligence sharing, and legal tools that hold perpetrators accountable. Public-private collaborations currently focus on threat sharing and quick verification. They increasingly resemble emergency response systems rather than traditional tech partnerships.

International Cooperation: Messy, Necessary, and Incomplete

Weaponised generative systems have no respect for borders. A single operation can reach across many networks and geographies.

Global institutions and blocs promote a common approach: a shared labelling standard for synthetic material, joint threat analysis, and coordinated sanctions against countries that use these tools for malicious purposes. The challenge is geopolitics. The great powers sit on both sides of the problem, as rule-makers and as holders of the most advanced capabilities.

Nevertheless, regional treaties and industry associations promote step-by-step progress. Practical gains include standard formats for provenance information, forensic toolkits, and quick response teams. These shorten the time between detecting an attack and mitigating it.

Examples of Real-World Policies With Promise

Policymakers are testing several levers. Some have an immediate effect. They include:

- Mandatory Provenance and Watermarking: Provenance or watermarking information attached to all generated content makes it simpler to trace content and to distinguish authentic material from forgeries. Disinformation operations become easier to dismantle, though implementation will need global cooperation.

- Rapid Response Threat Exchange: A way for governments and social media companies to exchange information about suspicious content and remove it quickly before it can go viral.

- Model Safety and Licensing: Regulators are pushing for a higher level of safety for high-risk models, through risk assessments and licensing requirements for larger models. Licences come with obligations and audit trails.

- Public Education and Media Literacy: Governments have programs in place to educate people on how to think before posting and check information before sharing it. If people are educated, they will make life harder for misinformation actors.
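As a rough illustration of the provenance item in the list above, the sketch below binds a generator label to a content hash and signs the record, so a verifier can tell whether the bytes or the record were altered. It is a minimal sketch under stated assumptions, not a description of any deployed standard: the function names, the `generator` label, and the shared `SIGNING_KEY` are all hypothetical, and an HMAC stands in for the public-key signatures a real provenance scheme would use.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for illustration only. A real deployment would
# use public-key signatures so that verifiers never hold the signing key.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a provenance record binding a generator label to the content hash."""
    record = {
        "generator": generator,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialise deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the record is untampered and matches the content."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest.get("signature", ""))
    content_ok = claimed.get("sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


clip = b"example synthetic clip bytes"
manifest = make_manifest(clip, generator="example-image-model")
print(verify_manifest(clip, manifest))         # True
print(verify_manifest(clip + b"x", manifest))  # False: content was altered
```

Because the check depends on an exact byte hash, any re-compression or re-encoding of the file would also make verification fail. That is one reason a scheme like this would need to be paired with other signals rather than relied on alone.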

Each of these steps can help, but all can have negative consequences if over-applied.

Security institutions, for their part, combine technical and human elements. They conduct red-teaming exercises with the same generative tools an attacker would use. They train analysts to detect subtle indicators of synthetic content. They develop toolkits to verify content quickly and to communicate with the public in a way that calms panic when misinformation emerges.

Crucially, they pair technical mitigation with public messaging. When a high-impact fake is discovered, admitting uncertainty early helps limit the damage from a video already in circulation. Trust is gained through humility and speed.

Ethical & Civil Liberty Trade-offs

Control and defence pose tough questions.

How far can content be monitored before monitoring becomes surveillance? Who gets to define which content counts as harmful? Can a government or social platform filter content without introducing bias?

Policy design increasingly incorporates safeguards: judicial oversight for major take-down notices, third-party auditors for enforcement, and transparent reporting. The goal of these safeguards is to protect civil liberties while combating organised harm. The line is very thin and always politicised. (arxiv)

AI control must balance civil liberties with safeguards like oversight, audits, and transparency. (Image Source: LinkedIn)

Practical Steps for Australian Institutions and Citizens

Overall, Australia finds itself in a strategic position. Here are steps that make a difference now:

For Government:

- Push regional standards on provenance and threat intelligence sharing.

- Fund forensic laboratories to quickly confirm high-impact content.

- Encourage media literacy programs that build digital scepticism.

For Platforms:

- Give priority to provenance metadata and invest in cross-platform takedown coordination.

- Provide a simple verification tool for high-risk targets such as electoral commissions and newsrooms.

For Citizens:

- Check before posting. Use reverse image search tools and official sources for news stories.

- Follow reliable sources for updates rather than sharing unverified sound bites.

- Report suspicious content; coordinated flagging leads to quicker action by platforms.

Such steps will not bring an end to this problem, but they will reduce harm and make weaponisation more expensive.

What a Resilient Future Might Look Like

A resilient information ecosystem will include technology, law, and culture.

Technology provides provenance, detection capabilities, and rapid verification. The law provides pathways to hold perpetrators accountable and to demand safer uses. Cultural shifts in media literacy, professional standards of verification, and good corporate behaviour build social resilience against manipulation.

The interplay of these factors is more important than any individual law or model change.

Case Study Overview: Handling a Phoney Clip in 2025

A provocative video emerges on a variety of sites, featuring a public figure making inflammatory statements.

- Journalists flag the clip and send it to a verification hub.

- Forensic analysts examine metadata and undertake deep fingerprint analysis.

- A platform takedown removes many of the reposts.

- The authorities put out a brief factual notice: “We are investigating.”

- Forensic tests establish a synthetic origin, and tracing points to an origin server in another country.

- International partners work in concert on follow-up responses and attribution, while the platforms add context links pointing to the debunk.

This sequence is not a perfect strategy, but it is a playbook that limits damage more quickly than the ad hoc responses of previous years.

Conclusion

In 2025, generative AI is no longer on the periphery of global security but is instead an integral part of it. Terrorists use its speed and scale to control attention, while nation-states use it to affect behaviour and gain strategic leverage. The problem lies not in the technology itself but in how quickly it warps truth, trust, and perception.

The way forward calls for balance. Governments have to regulate without choking innovation. Platforms have to respond more quickly and with transparency. Citizens have to teach themselves to hit pause, check, and challenge what is on their screens. Resilience in this case hinges on collective responsibility.

The future of AI is still unfolding. Whether it fuels instability or helps build collective security will be shaped by decisions in policy circles, in server rooms, in newsrooms, and in online spaces.

Frequently Asked Questions (FAQs)

- What Does AI Weaponisation In 2025 Mean?

It refers to the use of generative AI tools with the intent to influence, disrupt, manipulate, or destabilise a society, a government, or an institution.

- Are Terrorist Or Militant Groups Utilising State-Of-The-Art AI Technology?

Yes. Much of the technology is affordable and widely accessible. Technical requirements have eased considerably, lowering the barrier to entry.

- What Makes This Different From Other Misinformation Campaigns?

Speed, scale, and agility. AI enables campaigns to react in real time and reach highly targeted audiences with pinpoint accuracy.

- Can Regulation Prevent Misuse Of AI?

Regulation can help, but effective enforcement and international coordination are essential for meaningful impact.

- Is AI Weaponisation Primarily A Future Threat?

No. It is already influencing information flows, security decisions, and public trust in 2025.

- Will Regulation Halt Weaponisation?

Not on its own. Regulation can mitigate certain risks, but enforcement, international cooperation, and technical safeguards must work together.

- Can Provenance Systems Be Faked?

Attackers attempt to do so. This is why provenance systems must rely on strong cryptography and be combined with network-level tracing and legal mechanisms. No single control measure is sufficient.

- Should Social Platforms Prohibit Generative Technology?

Broad bans often produce negative consequences and push activity underground. Targeted regulation, safe defaults, and effective enforcement are more practical approaches.

- How Soon Will We See Effective Global Standards?

Progress is slow and uneven. Incremental agreements around provenance and information sharing are more likely in the near term, while full harmonisation may take years and will be shaped by geopolitical realities.