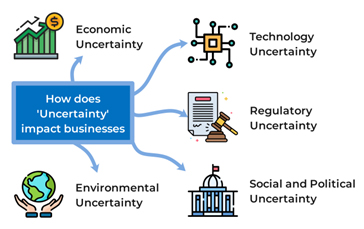

AI influences recruitment tools, medical diagnoses, financial approvals, and government services. Adoption is accelerating, and regulatory frameworks have struggled to keep up.

New York has just changed the equation.

The state's new AI safety act contains some of the most explicit and enforceable rules on the use of advanced AI in force today. Its key goals are risk prevention and the reduction of real-world harm.

Although the law applies in New York, its reach does not stop there.

For tech leaders, startups, and consumers worldwide, this moment signals a change already under way. AI regulation is no longer theoretical; it is operational and unavoidable.

This is why New York’s action is already shaping what responsible artificial intelligence will look like in 2026. (governor.ny)

New York’s AI safety law marks a global shift toward enforceable, responsible AI in 2026. (Image Source: TechCrunch)

The Core Facts: What the Law Does Right Now

New York’s AI safety law applies to high-impact and high-risk AI systems. These are systems and algorithms that can affect significant decisions or outcomes in the following areas:

- Employment decisions

- Credit and loan approvals

- Healthcare assessments

- Public safety and essential services

- Large-scale consumer data processing

The law covers high-impact AI in employment, finance, healthcare, public safety, and data. (Image Source: Yoshua Bengio)

The law aims to ensure that organisations deploying such systems accept responsibility for outcomes, not just for innovation.

In essence, the law rests on three pillars:

- Mandated Risk Assessment

Companies must assess the foreseeable risks before implementing AI technology.

- Defined Accountability Relationships

Blame rests with decision makers, not computers.

- Ongoing Oversight And Transparency

Artificial intelligence models have to be continuously monitored after deployment.

Such a framework moves AI out of the experimental realm and treats it as infrastructure.

Why New York’s Approach Stands Out

Many places discuss ethics in AI. Few enforce ethics in AI.

The New York statute focuses primarily on effect, not intent. It boils down to one question:

If this system fails, who gets hurt?

This is significant.

The law groups AI systems by their impact on people, regardless of size or brand name. A small algorithm used maliciously can raise more concerns than a larger one used for good.

This resonates across the world since it reflects how AI works in reality.

Voluntary Ethics vs. Enforced Responsibility

Until now, the governance of AI has been based largely on voluntary principles.

- Firms published ethics pages on their websites

- They promised fairness

- They audited themselves

New York’s law goes beyond that point.

It attaches legal consequences to negligence, lack of supervision, and avoidable harm. The incentive is now very clear:

Build responsibly or not at all.

It also sets a benchmark for organizations operating in the US, Europe, Africa, and Asia. A standard set by one state can become the standard for the whole world.

Why 2026 Becomes the Turning Point

The timing is important. By the year 2026:

- AI systems no longer merely assist in decision-making.

- AI systems influence outcomes directly.

- Companies automate entire business workflows.

- Governments use AI systems in the delivery of services.

- Consumers engage with AI systems daily without even realizing it.

New York’s law comes at a time when AI tools are evolving into infrastructure.

That makes this legislation more about stability than control. It treats AI like electricity, banking, or aviation: potent, useful, but dangerous if not regulated.

The Global Ripple Effect Begins Immediately

The ripple effect means that even firms without offices in New York are affected.

Why? Because New York influences:

- Financial markets

- Technology procurement

- Global enterprise standards

- Legal risk frameworks

A company that cannot meet New York’s safety expectations raises uneasy questions among investors, partners, and clients.

Risk teams already address this by aligning compliance across the whole organization globally rather than region by region. That is how local laws rise to the status of global benchmarks.

New York’s AI law instantly impacts global firms, turning local rules into worldwide standards. (Image Source: Robert Stevenson)

Responsible AI Becomes More Than a Buzzword

Years ago, ‘responsible AI’ was just a nice-sounding phrase. Now it becomes actionable.

The New York framework obliges an organization to define:

- What harm looks like

- How bias manifests in reality

- Where automation pushes the bounds of ethics

- When human supervision becomes mandatory

This brings transparency to internal debates. Lawyers sit at the same table as engineers. Product managers answer ethical questions alongside technical ones.

AI development adjusts slightly. Trust increases substantially.

Business Reality: Compliance Or Consequences

Some argue that regulatory environments hinder innovation. On the contrary, uncertainty kills innovation.

A clear set of rules enables firms to plan. They understand what is acceptable. They know where the red lines are.

For businesses, the provisions of the law result in the following immediate actions:

- Evaluation of AI-Facilit

- Charting automated decision chains

- Recording model behaviour

- Training teams on AI risk awareness

These efforts have costs. But lawsuits, damage to reputation, and consumer backlash cost much more.

Compliance beats costly lawsuits and reputational damage. (Image Source: LinkedIn)

What This Law Means and Why It Relates to You, Even If You

Governments around the world face the same dilemma:

- They want innovation.

- They fear harm.

- They often lack the technical capability.

The New York system provides a useful blueprint.

It is unambiguous. It deals with concrete scenarios. It allocates responsibility clearly. This makes it adaptable across legal systems, including common law jurisdictions such as Australia, the UK, and parts of Africa.

The law matters to policymakers because it offers an answer to one of their most important questions: how can AI progress without putting people at risk?

The Human Side of AI Safety

AI safety matters because there is a human story behind every regulation.

- A candidate denied employment by an automated system.

- A patient wrongly classified by a predictive model.

- A consumer denied access without a reason.

The New York statute focuses on these experiences and recognizes that AI failures compound faster than human errors. One faulty system can affect millions of people within hours.

That realization puts AI safety in a different light altogether.

AI failures impact people at scale, making oversight vital. (Image Source: British Safety Council)

Transparency Becomes Essential, Not Optional

The law promotes transparency without necessarily requiring full disclosure of proprietary models. Rather, it focuses on outcomes and explanations.

For transparency and trust to exist, we must ask:

- Why did the system make this decision?

- What data influenced the result?

- How can errors be challenged?

This will not obstruct innovation. It will increase trust. And trust becomes the most valuable currency in the adoption of AI.
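For teams wondering what answering those three questions might look like in practice, here is a minimal Python sketch of a per-decision record. Everything in it is an assumption made for illustration: the `DecisionRecord` class, its field names, and the example values are hypothetical, and nothing here is a format prescribed by the New York law.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical record stored for each automated decision."""
    subject_id: str           # who the decision is about
    outcome: str              # what the system decided
    reasons: list[str]        # why the system made this decision
    data_sources: list[str]   # what data influenced the result
    appeal_channel: str       # how the decision can be challenged
    decided_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def explain(self) -> str:
        """Return a plain-language summary for the affected person."""
        return "\n".join([
            f"Decision: {self.outcome}",
            "Why: " + "; ".join(self.reasons),
            "Data used: " + ", ".join(self.data_sources),
            f"To challenge: {self.appeal_channel}",
        ])


# Illustrative values only
record = DecisionRecord(
    subject_id="applicant-001",
    outcome="loan declined",
    reasons=["debt-to-income ratio above threshold"],
    data_sources=["credit report", "declared income"],
    appeal_channel="request a human review",
)
print(record.explain())
```

Keeping the reasons, data sources, and appeal route together in one record means an explanation can be produced later without exposing the model itself.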

Why Experts and Non-Experts Both Pay Attention

Experts gain new models for governance and compliance. The law is useful for laypeople too.

It signals that someone is watching the machines that make life-changing decisions. That emotional reassurance matters no less than technical accuracy.

A Patchwork Of Regulation: Why New York’s Law Matters Now

One of the greatest challenges today is the lack of harmonization in AI governance: different parts of the world are pursuing different paths.

Within the US, the federal government has pushed for a nationwide AI framework that balances innovation and oversight while also preempting state laws.

Meanwhile, states like New York and California are pressing forward with their own standards. California’s Transparency in Frontier AI Act already requires safety disclosures and incident reporting from major developers.

This means that businesses operating in the US must prepare for different compliance criteria depending on the state in which they operate. Some view this as an inconvenience. Others believe it indicates the kind of regulation that will follow in the global market.

For global operations teams, “New York compliance” no longer means compliance with a single U.S. state. Rather, it means being ready for how governments expect technology to behave worldwide.

Impact of The Law On the Industry Today

In cities such as New York, Sydney, London, and Singapore, tech entrepreneurs are studying the law for practical takeaways:

- Product design teams now incorporate safety considerations in the initial stages of product development.

- Risk teams analyze how automated decisions affect actual human outcomes, from credit approvals to the provision of community services.

- Attorneys build compliance plans that satisfy state, national, and international regulations.

For many organizations, this means revamping their internal playbooks. Safety strategies, incident reporting procedures, and monitoring procedures are now mandatory, not optional, aspects of product development and launch.

Incident reporting is also critical. Under New York’s law, developers are required to report severe incidents such as harmful conduct, data breaches, and misuse of the system within a specified time. This forces companies to address incidents as they happen, not after people have been harmed.

Industry Impact: Firms embed safety and report incidents to ensure global compliance. (Image Source: Compliance Hub Wiki)

What Other Governments are Doing

New York’s model has drawn the attention of policymakers around the globe due to its pragmatic and enforceable approach.

Take the European Union, for instance. The EU AI Act has already put forward “one of the most comprehensive frameworks ever proposed globally.” It categorizes AI systems by risk level, prohibits uses of AI that violate human rights, and requires transparency and appropriate governance for high-risk AI.

This means that companies outside of Europe must already think about EU compliance when they serve European customers. The EU’s approach fits with the principle of applying local law where the consumer is affected, regardless of where the company operates.

In Asia, countries like Japan, Singapore, and India are also developing strategies that put ethical innovation and competitiveness at their heart. This multipolar landscape shows that the future of technology governance does not belong to one jurisdiction. Early leaders like New York and the EU drew up the blueprint.

Australia, for example, has indicated that it plans to embrace responsible tech standards. Australian regulators and lawmakers keep a close eye on developments in places like New York as they weigh how far to move on their own regulation. The guiding values of accountability, transparency, and human equity are in keeping with Australian digital rights policies.

Enterprise Leaders: Compliance and Opportunity

Smaller technology companies typically pose a crucial question:

“Does safety regulation slow innovation?”

The short answer is: Not if it’s done correctly. It transforms innovation, rather than stifling it.

For those with an international presence, timely compliance can become a key differentiator.

Consider aviation safety as an example. Nobody operates an aircraft without following extensive testing procedures, because safety is an enabler of trust. A strong safety strategy assures clients, collaborators, and funders that an organization values trust over risk-taking without accountability.

Transparency itself becomes part of brand value. Companies that explain how their systems make decisions build stronger relationships with users.

Society and Public Trust

Safety legislation does not only affect business. It also shapes public expectations.

In every community, from Australia to New York, people ask:

- Do I trust an automated decision that impacts me?

- Who gets the blame when something goes awry?

- What are my options if I am harmed?

These are not abstract questions. They affect employment, access to healthcare and loans, fairness in the legal system, and civic participation.

The law passed in New York provides clearer answers for its citizens: companies are now answerable and face consequences if they fail. The law sets standards for transparency, fairness, and oversight. This can increase public confidence in technology.

When people see well-managed systems, they adopt them more readily.

Trust: New York’s law boosts public confidence through accountability and transparency. (Image Source: PwC Australia)

What This Means for Australia and Similar Markets

The issue has great relevance for professionals and policymakers in Australia.

There is no general, enforceable AI safety law in Australia. However, Australian regulators and lawmakers are closely following developments such as New York’s law. Its key principles are now being considered in any region that wants the benefits of technology without the pitfalls.

Australian organisations using high-impact systems should prepare to:

- Audit where automated decisions impact individuals

- Record risk and safety procedures

- Build monitoring systems under human control

- Engage proactively with regulators

Such principles are essential for the protection of users and organizations alike.

The Future: What Comes Next

Although New York’s law has already taken effect, much work lies ahead. According to policymakers’ forecasts, emerging trends will include the following:

- Cross-Border Cooperation

Legislators will aim to harmonize standards so that companies can grow without facing conflicting laws.

- Industry-Specific Codes Of Practice

Healthcare, finance, transport, and law enforcement could create their respective guidelines.

- Certification And Third-Party Audits

Independent review could also become the norm for compliance, much like financial audits.

- Public Education And Literacy

Understanding the way automated decisions function is going to be as important as the legislation that regulates them.

All of these trends make one thing clear: responsible tech is a never-ending process, one that incorporates checks, balances, and human values from start to finish.

Conclusion

Although New York’s AI safety bill began as a local legislative effort, its impact goes worldwide. This is part of a broader transformation in the way that society seeks to regulate complex technology, not out of ideological aspirations, but out of risk management, accountability, and the public interest.

For businesses, it means learning how to build systems differently. For users, it means being able to trust these systems more. For policymakers, it offers a model to learn from.

In the year 2026, responsible technology is no longer a mere philosophical notion. It has become a concrete and enforceable norm across the globe.

Frequently Asked Questions (FAQs)

- What is the New York AI safety law trying to prevent?

Ans: It seeks to mitigate foreseeable harm arising from the use of high-risk AI systems in real-world decision-making.

- Is the applicability of the law restricted to large AI systems?

Ans: No. The law applies to all AI systems that create significant risk, regardless of their size, complexity, or brand.

- Will this act impact businesses outside New York?

Ans: Yes. Many global companies standardise compliance across regions to reduce legal, operational, and regulatory complexity.

- Is this law comparable to the EU’s AI Act?

Ans: The philosophy overlaps, but New York places greater emphasis on real-world impact and accountability rather than model classification alone.

- Does New York’s law cover every organisation that utilises automated systems?

Ans: Not all systems are covered. The law applies to high-impact systems that significantly affect people’s lives, such as those used in health, legal, financial, or public services. These systems face the strictest standards.

- Would foreign firms be impacted too?

Ans: Yes. If a system affects New Yorkers or is used by a company doing business in New York, compliance is expected. Many global tech companies harmonise policies to meet these requirements.

- What happens if a firm does not comply?

Ans: Sanctions may include civil fines and mandatory corrective measures. The goal is accountability and learning, not punishment. Traditional models of accountability, where damage is addressed only after it occurs, are inadequate for systems affecting millions instantly. Reporting and oversight help prevent large-scale harm before it escalates.

- Is this part of a broader U.S. trend?

Ans: Yes. The U.S. is part of a global movement toward responsible tech governance. Europe, Asia, and other regions are establishing similar guidelines. New York’s law stands out by demonstrating leadership in enforcement.