Meta’s Reality Check: Are Today’s AI Systems Truly Smart?

At the AI Action Summit in Paris, amid lofty ideas and futuristic forecasts, one voice offered a sobering perspective. Yann LeCun, Meta’s Chief AI Scientist, urged the tech world to reconsider what we call “intelligent.” Despite their polished performances, current AI models, he argues, are far from truly smart.

They don’t think. They imitate.

Yann LeCun: AI mimics, not thinks (Image Source: OfficeChai)

A Straightforward Rebuke from a Leading Expert

LeCun didn’t hold back. He criticised large language models (LLMs), including ChatGPT and Meta’s own LLaMA, as fundamentally limited. These tools, he said, lack the essential qualities that define intelligence.

In a pointed remark, he said they’re “less intelligent than a cat.” It’s more than hyperbole; it’s a challenge to the prevailing AI narrative.

LeCun brings decades of experience, a Turing Award, and deep roots in machine learning, so his words are more than mere opinion: they’re a serious call to rethink the field’s direction.

LeCun says LLMs lack true intelligence (Image Source: Forbes)

What Intelligence Really Means

LeCun outlined four key capabilities that make up real intelligence:

- Comprehension of the physical world — understanding how objects behave and interact.

- Memory — the ability to store and retrieve meaningful experiences.

- Reasoning — drawing logical conclusions based on patterns and evidence.

- Hierarchical planning — the skill to break goals into steps and execute complex strategies.

By these criteria, LeCun argues, current models fall dramatically short.

Why AI Seems Smarter Than It Is

Why do so many believe these systems are intelligent? Because they sound intelligent.

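Language models are trained to predict what comes next in a sequence of text, one word (more precisely, one token) at a time, and they do it remarkably well. That fluency makes them seem articulate, even insightful. In LeCun’s view, though, it’s largely an illusion: behind the words there is no real understanding, just statistical guesswork.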

LeCun likens them to “stochastic parrots” — mimicking language patterns without grasping meaning.
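To make “predicting what comes next” concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus, then samples each next word in proportion to those counts. Real LLMs use neural networks over subword tokens and vastly more data, so this illustrates only the statistical idea, not how ChatGPT or LLaMA is actually built. The corpus and the function names (`train_bigrams`, `predict_next`, `generate`) are hypothetical.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str, rng: random.Random) -> str | None:
    """Sample a next word in proportion to how often it followed `word` in training."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # unseen word: the model has no statistics to draw on
    candidates = list(counts)
    weights = [counts[c] for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(follows: dict[str, Counter], start: str, length: int, seed: int = 0) -> list[str]:
    """Extend `start` by up to `length` words, one sampled prediction at a time."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1], rng)
        if nxt is None:
            break
        out.append(nxt)
    return out


# Toy corpus (hypothetical); "." is treated as an ordinary word.
corpus = "the cat sat on the mat . the cat chased the mouse . the dog sat on the rug ."
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 8)))
```

Every word the sketch emits is statistically plausible given the previous one, yet nothing in it represents cats, mats, or sitting. That gap between fluent output and any model of the world is the kind of thing LeCun’s critique targets, even though real LLMs are enormously more sophisticated than this toy.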

AI sounds smart but lacks true understanding (Image Source: Medium)

The Limits of Language-Only Learning

It’s easy to be dazzled by AI systems that write poems or answer questions. But ask them to navigate real-world challenges, and they fall apart.

They’ve been trained on vast amounts of text, but life isn’t just language. It’s motion, interaction, and physical feedback. Without real-world grounding, these models remain brittle and shallow.

Plugging the Gaps — or Just Patching?

To address these flaws, companies have tried enhancing models with extras like image processing and retrieval tools.

Take retrieval-augmented generation (RAG), for instance. It lets a model pull relevant passages from outside sources, such as documents or databases, and use them when composing its response. Helpful? Yes. Transformative? No.
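The retrieval step is easy to picture in code. The sketch below is a hypothetical, minimal pipeline: it scores a handful of documents by word overlap with the question, keeps the best match, and pastes it into the prompt that would be sent to a language model. Production systems typically rank documents with vector embeddings and then call a real model; here the documents, `retrieve`, and `build_prompt` are illustrative assumptions, and the model call itself is left out.

```python
from __future__ import annotations

import re

# Hypothetical knowledge base the model would not otherwise "see".
DOCUMENTS = [
    "The AI Action Summit took place in Paris.",
    "V-JEPA is an experimental Meta model that learns from video.",
    "Retrieval-augmented generation adds external text to a model's prompt.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9-]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str], k: int = 1) -> str:
    """Prepend retrieved context to the question; a real system would send this to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


print(build_prompt("Where did the AI Action Summit take place?", DOCUMENTS))
```

Notice what retrieval does and does not change: it alters which text the model is shown, not how the model reasons about that text. That is why LeCun treats it as a patch rather than a fix.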

The problem isn’t missing facts — it’s missing understanding. LeCun compares it to slapping spare parts onto a machine that was poorly designed from the start.

LeCun: Features don’t fix AI’s core flaws (Image Source: AgileBlue)

Meta’s New Vision: Models That Learn by Doing

LeCun’s team at Meta wants to flip the script entirely. Rather than pile on features, they’re pushing for models that learn from interacting with the world.

Meta wants AI to learn by interacting (Image Source: MDPI)

Their concept is “world-based” learning. These systems wouldn’t just read about life; they’d experience it. By engaging with environments, they could learn cause and effect, build internal models of how the world works, and simulate the outcomes of actions before taking them.

Not just talkers — thinkers.

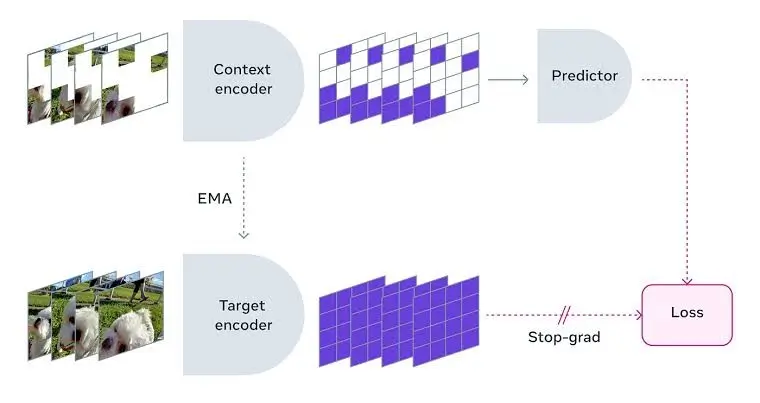

Introducing V-JEPA: Meta’s Step Toward Intelligent Vision

One example of this direction is V-JEPA (Video Joint Embedding Predictive Architecture), Meta’s experimental model that shifts the focus from pixels to meaning.

Instead of reconstructing every video frame in pixel-level detail, V-JEPA learns by predicting abstract representations of the parts of a video that have been masked out, much as humans focus on important details and ignore distractions.

It’s a significant shift from earlier approaches that tried to model raw data in exhaustive detail. V-JEPA shows what’s possible when models are designed to perceive, not just record.

V-JEPA: Meta’s move toward smarter vision (Image Source: Cubed)

Going Beyond Recall: Reasoning and Strategy Matter

To LeCun, true AI must go past memory and enter the realm of reasoning and long-term planning.

Children learn not from books but from trying, failing, and adjusting. Machines, he argues, need to do the same. Real intelligence comes from interaction, adaptation, and problem-solving, not mere repetition.

And current LLMs aren’t equipped for that.

Still Far from Human-Like Intelligence

Despite the hype, LeCun is clear: building AI that rivals human thought is still a long way off. A decade or more, by his estimate.

But the challenge isn’t discouraging; it’s inspiring. He believes the next phase of progress will come not from bigger models but from smarter frameworks.

It’s time to move from scale to substance.

LeCun: Human-level AI far off; need smarter models (Image Source: AI Wereld)

Why This Should Concern Us All

As AI is deployed in more critical fields — from medicine to transportation — overestimating its abilities can be dangerous.

If we trust machines to make decisions they don’t truly understand, we risk serious consequences. LeCun’s warnings aren’t abstract — they’re grounded in real-world implications.

An Uncomfortable Truth at a Starry Summit

At the Paris summit, LeCun’s critique stood out. While others envisioned a utopian future, he reminded the audience of the present’s limitations.

His honesty cut through the buzzwords, forcing a necessary conversation about what progress really looks like. We’re not there yet.