If you work in marketing, you can feel the ground shifting. One day, the person in a video commercial is a professional actor. The next, it is a flawless computer-generated face that never needs a shower, never ages, never asks for a raise, and can deliver the same line in 30 languages before lunch.

That convenience has become a little too convenient.

Marketing is changing fast: yesterday it’s a real actor in the ad, today it’s a flawless AI face that never ages, never negotiates, and can speak 30 languages in minutes. (Image Source: mThink)

New York is now pushing back with a new law aimed at a growing grey area in modern advertising: human-like performers who are not, in fact, human. The state’s new legislation requires clear disclosure when an advertisement features an AI-generated synthetic performer, and it is intended to reduce misinformation as AI-driven actors and avatar-led ads flood feeds across platforms.

This is not a niche policy footnote. It is a loud message to brands, agencies, and creators: if you use synthetic people to sell real products, you need to say so. And because the law covers advertisements shown to New York viewers even when the advertiser is based in another country, it lands on marketing desks around the world almost immediately.

What Is the Law, in Simple Terms?

New York’s synthetic performer law provides that when an advertisement includes a synthetic performer created with AI, the advertiser must make a conspicuous disclosure. The bill, S.8420-A / A.8887-B, adds this disclosure requirement and civil penalties to New York’s General Business Law.

When Does it Start?

The law takes effect on 9 June 2026. That date matters. It gives brands a short window to audit ad libraries, influencer deals, creative workflows, and production pipelines, particularly those quietly relying on synthetic talent.

What’s the Penalty?

Civil penalties start at US$1,000 for a first offense and rise to US$5,000 for subsequent violations. Those sums will not intimidate a global brand. However, fines accumulate, news spreads, and regulators rarely stop at a single swing.
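
To see how quickly the fines can add up, here is a minimal Python sketch of the arithmetic. It assumes each violation is counted separately and that every violation after the first attracts the higher amount; how regulators actually count violations is not stated in this article, and the function name is purely illustrative.

```python
# Penalty amounts as reported above (US dollars).
FIRST_VIOLATION_USD = 1_000
SUBSEQUENT_VIOLATION_USD = 5_000


def total_penalty_usd(violations: int) -> int:
    """Rough cumulative exposure for a number of violations.

    Assumption: one penalty per violation, with the first at the lower
    rate and all later ones at the higher rate.
    """
    if violations < 0:
        raise ValueError("violations must be zero or more")
    if violations == 0:
        return 0
    return FIRST_VIOLATION_USD + SUBSEQUENT_VIOLATION_USD * (violations - 1)


for count in (1, 2, 10):
    print(count, total_penalty_usd(count))
```

Under those assumptions, ten violations would come to US$46,000: still small for a global brand, but no longer a rounding error once a campaign has many variants.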

Why New York is Doing This Now

This legislation did not appear out of nowhere. It arrives precisely as synthetic performers stop being experimental and become routine. In the real world, that looks like:

- A skincare ad featuring a model who is not real.

- A property video tour narrated by a synthetic persona presented as a “local guide”.

- An influencer campaign built around a computer-generated avatar that never discloses it is artificial.

- A “street interview” style clip in which the interviewee is entirely made up.

Consumers have always known that advertisements are polished. What they do not expect is that the people in them are invented. New York’s move is a consumer protection play. It treats the use of a synthetic performer as a material fact: something the audience has a right to know before deciding whether to believe what they are watching.

The Definition That Changes Everything: Synthetic Performer

Definitions make or break a law. New York’s framework rests on the concept of a synthetic performer in advertising: a human-looking or human-sounding performance generated or modified with the help of AI.

This definition matters because it covers a large share of modern ad workflows:

- Fully generated video talent.

- Computer-generated faces superimposed onto bodies.

- Voice cloning used for narration that sounds like a real person.

- AI bots posing as real speakers.

To put it plainly: this is not only about deepfakes of famous people. It is also about the ordinary fake presenter who is convincing enough to pass as a real person. Legal analyses of the statute point out that AI definitions tend to be broad in practice, especially now that tools combine generation, enhancement, and replacement in a single workflow.

New York has passed a law requiring advertisements to disclose the use of AI-generated actors, while also banning deepfakes of deceased individuals without consent from their estates. pic.twitter.com/AECeqW46pL

— FearBuck (@FearedBuck) December 15, 2025

The Disclosure Has to Be Conspicuous. That’s Deliberate.

The law pushes advertisers away from the traditional fine-print loophole. A disclosure buried in:

- Tiny footer text.

- A fast-fading end card.

- Or a hashtag swamp containing less than 25 tags.

…will not cut it if regulators decide it is not conspicuous. The point is that the disclosure should be something viewers see without straining. If you have been trained to squeeze compliance into the least noticeable corner of the screen, that habit now starts to look risky.

Does It Apply Beyond New York?

This is where marketing teams sit up. The law applies to advertisements shown to New York audiences, including advertisements produced by out-of-state advertisers. That means an agency in Sydney could run a campaign for a global client and still trigger New York’s requirements if the ad is served into New York.

It can become your problem very quickly if you buy programmatic, run paid social, push YouTube pre-roll, sponsor creator content, or boost influencer posts that reach New York.

What Kinds of Ads Are Exempt?

New York includes carve-outs, so not everything containing synthetic content is treated as deceptive advertising. Reported exemptions are:

- Audio-only ads.

- Ads where AI is used solely to translate the language of a human performer.

- Certain promotional content for expressive works (such as films, TV, or video games) where the synthetic performer is used in the same way as in the expressive work itself.

Even with those exemptions, a vast universe remains in scope: the everyday commercial ad ecosystem in which synthetic people sell goods, services, rentals, and brands.

Why Influencers Need to Care (Even If They Never Touch AI)

Influencers do not have to create a fake actor to end up in the blast radius. All it takes is involvement in a campaign where:

- The brand supplies assets.

- The creator posts them.

- And the ad reaches New Yorkers.

An influencer may think, “I am simply publishing what the brand sent.” But regulators might think, “You are distributing an ad.” That tension is where the friction begins.

If your campaign involves an AI-generated spokesperson, a synthetic customer, or a digital brand ambassador and you post it without a disclosure, this law makes it more likely that someone will ask uncomfortable questions. Not because influencers love compliance, but because viewers enjoy calling out fake content, and the media amplifies the scandal.

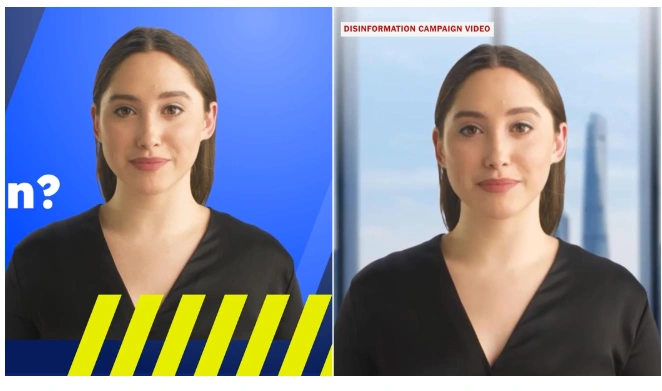

The Larger Context: Deepfake Marketing Becomes All Too Ordinary

A few years ago, synthetic media scandals were headline news. Now, they’re Tuesday. AI presenters are faster and cheaper for brands. Creators use voice cloning to localise content. Agencies test synthetic talent to avoid scheduling and licensing headaches.

Every quarter, the technology gets smoother, cheaper, and more realistic. And realism is the issue. Once a synthetic actor is indistinguishable from a real one, the viewer can no longer judge:

- Credibility.

- Authenticity.

- And persuasion tactics.

New York’s law says, in effect: if you are going to blur reality, you need to label the blur.

Deepfakes are becoming routine. As synthetic performers look more real, it’s harder to judge what’s authentic, so New York now pushes for clear labels. (Image Source: The New York Times)

Marketers Also Have a Second New York Law to Worry About

Alongside the synthetic performer disclosure law, New York is strengthening protection for the likenesses of deceased persons. The state is expanding its post-mortem right of publicity law (Civil Rights Law § 50-f) in a way that restricts commercial use of a deceased person’s name, image, or likeness, including digital replicas, and in most commercial applications requires consent from the relevant estate or heirs.

This one takes effect immediately, according to coverage of the signed legislation. So if your campaign plan involves:

- Bringing back a long-deceased celebrity for a nostalgic commercial.

- Narration in the voice of a deceased public figure.

- Or reviving a classic character as a brand character.

…that is now a legal exposure in New York.

New York tightens rules on using deceased people’s likenesses, even digital replicas, in ads. (Image Source: Consumer Finance)

Why This Has Become a Worldwide Advertising Story (Not Just a New York One)

New York is not just another state. It is a cultural and business center with disproportionate influence over:

- Media.

- Finance.

- Fashion.

- Entertainment.

- And consumer protection regulations.

Other jurisdictions pay attention when New York draws a line. Industry commentary has already positioned New York as a pioneer that could reshape how brands treat synthetic people in advertisements and how compliance teams standardise disclosure across campaigns.

For global brands, the practical answer is predictable: build one global rule rather than patching together market-by-market checks. That is how New York’s law becomes everyone’s new standard.

What This Means for Ad Creative in 2026: The Era of the Secret Synthetic Is Over

The most significant change is not legal. It’s creative. For years, the quiet approach to synthetic performers in advertising has been the norm:

- Make it look real.

- Don’t mention it.

- Avoid questions.

- Move fast.

That era is starting to look dated. As disclosure requirements grow, a brand has a decision to make: treat disclosure as a compliance cost, or use it as a trust advantage. The second option is the wiser one, because viewers are not only worried about being misled. They’re worried about:

- Consent.

- Exploitation.

- Manipulation.

- And identity misuse.

A clean disclosure does not destroy a campaign. A scandal does.

The New Competitive Advantage: Transparent Synthetic Marketing

Here is the twist marketers may not expect: done properly, disclosure can improve performance. People do not necessarily hate synthetic content. They hate being tricked.

A campaign that states, clearly and confidently, “This spokesperson is digitally created” can spark interest instead of backlash. It also gives you room to tell a stronger story:

- Why you worked with synthetic talent.

- How you did it responsibly.

- How you avoided exploiting real people’s likenesses.

- And how you spare consumers confusion.

That’s not legal compliance. That’s brand positioning.

What a Conspicuous Disclosure Might Look Like (Without Being a Buzzkill)

You do not have to plaster a warning sign across the video. But you do need something conspicuous. Common-sense approaches include:

- On-screen text near the synthetic performer whenever they appear.

- An explicit verbal disclosure in the video (especially for voice clones).

- Platform-native labels where available, supported by visible captions.

- End cards that stay on screen long enough to read (not a blink-and-you-miss-it frame).

It all comes down to intent: the disclosure must look designed to be noticed rather than designed to be hidden. (Practical note: this is not legal advice; if you are running major spend into the US, consult counsel to review your creative and placement strategy.)

Who in the Business Owns This Problem?

In most companies, synthetic content has crept in through convenience. A social media manager uses an avatar tool. A creative team tests a voice model. A performance marketer boosts the best-looking video and moves on. Compliance only hears about it when it goes viral for the wrong reasons.

This law changes that dynamic because it sets a clear-cut trigger: a synthetic performer in an ad means disclosure is required. Ownership therefore quickly spreads across:

- Marketing leadership.

- Creative and production.

- Influencer partnerships.

- Legal and compliance.

- Media buying.

- And brand reputation teams.

The Ripple Effects on Platforms (And Why Disclosure Tooling Will Get Better)

Platforms sit at the centre of this. They distribute the ads. They host the content. They profit from the impressions. A state rule that pushes disclosure puts pressure on platforms to:

- Build better “synthetic media” labels.

- Standardise ad library metadata.

- Enhance verification and detection.

- And reduce confusion about what creators need to disclose.

The more jurisdictions move in this direction, the more platforms tend to simplify and surface disclosure options, since platforms generally prefer product solutions to manual enforcement.

Platforms will likely respond with clearer synthetic-media labels and easier disclosure tools as more regions tighten rules. (Image Source: LinkedIn)

Where This Strikes the Most: High-Trust Industries

Some categories can adopt synthetic presenters without much risk. Others can’t. Industries whose product is trust (finance, health, education, political advertising, property, legal services) face closer scrutiny when the people in their ads are not real.

When the stakes are high, going synthetic is not merely a creative choice. It becomes a question of credibility. Brands in these industries are likely to adopt disclosure standards before regulation forces them to, simply because they cannot afford a collapse of trust.

Deepfake Marketing and Synthetic Performers: What Is the Difference?

In everyday conversation, “deepfake” is shorthand for all synthetic media. The marketing reality is more nuanced.

Deepfakes often imply:

- A real person’s likeness.

- Manipulated to say or do something.

Synthetic performers are:

- Fully invented people.

- Not necessarily tied to a real person.

New York’s emphasis on disclosure matters because deception can occur even without stealing an actual identity. Even a wholly invented spokesperson can be deceptive if people believe the character is real.

The SAG-AFTRA Angle: Actors Demand Protection

Creative labour groups have long voiced concerns that synthetic people can:

- Replace paid performers.

- Weaken bargaining power.

- And enable identity misuse.

Coverage of the legislation notes SAG-AFTRA’s push for protections as the use of synthetic performers in commercial content grows. This matters because it shows the debate is not only about consumer deception. It’s also about consent, compensation, and control over identity. Those pressures don’t fade. They grow.

What Marketers Should Do Now (A Checklist)

If you run advertisements that could be seen in New York, this is the do-it-now list.

- Audit your existing ad portfolio. Look for AI avatars, synthetic faces, AI-generated “customers”, voice clones, or fictional narrators presented as real speakers. Include paid influencer content too, particularly when your brand supplies scripts, voiceover, or video.

- Map where your ads will be distributed. If you target the US, it is safer to assume you may have exposure in New York unless you specifically exclude it (and even then, distribution can be messy).

- Build a disclosure style guide. Make it consistent: wording, placement, duration, font legibility, audio disclosure requirements (where applicable). Consistency reduces errors and speeds up approvals.

- Revise influencer briefs and contracts. Add clear requirements: when disclosure is mandatory, where it must appear, how to handle synthetic assets supplied by the brand, and who reviews before posting.

- Decide whether you want to be a synthetic-people brand. Are you using synthetic performers because they are cost-effective, or because they support a creative idea? Your answer will shape how you disclose without sounding defensive.

- Check rights and permissions, particularly for “digital replica” concepts. If your campaign involves deceased people or identifiable faces, New York’s strengthened consent framework can come into play very quickly.
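
For teams that keep their ad inventory in a spreadsheet or database, the audit and distribution steps can be roughed out as a triage script. The Python sketch below is hypothetical: the `AdAsset` fields and function names are invented for illustration, the exemption flags mirror the reported carve-outs described earlier, and whether a given disclosure actually counts as conspicuous is a judgment for counsel, not for a boolean.

```python
from dataclasses import dataclass


@dataclass
class AdAsset:
    """One row of a hypothetical ad inventory."""
    name: str
    uses_synthetic_performer: bool
    may_reach_new_york: bool
    has_conspicuous_disclosure: bool
    # Flags mirroring the reported exemptions (assumed, simplified).
    audio_only: bool = False
    ai_used_only_for_translation: bool = False
    expressive_work_promo: bool = False


def needs_disclosure_review(ad: AdAsset) -> bool:
    """True if the ad should go to a human reviewer before it runs."""
    if not ad.uses_synthetic_performer or not ad.may_reach_new_york:
        return False
    if ad.audio_only or ad.ai_used_only_for_translation or ad.expressive_work_promo:
        return False
    return not ad.has_conspicuous_disclosure


def flag_for_review(ads: list[AdAsset]) -> list[str]:
    """Names of the ads that need a closer look."""
    return [ad.name for ad in ads if needs_disclosure_review(ad)]


inventory = [
    AdAsset("skincare-avatar", True, True, False),
    AdAsset("podcast-spot", True, True, False, audio_only=True),
    AdAsset("labelled-avatar", True, True, True),
    AdAsset("human-actor", False, True, False),
]
print(flag_for_review(inventory))
```

A script like this only narrows down what a person needs to look at; it treats “may reach New York” as a simple flag, which in practice is the hard part to establish.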

The Human Reality: What Matters Is Not Whether It Is Fake, but Whether It Is Hidden

This is the point that gets lost in policy discussion. Viewers do not insist that every ad be pure reality. They want honesty about what they are looking at. A disclosure does not ruin the magic. It sets the terms.

It tells your audience: “We are using a synthetic performer, and we are not trying to fool you.”

In practice, that sentence can be the difference between “Great campaign, tech-savvy” and “This brand is lying to me.”

What This Means for Agencies and Creators in Australia

If you work in Australia, it is tempting to think of New York as far away. Digital advertising does not care about distance. If you have global content, you need global compliance. This is especially true for:

- Creators with an internationally skewed audience.

- E-commerce brands with shipping to the US.

- US-based SaaS companies.

- Agencies running programmatic campaigns for international clients.

- And entertainment marketers pushing content into US markets.

If your campaign could reach New York, the safest operational stance is to design disclosure once and apply it everywhere. It is cheaper than rolling the dice on where your ads land, and it protects you against the next round of similar bills.

Copycat Laws Are Coming (And Sooner Than You Think)

New York’s legislation arrives amid broader debates about state regulation of AI and is being reported as part of an emerging trend to regulate synthetic media and identity. Other jurisdictions may not adopt the same model, but the trend is clear: more disclosure, more consent, more accountability.

For SEO, this is not just a news hook. It is a long-tail keyword gold mine:

- “AI disclosure requirements in advertising”

- “Synthetic performer label rules”

- “Deepfake marketing compliance”

- “AI avatar ads disclosure”

Searches like these will only grow as law firms, creators, and brands race to work out what is changing in everyday practice.

Copycat laws are coming fast; expect more disclosure, consent, and accountability around synthetic media. (Image Source: Route Fifty)

The Next Frontier: Proof of Personhood in Advertising

Here is an idea that is already seeping into industry discussion and will become more common over the next few years: when non-humans can sell anything, consumers will start to demand proof that the person selling a product is real, or at least that they are labelled correctly if not.

That can lead to:

- Verified actor registries.

- Reward genius on the platform.

- Watermarking standards.

- And ad library metadata indicating synthetic performers.

New York’s legislation does not require any of that. But it nudges the market towards it. Once disclosure becomes the norm, the next question is verification: “Alright, you have disclosed that it is synthetic. Now prove to me that you did it responsibly.”

A Story Marketers Will Recognise: The Day Synthetic Is No Longer a Hack

Imagine a brand team in a hurry with a brutal deadline. The launch is tomorrow. The paid actor cancels. The product still needs a hero video. Someone says, “We can create a spokesperson within an hour.” And they’re right.

The video looks polished. The voice sounds natural. No one outside the team realises it is synthetic. Until the comments roll in.

“Is this person real?” “Why won’t they disclose it?” “This feels dodgy.”

New York’s statute is built for exactly this moment. It turns the last-minute hack into a compliance decision. And it pushes teams towards better behaviour: disclose early, disclose clearly, and keep moving.

The Bottom Line

New York’s synthetic performer disclosure law is not a hypothetical policy. It addresses a real, current practice in advertising: using human-like synthetic performers without telling the audience.

The law:

- Requires conspicuous disclosure in advertisements that feature synthetic performers.

- Takes effect on 9 June 2026.

- Applies to ads shown to New York audiences, even when the advertiser is based outside the state.

- And carries civil penalties of US$1,000, then US$5,000.

In tandem, New York is the first US state to also tighten rules on the commercial use of images and digital replicas of deceased persons, pushing consent and identity rights to the centre of 21st-century marketing.

For a brand, agency, or creator, the smart move is not to resist disclosure. It’s to own it. Because in 2026, trust is not a tagline. It’s a strategy.