Lead: At Qualcomm’s Snapdragon Summit (23-25 September 2025), CEO Cristiano Amon sketched a vision for personal computing that moves beyond the single-screen smartphone toward always-on personal agents that span devices. These agents live on watches, earbuds, PCs, and phones: learning about you, helping in the moment, and keeping more data on-device. It was less a chip launch than a new playbook for computing. (Qualcomm)

At the Sept 2025 Snapdragon Summit, Qualcomm CEO Cristiano Amon revealed plans for always-on personal AI agents across phones, watches, and earbuds (Image Source: Answer4u)

Why it matters now

The newest Snapdragon platforms, and the firm’s wider ecosystem push, deliver raw silicon advances, but their real breakthrough potential lies in more intelligent on-device work. Advances in Qualcomm’s neural processing stack and deeper system integration let devices run richer, personalized models without constant cloud round trips. The payoff: faster responses and a better privacy footing for everyday assistants. (The Verge)

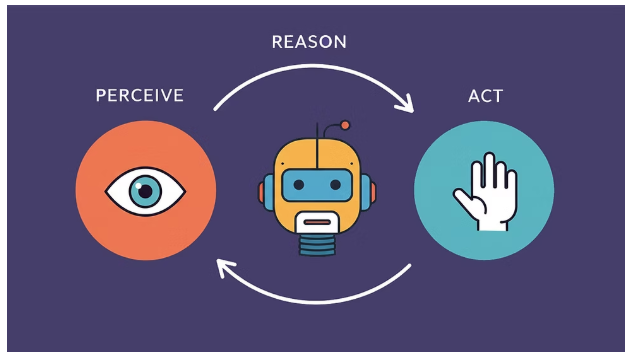

The pivot: phone-first to agent-first

The phone has been the single hub for productivity and communications for decades. Qualcomm’s Summit reorganizes that hub: the user, along with their agent, now takes center stage, and the interface adapts to whatever device you happen to be wearing or carrying. In practical terms, that means assistants that live across multiple devices and act proactively rather than reactively. (Constellation Research Inc.)



How the agents will appear — and what they’ll do

Expect agents to appear on a handful of device types:

- Watches and wearables. Quick choices, health reminders, and stealthy nudges that run locally.

- Headsets and earbuds. Real-time translation, quiet summary whispers, and noise-sensitive alerting.

- Glasses and AR hardware. Real-time context cues and guide prompts as you move through life.

- PCs and laptops. Bigger personal models for productivity, plus personal aids for local files and local tasks.

These agents behave like human assistants: they automate, summarize, and recommend, and they do so with much lower latency because much of the heavy lifting happens on-device. Qualcomm presented this hybrid edge-plus-cloud architecture as the likely future direction. (Qualcomm)

Qualcomm envisions AI agents on wearables, earbuds, glasses, and PCs — offering quick help, guidance, and productivity with low-latency edge-plus-cloud power (Image Source: MDPI)

Hardware that supports the agency

The headline features are faster CPUs and improved GPUs. The quieter revolution is in the neural processing stack. Qualcomm’s Hexagon NPU and media pipelines now handle more inference and more advanced sensor fusion, which lets small language models and bespoke classifiers run locally and continuously. That combination turns streams of sensor data into instant, contextual action without a round trip to a distant server. (The Verge)

Privacy and local-first promise

A recurring theme at the Summit was “privacy by design.” On-device execution keeps raw sensor data from leaving your device. On-device models reduce cloud dependency and make it possible for personal models to train locally, sending only distilled updates when needed. Partnerships between chipset makers and small-model vendors are already delivering private, personalized agents. (personal.ai)

Not everything is private by default

The hardware enables on-device processing, but some companies and OEMs will still ask to collect anonymised telemetry or sensor-based signals to train their models. Early reports from the Summit suggest that future Snapdragon platforms could expose additional device signals for model updates, which presents a straightforward trade-off: more advanced, adaptive agents, or tighter control over intrusive telemetry. Consumers and regulators will be watching closely. (TechRadar)

A new frontier for ecosystems

The next battle is not merely about raw GHz. It is about who builds the persistent agent layer: silicon companies, OS vendors, or the cloud giants. Qualcomm has positioned Snapdragon as the substrate of the agent economy. Apple enjoys tight hardware-software integration; Google will try to provide agent features across Android and PC form factors; Intel and others will respond in PCs and enterprise markets. Developers will place their bets based on the reach, maturity, and credibility of each toolchain. (The Verge)


Deep dive — streamlined

Today’s agents combine five elements: sensors, tiny local models, a short-term context store, action routines, and selective cloud sync. That hybrid split keeps everyday tasks quick and personal; the cloud steps in only when a task needs heavier compute. Qualcomm’s Summit highlighted the hybrid approach and the optimizations that support it, particularly for the Hexagon NPU and device runtimes. (Qualcomm)
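To make the five elements concrete, here is a minimal sketch of how they might fit together in one event loop. Everything in it is hypothetical and for illustration only: the `Agent` class, the `keyword_model` stand-in for a local model, and the intent names are assumptions, not anything Qualcomm has described.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class Agent:
    """Toy agent wiring together the five elements (all names hypothetical)."""
    classify: Callable[[str], str]               # tiny local model
    actions: Dict[str, Callable[[str], str]]     # action routines
    context: Deque[str] = field(default_factory=lambda: deque(maxlen=5))  # short-term store
    sync_queue: List[str] = field(default_factory=list)                   # selective cloud sync

    def on_sensor_event(self, reading: str) -> str:
        """Handle one sensor reading entirely on-device when possible."""
        self.context.append(reading)             # keep recent context locally
        intent = self.classify(reading)          # local inference
        if intent not in self.actions:
            # No local routine: queue only the intent label, not the raw reading.
            self.sync_queue.append(intent)
            return "deferred"
        return self.actions[intent](reading)


def keyword_model(reading: str) -> str:
    """Stand-in for a small on-device classifier."""
    if "meeting" in reading:
        return "remind"
    if "summarize" in reading:
        return "cloud_summary"
    return "ignore"


agent = Agent(
    classify=keyword_model,
    actions={
        "remind": lambda r: f"reminder set: {r}",
        "ignore": lambda r: "no action",
    },
)
```

In this sketch the raw reading never enters the sync queue; only the intent label does, which is one simple way to read "selective cloud sync".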

Small models, big payoffs

You don’t need enormous models to create useful assistants. Small language models (SLMs) and domain classifiers handle most routine functions: summarizing messages, reading receipts, and handling calendar interactions. Models of this type fit within the memory and compute budgets of modern NPUs, run in repeated loops at moderate energy cost, and support privacy-first design. SLM deployment partnerships on Snapdragon devices already exist. (personal.ai)
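A quick back-of-the-envelope calculation shows why model size and quantisation matter on-device. The sketch below estimates weight storage only (parameter count times bits per weight); the 1-billion-parameter figure is an assumed example, not a model named at the Summit, and real memory use also includes activations and runtime overhead.

```python
def model_size_mb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


PARAMS = 1_000_000_000  # hypothetical 1B-parameter SLM

# Weight storage at common precisions, from full float32 down to 4-bit.
sizes = {bits: model_size_mb(PARAMS, bits) for bits in (32, 16, 8, 4)}

for bits, mb in sizes.items():
    print(f"{bits:>2}-bit weights: {mb:,.0f} MB")
```

Under these assumptions, moving from 32-bit to 4-bit weights cuts storage from about 4,000 MB to about 500 MB, which is the difference between a model that crowds a phone's memory and one that can stay resident.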

A day in the life — short vignettes

Maya, a journalist. Her watch quietly reminds her of a quote; her earbuds capture, transcribe, and condense an interview on site for her notebook; her laptop drafts the opening paragraph of an article from local storage. Nothing leaves the device without permission.

Tunde, a shop owner. His laptop agent parses invoice details from receipts taken on the phone, prints bills, and flags suspicious bank transactions while holding customer data on business machines until he approves a sync.

Both vignettes show simple, real-life ways in which agents are being brought into daily workflows.


Developer basics — four quick guidelines

- Profile for energy and memory, not merely latency.

- Make use of quantised models and vendor SDKs for NPUs.

- Offer users simple, reversible control over data sharing.

- Authenticate and sign model updates via cryptographic attestations.
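The last guideline can be sketched with nothing but the standard library. This is a simplified stand-in under loud assumptions: it uses a shared-key HMAC, whereas a real deployment would more likely use asymmetric signatures checked against a hardware root of trust. The function names `sign_model` and `verify_and_install` are hypothetical.

```python
import hashlib
import hmac
from typing import List


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Produce an HMAC-SHA256 tag over the model file (stand-in for a real signature)."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_and_install(model_bytes: bytes, signature: str, key: bytes,
                       install_log: List[str]) -> bool:
    """Install the model only if its tag verifies; record the model hash locally."""
    expected = sign_model(model_bytes, key)
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, signature):
        return False
    install_log.append(hashlib.sha256(model_bytes).hexdigest())
    return True
```

A tampered file fails verification and never reaches the install log, which is the property the guideline is after.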

Ethics, bias, and accessibility

Agents reflect the data they are given. Designers must build in cautious behavior for high-risk domains, provide explainability for suggestions, and let users correct mistakes quickly. For accessibility, real-time audio descriptions, summarization, and voice-first controls can be transformative, provided models are trained on representative, diverse data.

Business models re-imagined

Distributed agents redistribute revenue streams. Expect premium privacy tiers, vertical business agents for law and healthcare, edge compute services, and device-plus-service bundles. These models favor vendors who pair stable hardware with substantial, user-controlled services.

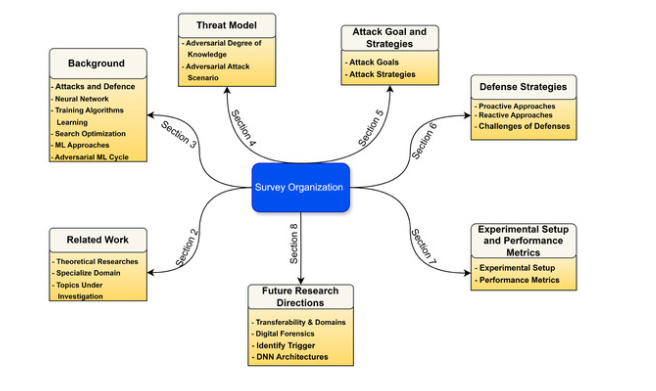

Security: new vectors, new defences

Local models reduce some risks but introduce others. Attackers may attempt model theft, poison local updates, or craft adversarial inputs. Signed updates, hardware-backed enclaves, and secure attestations are more critical than ever, particularly where agents handle credentials or signing duties.

Local AI boosts security but brings new risks like model theft and poisoned updates. Qualcomm points to signed updates, secure enclaves, and attestations as key defences (Image Source: MDPI)

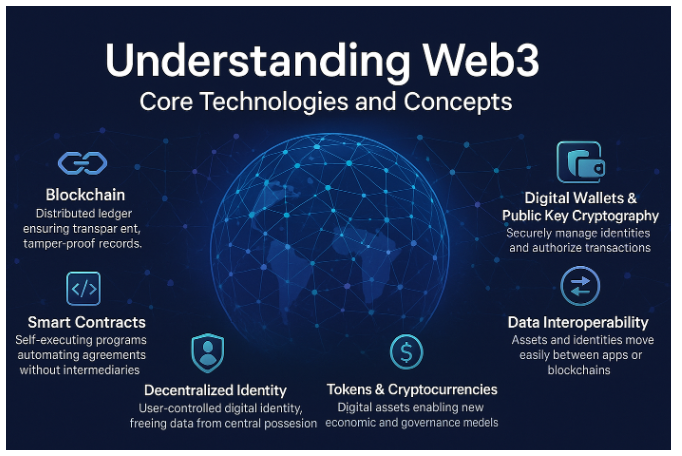

What this means for crypto and Web3

For crypto users, local agentic compute simplifies private signing flows. A watch or secure enclave may check transactions with minimal exposure, and agents may scan wallets locally to surface anomalies. Secure wallet-agent integration needs standards, transparent consent, and audited interfaces so that agents never substitute for explicit user consent.

Local AI agents can secure crypto transactions and wallets — but only with strict standards, consent, and audits. (Image Source: Digital Currency Traders)

Regulation and legal risk

Local-first designs simplify some compliance, but ad-hoc cloud-based training and cross-device sync create jurisdictional confusion. Regulators will demand audit logs, user deletion rights over personal models, and telemetry transparency. Organizations must map data flows, standardize consent, and expect audits.

Five bullish predictions

- Flagship phones and premium wearables will ship agent capabilities within 12 months.

- On-device SLMs will become the workhorse for everyday tasks. (personal.ai)

- Privacy-first subscriptions and edge services will arrive.

- Industry standards for secure model updates will become a reality.

- Early monetisation will be driven by vertical, enterprise agents (legal, medical, finance).

What’s next to watch

To track this shift, watch three things: which products ship with on-device agent capability; whether OEMs enable opt-in telemetry for model improvement; and how quickly platform partners deliver developer tools for on-device models. Early product releases and developer adoption will determine whether the vision becomes a day-to-day reality. (The Verge)

Frequently Asked Questions

What is a personal agent?

A persistent, personalized assistant that runs across your devices, learns your habits, and can act proactively on your behalf.

Will agents replace smartphones?

No. Smartphones remain at the center of most things. Agents change how devices work together, and the phone becomes one of many interfaces.

Is local processing really private?

Local processing reduces exposure, but vendors may request anonymised telemetry. Check vendor policies and settings.

Will agents drain the battery faster?

Agents use power, but new NPUs and energy-optimised runtimes help balance compute against battery life, and most agent operations rely on sparse, event-driven models.

How do agents affect crypto security?

Agents can improve UX using hardware enclaves for signing, but wallet integrations require explicit consent and safe interfaces.

The business question: who and how?

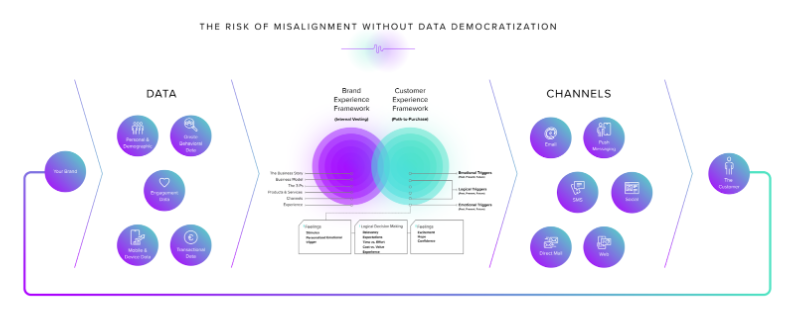

The business conflict, boiled down, is simple. Agents need constant investment (models, data, cloud sync, UX), but users expect privacy, low friction, and value. That tension gives rise to several likely revenue streams.

First, device+service bundles. Flagship devices (phones, headphones, watches) will all include a baseline local agent. Vendors will offer a paid tier for premium agent features: cross-device knowledge syncing, more advanced personalized skills, desktop integrations, and vertical templates for law, health, or finance. Qualcomm and its OEM partners already feature the agent story prominently for flagship processors and next-generation handsets, which suggests OEMs will bundle hardware and services.

Second, vertical business agents. Law firms, clinics, and financial planners need specialist agents that understand domain regulations and compliance. Such firms will fund private, audited agent deployments that run primarily on client hardware but lean on secure cloud tooling for the heavy lifting. Expect tailor-made licensing for regulated markets, and quicker monetisation here than for generic consumer subscriptions. Forbes’ coverage of Snapdragon’s PC and compute plans suggests that chip companies will sell the stack to enterprise buyers. (Forbes)

AI agents will come bundled on devices, with paid upgrades for advanced, cross-device, and specialised features. (Image Source: RocketSource)

Third, edge compute services. Few businesses want to wrestle with NPUs or model signing. Platform and cloud vendors will offer “edge agent orchestration”: hosted solutions that distribute signed updates, run big training bursts in the cloud, and provide telemetry dashboards to vetted partners. That creates recurring revenue while respecting end-user privacy by default (if implemented well). Mobile World Live and other industry reporting suggest OEMs and partners are already developing this hybrid model. (Mobile World Live)

And finally, data cooperatives and opt-in model enhancement. Vendors can ask users to contribute distilled model updates or encrypted gradients in exchange for features or savings. That is hard to do, but economically worthwhile if the vendor is trusted and the value proposition is believable.

Developers — a working playbook

Shipping high-value agents takes more than “putting a little model on a chip.” It requires architecture, UX design, and operational discipline.

- Design for power, not just speed. Battery life matters on wearables. Measure battery drain and jank, not only latency. Quantise aggressively; use sparse attention or retrieval-augmented pipelines where the task allows. Qualcomm’s Summit emphasized NPU advantages that allow frequent, local inference, but efficient models still matter. (The Verge)

- Split computation with privacy and cost in mind. Keep short-term context and user data on-device. Move heavy retrieval or retraining to the cloud. That hybrid split enables good UX with minimal data exfiltration.

- Take advantage of vendor SDKs and hardware features. NPUs like Hexagon are far more efficient when developers take advantage of vendor toolchains and quantisation pipelines. Qualcomm and its ecosystem partners are making available on-device model SDKs and runtimes; adopt them early for performance improvements. (RCR Wireless News)

- Consent must be reversible and fine-grained. Users must be able to control the knowledge graph, delete memories, and audit the agent’s actions. Provide easy flows for “forget this day” and “suspend personal learning”; they become levers of trust.

- Signed updates and attestation. Agents receive model updates. Sign each model, verify signatures against the hardware root of trust, and log each update locally. That keeps supply-chain or poisoned-model attacks from propagating.

- Cross-device context API. Define a small, secure format for what context is exchanged between devices: conversation pieces, task state, and file references. Make the format small and human-readable so client and server code remains debuggable.

- Testing and human intervention. Agents will run autonomously; you want robust scenario tests (legal, financial, medical requests) and a manual override UI. Put humans in the loop for early deployments.
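The cross-device context point in the playbook above lends itself to a short sketch. The envelope below is one possible shape for a small, human-readable context format carrying conversation pieces, task state, and file references. The `ContextEnvelope` name and its fields are assumptions for illustration, and the sketch leaves out the encryption and signing that a secure format would need.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ContextEnvelope:
    """Hypothetical cross-device context payload (illustrative field names)."""
    version: int
    source_device: str
    task_state: str
    conversation: List[str] = field(default_factory=list)
    file_refs: List[str] = field(default_factory=list)  # references only, never file contents

    def to_json(self) -> str:
        """Serialize to sorted, indented JSON so payloads stay easy to diff and debug."""
        return json.dumps(asdict(self), sort_keys=True, indent=2)

    @staticmethod
    def from_json(payload: str) -> "ContextEnvelope":
        """Parse a payload, rejecting versions this client does not understand."""
        data = json.loads(payload)
        if data.get("version") != 1:
            raise ValueError("unsupported context version")
        return ContextEnvelope(**data)
```

Keeping an explicit `version` field is a small design choice that lets older devices refuse payloads they cannot interpret instead of guessing.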

These are not theoretical points. Industry reporting shows Qualcomm and its partners focusing heavily on on-device runtimes and developer toolchains to support these experiences. (Neowin)

Standards, portability, and risk of siloed agents

A helper available only on one vendor’s hardware is a siloed helper. That harms users and strangles developer ecosystems.

We need only a small number of shared standards:

- Model update signatures (interop for verification).

- Context portability (portable short-term stores and knowledge graph exports).

- Privacy signalling (a user-level manifest of the capabilities of local models).

Standards bodies, industry consortia, or big platform players will propose competing formats. Watch whether platform partners adopt open schemas or vendor-locked toolchains; that choice will shape developer economics for the next few years. Constellation and TechInsights analysis placed Qualcomm’s vision in a hybrid cloud-edge future, so standardisation battles look imminent. (Constellation Research Inc.)

Regulation — where law interferes with the vision

Privacy-first hardware is a boon, but the law still applies.

- Data subject rights and models. Regulators may treat a personal model trained on an individual’s data as a form of personal data. Users must then be able to access, port, and delete their models. Privacy regulators will most likely insist on portability and deletion requirements.

- Accountability for the agent’s actions. As agents act on financial, medical, or legal matters, regulators will ask who can be held accountable for errors: developer, device maker, or service company. Clear contracts and liability frameworks will be the way forward.

- Cross-border syncing and jurisdiction. As devices sync information across borders, organisations will have to track where model changes and condensed telemetry travel. This creates compliance difficulties for vendors that operate globally.

- Auditable telemetry. Regulators will require secure, verifiable telemetry channels: metadata that attests a model update meets audit requirements without exposing raw user data.

Industry reporting and analysis from RCR Wireless News already point to regulators’ focus on edge data and to the hybrid cloud approach Qualcomm announced at the Summit. (RCR Wireless News)


Security — new attack surfaces, new defenses

On-device agents reduce exposure to the cloud, but create new threats.

- Model theft and IP extraction. Proprietary models may be stolen by attackers with local access. Hardware enclaves and encrypted model stores are the relevant defenses.

- Poisoned updates. Signed updates and zero-trust pipelines counter this.

- Adversarial inputs. Real-world sensors (audio, camera) open the door to adversarial inputs; robust pre-processing and sanity checks reduce error rates.

- Credential abuse. Agents that authorize transactions (crypto, bank transfer) should require a second factor or user consent for high-risk actions.

Qualcomm and its partners emphasize secure enclaves and attestation as the hardware story; developers will need to build on those capabilities. (Qualcomm)

Crypto and Web3: a high-value use case

Users of crypto will love agentic devices for one simple reason: better UX for secure signing.

Imagine a hardware-backed agent on your wrist that detects an authentic transaction request from your wallet app, verifies the origin of the dApp, presents a human-readable summary, and keeps keys in an isolated secure enclave. That process reduces phishing risk and makes signing friendlier for mainstream users.

But beware: any agent used to automate signing is a systemic risk if its consent model is badly designed. Standards can help: clear permissions per transaction type, signed attestations for transaction contexts, and user-visible audit logs recording what an agent has signed.
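A per-transaction-type permission check with an audit trail can be sketched in a few lines. The `SigningPolicy` class, its thresholds, and the transaction type names are hypothetical; the sketch shows the decision logic only and does no actual cryptographic signing.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class SigningPolicy:
    """Hypothetical consent policy for an agent that can request signatures."""
    auto_approved_types: Set[str]       # transaction types the user pre-approved
    max_auto_amount: float              # ceiling for unattended approval
    audit_log: List[Dict[str, object]] = field(default_factory=list)

    def decide(self, tx_type: str, amount: float, origin_verified: bool) -> str:
        """Return 'sign', 'ask_user', or 'reject', and record the decision."""
        if not origin_verified:
            decision = "reject"         # unverified origin is never signed
        elif tx_type in self.auto_approved_types and amount <= self.max_auto_amount:
            decision = "sign"
        else:
            decision = "ask_user"       # anything unusual goes back to the human
        self.audit_log.append(
            {"type": tx_type, "amount": amount, "decision": decision}
        )
        return decision
```

Every decision lands in the audit log, including rejections, so the user can later review what the agent was asked to sign as well as what it did sign.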

Industry commentary suggests Snapdragon’s emphasis on edge may enable these flows, but secure wallet-agent design remains a product and standards problem. (CGMagazine)

UX and human layer — what the actual user interface will be

Agents must be useful but not creepy. That requires three UX imperatives:

- Predictable personality. Agents must operate out of a well-defined persona — useful, risk-averse on high-risk actions, and transparent about uncertainty.

- Explainability in the moment. When an agent recommends or takes an action, it should show a short rationale and allow drilling down. “I took this from your meeting notes” is preferable to mystery.

- Simple governance. Controls for pausing learning, erasing memory, and exporting data must be visible and accessible. These controls become the currency of trust.
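The governance controls in the last point map onto a very small interface. The sketch below is an assumed, in-memory illustration: the `MemoryStore` name and its methods are hypothetical, and a real agent would persist memories in encrypted storage.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List


@dataclass
class MemoryStore:
    """Hypothetical agent memory with user-facing governance controls."""
    learning_paused: bool = False
    memories: Dict[date, List[str]] = field(default_factory=dict)

    def remember(self, day: date, note: str) -> bool:
        """Store a note unless the user has paused learning."""
        if self.learning_paused:
            return False
        self.memories.setdefault(day, []).append(note)
        return True

    def forget_day(self, day: date) -> int:
        """Erase everything learned on one day; return how many notes were removed."""
        return len(self.memories.pop(day, []))

    def export(self) -> Dict[str, List[str]]:
        """Return a plain, serializable copy of all memories for the user."""
        return {d.isoformat(): list(notes) for d, notes in self.memories.items()}
```

Returning a count from `forget_day` gives the interface something concrete to show the user, which supports the explainability point above.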

Good UX reduces churn and increases opt-in to value-added services. Qualcomm described agent coordination between devices; design will decide whether people experience that as magic or as intrusion. (YouTube)

AI agents must be clear, transparent, and easy to control; trust in UX will decide adoption. (Image Source: UX Tigers)

Adoption timeline — realistic pace

We overestimate near-term change and underestimate long-term transition. A realistic pace is as follows:

- 0–12 months: Flagship wearables and premium phones push on-device agent capabilities forward; the first wave of devices comes from Xiaomi, OnePlus, iQOO, and other partners that debuted at the Summit. Premium service offerings and first consumer trials begin. (The Verge)

- 12–24 months: Developer tooling and SDKs mature; more apps ship local agents or agent companions. Companies prove out vertical agents for managed use cases.

- 24–48 months: Model standards begin to form, cross-device portability rises, and enterprise and subscription business models come together. Regulators issue more specific guidance on model portability and auditability.

That is also how previous platform shifts unfolded: hardware availability spurs developer activity, which drives consumer usage and, in turn, regulatory scrutiny.

Five serious risks that could slow everything down

- Privacy scandals. Vendors whose agents embed intrusive telemetry will see trust dry up quickly.

- Battery-draining experiences. Poor battery performance on wearables will stall adoption.

- Proprietary lock-in. Agents siloed in a single vendor’s stack suppress third-party innovation.

- Surprise regulations. Unexpected new limitations on model storage or cross-border syncing may slow rollouts.

- Security breaches. One supply-chain poisoning or bulk key theft would undo years of progress.

It is better to address these threats up front than to focus only on iterating on performance.

Easy recommendations for every stakeholder

- For OEMs: Ship privacy-respecting defaults with opt-in data sharing, and give users straightforward, reversible controls. Partner with established enterprise players for vertical agents.

- For developers: Optimize for edge runtimes and energy; build transparent consent flows; adopt signed update pipelines.

- For regulators: Favor auditable metadata and user control over model deletion and portability rather than blunt bans.

- For consumers: Demand transparent controls; buy devices that tell you what their agent learns and let you erase memories.


Detailed FAQ (practical, targeted)

Q: Will these agents communicate with one another across vendors?

A: Not in the near future. Interoperability requires common schemas and signed context formats. Look for early vendor-specific experiences followed by phased migrations to standard formats.

Q: Is it possible to erase my device’s personal model?

A: Vendors are expected to implement deletion and reset controls. Regulators are likely to push for user rights over models that contain personal data.

Q: Are these agents always connected?

A: No. Local models are what make offline, low-latency capability possible. Agents will use the cloud selectively for compute-intensive tasks.

Q: Will wearables handle agent tasks, or only phones?

A: Wearables are well-suited for short, contextual activities — reminders, confirmations, audio recaps. Developers will need to design for short attention and limited power.

Q: How will malicious agents be regulated?

A: Expect a combination of attestation, signed updates, and platform policies. If a device accepts unsigned model updates, platforms should remove it.

Q: When should I anticipate seeing agent capabilities on my next phone?

A: Some showcase devices with the new Snapdragon 8 Elite Gen 5 are launching now and will be available in the next product cycle. Early adopters will receive features later this year and next.

A final, hard-headed consideration

The demonstrations at the Snapdragon Summit make an important point: hardware dictates what private, personalized assistants can do day to day. That requires a shift from “cloud by default” to “hybrid by design.” The payoff? Snappier, more personalized assistants, but only if industry, regulators, and developers get consent, standards, and security right.

The next couple of product cycles will determine whether agents become a quiet optional feature of devices or the beginning of a new era of computing. Either outcome would be historic; this time, the hardware appears to be on our side.