Google DeepMind is giving robots an extra layer of thought. Two new systems, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, let robots plan multi-step tasks, decide what to do, and then carry out motor actions guided by visual perception. With them, a robot can sort laundry by color, pack a suitcase to suit the local weather, or sort recyclables according to local regulations: tasks that demand planning, judgment, and dexterity. (Google DeepMind)

DeepMind’s Gemini Robotics lets robots plan, decide, and act on complex tasks. (Image Source: The Indian Express)

Why It’s Important Now

Robots have long handled simple commands: pick up that cup, press that button. The new models let a machine pause, work out a plan, search the web for missing rules or data, and then act. That shift, from simple executor to short-horizon planner that draws on both vision and language, is why this moment matters. DeepMind positions Gemini Robotics-ER as an “embodied reasoning” model that uses tools and search to inform action, while Gemini Robotics 1.5 turns the plan into actual motor actions. (Google Developers Blog)

Household tasks make a convenient demonstration: folding a shirt or sorting socks is not trivial. Each combines vision, judgement, and movement. The new stack shows that combining them is becoming feasible.

How The Two Models Work Together: An Analogy

Imagine Gemini Robotics-ER as the planner and the phonebook: it looks at the goal, breaks it down into steps, and consults outside sources when it needs local knowledge. Gemini Robotics 1.5 is the eyes and hands: it reads the scene, carries out the plan, and translates each step into precise motor movements.

That separation matters. It lets one reasoning system produce a plan that a different robot, with other arms or hands, can carry out, so skills transfer across hardware platforms. DeepMind demonstrates learning that carries over between several different robots, which could shorten the time it takes to roll out useful skills. (Google DeepMind)

We’re making robots more capable than ever in the physical world.

Gemini Robotics 1.5 is a levelled up agentic system that can reason better, plan ahead, use digital tools such as @Google Search, interact with humans and much more. Here’s how it works

— Google DeepMind (@GoogleDeepMind) September 25, 2025

In The Lab: Laundry, Recycling, And Packing

DeepMind picks everyday examples for a reason. They look mundane on the surface, but they contain real problems:

- Sorting laundry by color sounds simple. But it involves consistent visual sorting under changing light, planning ahead to group items, and deft movements to pick up and pile clothing without tangling it. Gemini Robotics 1.5 plans and executes those small, repeated movements while the embodied reasoning model oversees the task-level plan. (Financial Times)

- Packing a bag for local conditions requires knowledge the robot does not have: what weather is Melbourne expecting this weekend? The embodied reasoning model can consult relevant sources, factor that information into the packing plan, then send instructions to the action model. That web-aware behaviour already shows up in demos and documentation. (The Verge)

- Sorting recyclables forces a system to deal with regional differences: what is recyclable under one council may not be under another. A machine that can look up local rules before acting makes fewer errors and chooses the right bin. That capability turns the robot from a single, narrowly scoped tool into a context-sensitive assistant. (The Verge)

The Technical Idea In Plain Terms

- See and report: The robot looks at the scene; the VLA (vision-language-action) model produces a structured description of what it sees.

- Make a plan: The embodied reasoning model converts the goal into a sequence of steps, with likely failure modes and fallback options, and looks up further facts if needed.

- Execute to the letter: The execution model converts each step of the plan into motor commands matched to the robot’s joints, grippers, and sensors.

- Learn across bodies: Because the reasoning is abstract, one plan can direct many types of robot, while the execution model is tuned to each body. (Google DeepMind)
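The four steps above can be sketched as a toy pipeline. This is a minimal illustration under stated assumptions, not DeepMind's interface: every name here (`Step`, `perceive`, `make_plan`, `gripper_arm`, `two_handed`) is hypothetical, perception is faked with a list of object labels, and the "motor commands" are plain strings. What it shows is the separation the article describes: one body-agnostic plan, translated differently by each robot's own adapter.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch: none of these names come from a DeepMind API.
# Strings stand in for real motor commands.

@dataclass(frozen=True)
class Step:
    verb: str    # body-agnostic action, e.g. "pick" or "place"
    target: str  # object or location the action applies to

def perceive(frame: list[str]) -> list[str]:
    """See and report: the 'frame' is faked as a list of detected object labels."""
    return sorted(set(frame))

def make_plan(objects: list[str]) -> list[Step]:
    """Make a plan: a toy laundry-sorting planner that emits body-agnostic steps."""
    steps: list[Step] = []
    for obj in objects:
        bin_name = "dark_bin" if obj.startswith("dark") else "light_bin"
        steps += [Step("pick", obj), Step("place", bin_name)]
    return steps

# Execute: each robot body supplies its own translation of an abstract step.
Adapter = Callable[[Step], str]

def gripper_arm(step: Step) -> str:
    return f"arm.{step.verb}({step.target})"

def two_handed(step: Step) -> str:
    return f"hands.{step.verb}_bimanual({step.target})"

def execute(steps: list[Step], body: Adapter) -> list[str]:
    return [body(s) for s in steps]

# Learn across bodies: one plan, two different (hypothetical) robots.
laundry_plan = make_plan(perceive(["white_shirt", "dark_sock", "white_shirt"]))
print(execute(laundry_plan, gripper_arm))
print(execute(laundry_plan, two_handed))
```

Swapping `gripper_arm` for `two_handed` changes only the final translation; the plan itself is untouched, which is the point of keeping reasoning and execution separate.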

That pipeline is what people mean when they say these robots “think, then act”: it is planned reasoning, not pure reaction.

Realistic Strengths And Reasonable Caveats

Strengths:

- Context sensitivity: The embodied reasoning layer can look up task-specific facts, which matters for real-world tasks governed by rules. (Google Developers Blog)

- Skill transfer: Separating planning from execution lets robots with diverse hardware share learned behaviour. (Google DeepMind)

- Rich perception: The execution model combines vision with language conditioning, helping robots cope with messy, real-world scenes. (Google DeepMind)

Caveats:

- Dexterity limitations: Fine motor manipulation (folding clothes, unclasping a bra strap, threading elastic) still challenges actuators and control code. The reasoning layer can plan, but the hands still need to be capable.

- Safety and edge cases: If robots consult external information, they may encounter contradictory or spurious rules. Designers must build in conservative fallback paths.

- Cost and rollout: New sensors and models add cost. For now, likely adopters are labs and well-funded robotics firms, not strip-mall laundromats.

- Generalisation vs robustness: Demos work well, but real homes are messy. The gap between the demo table and a rowdy family home is huge.

DeepMind’s robots excel in context and skill sharing but face hurdles in dexterity, safety, cost, and real use. (Image Source: Dr Rajiv Desai)

Why Companies And Crypto Folks Ought To Care (Yes, Including Crypto)

If you work in crypto, you might ask: what does laundry have to do with tokens and ledgers? More than you might think.

- Edge compute + decentralised services: Robots that search the web, retrieve policies, or query paid knowledge sources may depend on distributed or cloud services. That calls for verifiable, secure data feeds, which is where oracles and decentralised compute may enter the picture.

- Data markets and skill marketplaces: Think of tokenised marketplaces where a skill a robot has learnt (e.g., “folding shirts v1”) is traded with other parties. Provenance and licensing are a natural application for blockchain primitives.

- Robots as assets: High-value robotic workflows could take the form of tokenised services: buy a week of robot cleaning from a provider who verifies uptime and performance on-chain.

- On-chain regulation and automation: When robots check rules that affect billing or safety, immutable logs and auditable trails matter, and that is terrain well travelled by crypto builders.
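To make the point about immutable logs and auditable trails concrete, here is a minimal tamper-evident log built as a hash chain, where each entry's hash covers the previous entry's hash. It is an illustrative sketch only: it is not a blockchain and not any vendor's logging API, and the record fields and names such as `unit-7` and `council_connector` are hypothetical. Periodically anchoring the latest hash on a public ledger would be one way to make the trail externally verifiable; that step is not shown.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def chain_hash(prev_hash: str, record: dict) -> str:
    """Hash the record together with the previous entry's hash."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, tamper-evident log: editing any past record breaks the chain."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict, str]] = []

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        digest = chain_hash(prev, record)
        self.entries.append((dict(record), digest))  # store a copy of the record
        return digest

    def verify(self) -> bool:
        """Recompute every hash in order; any mismatch means tampering."""
        prev = GENESIS
        for record, digest in self.entries:
            if chain_hash(prev, record) != digest:
                return False
            prev = digest
        return True

# Hypothetical records from a recycling robot
log = AuditLog()
log.append({"robot": "unit-7", "query": "local glass rules", "source": "council_connector"})
log.append({"robot": "unit-7", "action": "place", "bin": "glass"})
print(log.verify())                  # True
log.entries[1][0]["bin"] = "paper"   # tamper with a past record
print(log.verify())                  # False
```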

Whether you build DeFi infrastructure, oracle networks, or on-chain verification, these robotics stacks change which services people will want, and that creates new business models. The convergence is practical, not academic.

Early Industry Pushes And Access

DeepMind is rolling out these capabilities cautiously. The company has published and demonstrated the models; some capabilities are available to partners and through developer channels, with wider availability to follow further testing and safety review. The gradual access reflects the real risks of letting machines act in the world. (Google DeepMind)

Developer commentary and press accounts already describe hybrid deployment patterns: on-device processing for latency-sensitive tasks, the cloud for compute-heavy reasoning, and secure APIs so developers can experiment with new capabilities. This hybrid approach balances responsiveness, privacy, and capability.

Brief Human Anecdotes (Why A Thinking Robot Is Important)

Consider a working mom with two jobs. She comes home with a laundry basket and a head full of chores. A robot that can sort laundry and recommend which load to wash first is not science fiction; it is a small but real relief in daily life. Or picture a small recycling business that needs affordable automation to separate mixed waste: a context-aware robotic arm that adapts to local regulations could unlock higher throughput.

Those small, human-sized wins make the technology useful in a way that big demos (humanoid running, for example) never can.

A laundry-sorting bot or smart recycler shows real everyday value beyond flashy demos. (Image Source: CNET)

Deeper Technical Overview: Inside The Two-Part Structure

Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 split the work of making robots useful in the world. One system handles perception and motor control; the other handles spatial reasoning, planning, and tool use. Together, they form a short planning loop: perceive → plan → act → check. That loop is the real innovation: it lets a machine pause, query for context, carry out a sequence of movements, and then judge whether it succeeded or failed.
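The perceive → plan → act → check loop can be sketched as follows, assuming the plan has already been produced as a list of step names and that perception is folded into a hypothetical `check` callback that reports whether a step succeeded. The retry limit and the "escalate to human" behaviour are illustrative assumptions, not documented Gemini Robotics behaviour.

```python
from typing import Callable

def run_loop(plan: list[str], act: Callable[[str], None],
             check: Callable[[str], bool], max_attempts: int = 3) -> list[str]:
    """Act on each step, check the result, retry a bounded number of times, then stop."""
    trace: list[str] = []
    for step in plan:
        for attempt in range(1, max_attempts + 1):
            act(step)              # act
            if check(step):        # check: perceive the scene, judge success
                trace.append(f"{step}: ok after {attempt} attempt(s)")
                break
        else:                      # no attempt passed the check
            trace.append(f"{step}: escalate to human")
            break                  # halt instead of continuing a broken plan
    return trace

# Simulated world (hypothetical): the fold works on the second try,
# and zipping the bag never succeeds.
tries: dict[str, int] = {}

def act(step: str) -> None:
    tries[step] = tries.get(step, 0) + 1

def check(step: str) -> bool:
    if step == "zip bag":
        return False
    return step != "fold shirt" or tries[step] >= 2

trace = run_loop(["pick shirt", "fold shirt", "zip bag", "place bag"], act, check)
print(trace)
```

Halting on the first unrecoverable step, rather than skipping it, is the conservative choice: later steps (here, placing the bag) usually assume the earlier ones worked.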

The embodied-reasoning engine (ER) reasons about space, sequence, and contingencies. It takes a broad command, such as “pack for a weekend in Melbourne,” and turns it into an explicit plan: what to take, how many layers, where things go, and fallback actions in case something is missing. The key thing about ER is that it can invoke external tools, such as web search or a bespoke API, to retrieve facts that shape the plan. That ability to reach out for context is what turns simple scripts into useful assistants.
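A minimal sketch of that reach-out-for-context pattern, assuming the planner is handed a lookup tool as a plain function. `fake_weather` is a hypothetical stub with made-up values standing in for web search or a weather API, the temperature threshold is arbitrary, and none of this reflects how the Gemini API actually exposes tool use.

```python
from typing import Callable, Optional

Forecast = dict  # hypothetical shape: {"low_c": int, "rain": bool}

def fake_weather(city: str) -> Optional[Forecast]:
    """Hypothetical stub for a web-search or weather tool; values are made up."""
    return {"Melbourne": {"low_c": 9, "rain": False}}.get(city)

def plan_packing(city: str, lookup: Callable[[str], Optional[Forecast]]) -> list[str]:
    """Turn a broad goal into a packing list, using the tool's answer when available."""
    forecast = lookup(city)            # the "reach out for context" step
    if forecast is None:
        # Fallback when the tool has no answer: pack for mixed conditions.
        return ["t-shirts", "jumper", "rain jacket"]
    items = ["t-shirts"]
    if forecast["low_c"] < 12:         # arbitrary threshold for an extra layer
        items.append("jumper")
    if forecast["rain"]:
        items.append("rain jacket")
    return items

print(plan_packing("Melbourne", fake_weather))
print(plan_packing("Atlantis", fake_weather))
```

Passing the tool in as a parameter keeps the planner testable with stubs and makes it easy to restrict which tools a deployment may call.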

The vision-language-action (VLA) model, which handles scene perception and motion, inspects the scene, identifies objects, predicts grasps and trajectories, and translates plan steps into joint-level commands. Because it is trained on multiple robot bodies, it can transfer motion “skills” to another robot with less retraining. That motion-transfer idea cuts the time and data cost of deploying new hardware. (Google Cloud Storage)

Training combines demonstrations, simulated environments, and web-scale multimodal data. The team uses a blend of human demonstrations and synthetic trajectories to teach both reasoning and fine manipulation. In practice, that means a new task can become useful after a few dozen demonstrations plus in-context adaptation. The result still falls well short of human dexterity, but it is good enough to perform many household and industrial subtasks reliably. (Google Cloud Storage)

Gemini Robotics 1.5 from @GoogleDeepMind marks the official introduction of agentic capabilities to robots, allowing them to complete complex, multi-step tasks.

But… what does that mean?

Before, robots were able to accomplish a single task, like picking up fruit or zipping…

— Google AI (@GoogleAI) September 25, 2025

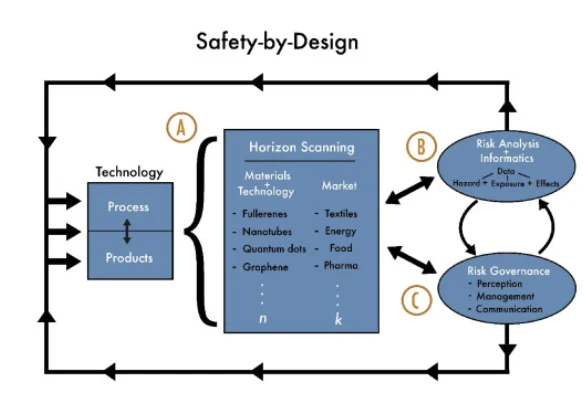

Safety Architecture And Governance Decisions

Designers treat these systems as tools that must fail safely. That principle shows up in three practical layers.

First, conservative action wrappers. Robots operate under strict safety limits: reduced approach speeds, limited force, and fallback stops. That lowers the risk of injury even when a plan fails.

Second, human-in-the-loop verification. For dangerous tasks (cutting, moving heavy loads, or working alongside people), the system flags the step and asks for human confirmation or remote monitoring.

Third, sandboxed web queries and tool use. When ER consults an external reference (e.g., local recycling rules), the system goes through vetted, auditable connectors. Teams monitor queries and responses to keep a traceable decision trail for compliance and debugging. DeepMind is releasing these models through phased previews and tested partner programs to get governance in place before widespread deployment. (Google Developers Blog)
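The three layers can be sketched as a thin guard around commands and tool calls. Everything here is an assumption for illustration: the speed and force limits are placeholder numbers rather than published values, the risky-verb list and connector allowlist are hypothetical, and `confirm` stands in for whatever human-confirmation channel a deployment actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Placeholder limits for illustration only (not published values).
MAX_SPEED_M_S = 0.25
MAX_FORCE_N = 20.0
RISKY_VERBS = {"cut", "lift_heavy", "handover"}      # layer 2: needs a human OK
ALLOWED_CONNECTORS = {"recycling_rules", "weather"}  # layer 3: vetted tools only

@dataclass(frozen=True)
class Command:
    verb: str
    speed_m_s: float
    force_n: float

def guard(cmd: Command, confirm: Callable[[Command], bool]) -> Optional[Command]:
    """Layers 1 and 2: block unconfirmed risky steps, clamp speed and force."""
    if cmd.verb in RISKY_VERBS and not confirm(cmd):
        return None  # stop: no human confirmation
    return Command(cmd.verb, min(cmd.speed_m_s, MAX_SPEED_M_S), min(cmd.force_n, MAX_FORCE_N))

audit_trail: list[tuple[str, str, str]] = []

def call_connector(name: str, query: str,
                   connectors: dict[str, Callable[[str], str]]) -> str:
    """Layer 3: only allowlisted connectors run, and every call is logged."""
    if name not in ALLOWED_CONNECTORS:
        audit_trail.append((name, query, "BLOCKED"))
        raise PermissionError(f"connector {name!r} is not allowlisted")
    answer = connectors[name](query)
    audit_trail.append((name, query, answer))
    return answer

# Usage with hypothetical stand-ins
safe = guard(Command("move", speed_m_s=1.0, force_n=50.0), confirm=lambda c: True)
print(safe)  # Command(verb='move', speed_m_s=0.25, force_n=20.0)
print(guard(Command("cut", 0.1, 5.0), confirm=lambda c: False))  # None
print(call_connector("recycling_rules", "glass?", {"recycling_rules": lambda q: "glass bin"}))
```

Clamping rather than rejecting over-limit commands keeps routine motion flowing, while risky verbs and unknown connectors fail closed.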

A final safety note: on-device versions exist for latency- or privacy-sensitive settings. When web calls are hazardous or unwanted, a slimmed-down on-device version can run the execution stack locally and fall back to human supervision when unsure. That hybrid approach balances capability with control. (Google DeepMind)

Robots use safety limits, human checks, and secure web queries, with phased rollouts and on-device options for control. (Image Source: ScienceDirect.com)

Economic And Workforce Implications: Practical, Near-term Ramifications

These technologies change who pays for automation and how services expand.

In warehouses and recycling plants, a single vision-guided arm that can adapt to varied scrap saves onboarding time and downtime. For small businesses, that lowers the cost of automation: you do not need an expensive custom-built robot for every operation.

For early adopters at home, the payoff is convenience: time-consuming drudgery such as sorting, cleaning, and simple food preparation becomes outsourced work. The result is not overnight mass unemployment but a shift in labor towards installation, monitoring, maintenance, tailoring, and higher-value human work. New professions arise: robot trainers, safety inspectors, localisation engineers, and subscription service operators. (Le Monde.fr)

For crypto developers, the shift is an opening. Decentralized ledgers and oracles can offer verifiable feeds to a robot that needs trusted local information. Tokenized marketplaces can keep provenance records for transferred “skills”. And on-chain contracts can settle payment for services tied to performance metrics, e.g., paying a robotic cleaning company after an on-chain proof of task fulfillment. The pairing is utilitarian: robots need trusted inputs, secure audits, and new marketplace mechanisms.

A No-nonsense Checklist For Businesses Running A Pilot

If you’re running a pilot, treat it like a small, high-risk product launch. Here’s a tight checklist.

- Define a limited scope. Choose a single, well-contained task: sorting a single waste type, folding a small group of clothes, or assembling a standard kit.

- Pick hardware early. Identify grippers, cameras, and safety cages. Match the model’s known supported platforms wherever possible.

- Establish safety KPIs. Include force limits, human-override latency, and no-injury targets.

- Prepare trusted data feeds. If the robot relies on local rules or weather, provide trusted APIs and an audit trail.

- Schedule human oversight. Pilot runs must include shifts for human monitors.

- Budget for integration. Much of the cost is likely to lie in mounting, cabling, edge computing, and maintenance.

- Measure ROI sensibly. Track uptime, error rates, rework, and handover time, not just throughput.

- Speak to users. Early adopters need clear expectations, opt-out options, and privacy guarantees.
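For the "measure ROI sensibly" item, here is a sketch of how pilot logs might be turned into the suggested metrics. The `Run` record, its fields, and the sample numbers are hypothetical; a real pilot would define these from its own logging.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Run:
    ok: bool           # task finished without an error
    reworked: bool     # a person had to redo part of the task
    handover_s: float  # seconds for a human to take over (0 if no handover)

def pilot_kpis(runs: list[Run], scheduled_h: float, downtime_h: float) -> dict[str, float]:
    """Summarise a pilot with uptime, error, rework and handover metrics."""
    handovers = [r.handover_s for r in runs if r.handover_s > 0]
    return {
        "uptime": (scheduled_h - downtime_h) / scheduled_h,
        "error_rate": sum(not r.ok for r in runs) / len(runs),
        "rework_rate": sum(r.reworked for r in runs) / len(runs),
        "mean_handover_s": mean(handovers) if handovers else 0.0,
    }

# Illustrative numbers only, not results from any real deployment.
runs = [Run(True, False, 0.0), Run(False, True, 12.0), Run(True, True, 8.0), Run(True, False, 0.0)]
print(pilot_kpis(runs, scheduled_h=40.0, downtime_h=2.0))
```

Throughput can sit alongside these figures, but on its own it hides rework and handovers, which is the checklist's point.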

Following this checklist reduces surprises and speeds up sensible adoption. Developer access is already available to approved partners through the platforms’ preview programmes and APIs. (Google Developers Blog)


In-Depth FAQ: Concise Answers For Busy Readers

Q: Where Can I Try These Systems Today?

A: DeepMind offers ER in preview through developer APIs in Google AI Studio; full deployment follows staged partner testing.

Q: Do Robots Learn By Watching People?

A: Yes, human demonstrations are part of the training data. The models also use simulation and in-context learning to pick up new tasks quickly.

Q: Will They Work In Messy Homes?

A: They work best in semi-structured spaces to start with. Homes with clear surfaces and objects kept in consistent places will see the fastest wins; very messy rooms need more engineering and supervision.

Q: How Expensive Is Deployment?

A: Hardware, safety infrastructure, and integration drive costs. Pilot budgets will probably cover hardware plus months of systems integration and monitoring, rather than plug-and-play prices.

Q: What About Privacy When Robots Search The Internet?

A: Teams use sandboxed, audited connectors. Organisations should restrict external access, log queries, and set data-retention policies before deployment.

Q: Will Blockchain Help?

A: It can. Oracles, verifiable logs, tokenised skill marketplaces, and outcome-based payments all align well with the services robots need. That doesn’t mean all deployments will need on-chain technology, but web3 builders should pay attention to the fit.

Q: Can The Robots Work Offline?

A: Execution can be on-device for privacy and latency; reasoning and web queries use external services as needed. (The Verge)

Q: Can Any Robot Use These Models?

A: The design separates planning from execution, so, in theory, the reasoning model can produce plans for different robot bodies; execution needs body-specific adaptation. (Google DeepMind)

Q: Are These Models Safe?

A: Safety is a core concern: teams build in contingency plans, human-in-the-loop verification, and risk-averse rules for risky activities. Real-world deployment still requires rigorous testing. (Google Developers Blog)

Q: Is Human Domestic Work Over?

A: No. In the near term, expect augmentation, new maintenance careers, and service professions rather than wholesale replacement.

Conclusion And Editorial Opinion: Cautious Optimism

Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 move robots from compliant tools towards context-sensitive assistants. The leap is significant because it changes what we can reasonably ask machines to do: not just isolated actions but multi-step tasks that require discretion.

We should welcome the capability while insisting on strong guardrails: phased rollouts, human oversight, audited toolchains, and open logs. The payoff is real: safer plants, better recycling, and small daily efficiencies that free people for better work.

If you’re a founder, operator, or builder, now is the time to experiment smartly. Start small. Measure intent and effect. Design for safety. The world where robots help with the small things will arrive not as a sudden transformation but as a sequence of careful, responsible pilots that prove value and manage risk. (Financial Times)