Artificial intelligence is no longer developing unchecked. In 2026, the conversation shifts. Power no longer belongs to whoever builds the fastest model or the biggest system. It belongs to whoever earns trust.

In boardrooms, parliaments, and world summits, a new reality is taking shape. AI innovation now proceeds alongside stronger safety regulations, ethical boundaries, and accountability measures. Governments demand clarity. Businesses require certainty. Citizens demand protection. This is what the next decade of AI will look like. For the first time, the world's most influential technology companies and policymakers are heading in the same direction. (weforum)

In 2026, AI leadership belongs to those who earn trust through safety, ethics and accountability. (Image Source: LinkedIn)

The Necessary Truth: Trust Has Become a Competitive Edge

Trust is no longer peripheral. In 2026, it will determine who scales AI successfully and who ends up on the wrong side of regulators, customers, or public outcry. Companies that do not put safety and transparency first lose markets; those that embrace them gain credibility.

This shift is why global AI leaders are less concerned with hype and more concerned with structure. Safety audits, risk assessments, model governance, and human oversight are no longer optional extras; they define how AI is used today.

Why 2026 Is Not Like Other AI Booms

Past waves of AI followed a familiar script: build fast, release early, and fix problems later. That playbook no longer works. AI systems now affect elections, financial markets, health, and national security decisions. A single failure can lead to lawsuits, reputational damage, or government intervention.

In response, 2026 ushers in a new phase: controlled acceleration. Progress continues, but within guardrails, and those guardrails are being built on the fly.

The Emergence of Global AI Safety Coalitions

Collaboration is one of the clearest signs of change. Major AI developers now sit at the same table, exchanging safety research, risk frameworks, and operating standards. Such cooperation would have been unthinkable only a few years ago. Competition still exists, but shared risks demand shared responsibility.

These coalitions focus on:

- Model alignment and reliability.

- Data integrity and consent.

- Preventing abuse and fraud.

- Managing risks in high-stakes deployments.

The point is clear: no single company can manage global AI risk alone.

AI’s biggest players now collaborate on safety and risk, proving that global AI challenges require shared responsibility. (Image Source: Chatham House)

Governments Step In Not to Kill Innovation, but to Shape It

Contrary to popular fear, regulation in 2026 does not seek to kill innovation. It aims to stabilise it. Governments recognise that unregulated AI creates uncertainty for businesses and citizens alike. Clear rules reduce fear, and predictable environments attract investment.

Policymakers are now focused on:

- Transparency obligations.

- Explainable decision-making.

- Clear liability structures.

- Mandatory risk disclosures.

Australia's Place in the Global AI Shift

Australia plays a quiet but influential role in this transition. Australian policymakers seek balance, favouring innovation that upholds democratic values, respects privacy expectations, and protects consumers. This positioning has made Australia an attractive destination for enterprise AI pilots, healthcare and financial AI solutions, and research ethics partnerships. Australian companies consistently seek AI they can understand, justify, and rely on.

Australia plays a quiet but influential role, backing AI innovation that balances progress with privacy, democracy and consumer protection. (Image Source: CFOtech Australia)

Enterprise AI Moves Into a More Mature Phase

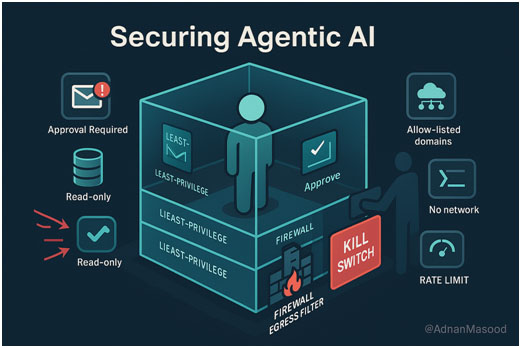

Within companies, AI adoption looks different. By 2026, the question is no longer whether to use AI, but whether it can be trusted in production. Procurement teams now demand established governance systems, clear documentation, ongoing monitoring tools, and human-in-the-loop safeguards. AI vendors that cannot provide these lose out. Trust has become measurable.

Why Safety Is Now the Driving Force of Innovation

Another trend emerges in 2026: safety does not hamper innovation; it redirects it. Developers build constraints into systems from the earliest design stages, constructing models that justify their choices and curtail hallucinations. The industry has learned that untrustworthy AI does not sell. Without trust, innovation fails. (Forbes)

From Black Boxes to Glass Boxes

Transparency is one of the most pronounced changes. Where AI models once operated as black boxes that produced outputs without justification, 2026 sees the rise of glass-box systems. These systems:

- Show confidence levels.

- Provide reasoning pathways.

- Allow human overrides.

- Log decisions for audits.
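For readers who build such systems, the four properties above can be pictured as a small data structure. The following is a minimal, hypothetical Python sketch, not any vendor's implementation: the names `Decision`, `AuditLog`, `decide` and `human_override`, and the 0.8 confidence threshold, are illustrative assumptions, and it assumes the underlying model already reports a confidence score and readable reasoning steps.

```python
from dataclasses import dataclass, field, replace
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: decisions below this confidence are flagged for human review.
CONFIDENCE_THRESHOLD = 0.8


@dataclass(frozen=True)
class Decision:
    """One 'glass box' decision: the output plus what a reviewer needs to see."""
    output: str
    confidence: float                    # model-reported confidence, 0.0 to 1.0
    reasoning: tuple[str, ...]           # human-readable reasoning steps
    needs_review: bool = False           # True when a human must check it
    overridden_by: Optional[str] = None  # name of the human who overrode it, if any


@dataclass
class AuditLog:
    """Append-only record of (UTC timestamp, event, decision) for later audits."""
    entries: list[tuple[str, str, Decision]] = field(default_factory=list)

    def record(self, event: str, decision: Decision) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.entries.append((stamp, event, decision))


def decide(output: str, confidence: float, reasoning: tuple[str, ...],
           log: AuditLog) -> Decision:
    """Wrap a model output, flag low-confidence cases, and log the decision."""
    decision = Decision(output, confidence, reasoning,
                        needs_review=confidence < CONFIDENCE_THRESHOLD)
    log.record("decision", decision)
    return decision


def human_override(decision: Decision, new_output: str, reviewer: str,
                   log: AuditLog) -> Decision:
    """Return a new Decision carrying a human's output; the original stays in the log."""
    updated = replace(decision, output=new_output,
                      overridden_by=reviewer, needs_review=False)
    log.record("override", updated)
    return updated


if __name__ == "__main__":
    log = AuditLog()
    d = decide("approve loan", 0.62,
               ("income verified", "short credit history"), log)
    print(d.needs_review)                  # True: 0.62 is below the 0.8 threshold
    d = human_override(d, "refer to underwriter", "analyst_1", log)
    print(d.output, "|", d.overridden_by)  # refer to underwriter | analyst_1
    print(len(log.entries))                # 2: the original decision and the override
```

Making `Decision` immutable is a deliberate choice in this sketch: an override creates a new record rather than editing the old one, so the audit log still shows what the system originally proposed alongside what the human decided.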

The Human Factor Returns to the Centre

Despite fears of automation, 2026 brings the focus back to people. AI no longer substitutes for human judgment; it supports it. Organizations are redesigning workflows so that people remain answerable for final decisions. Humans supervise while AI assists, keeping responsibility clear.

Despite fears of automation, 2026 puts humans back in charge, with AI supporting judgment rather than replacing it. (Image Source: Medium)

Deepfakes and Digital Consent: New Horizons of Accountability

Deepfake content remains a pressing safety concern. In the US, legislation such as the NO FAKES Act has been proposed to give people greater legal control over their digital likenesses. Deepfakes pose real risks in:

- Elections and political persuasion.

- Legal procedures and court evidence.

- Reputations of celebrities and public figures.

- Scams and identity fraud.

Consent is at the heart of digital security. Gaps in existing legislation are pushing regulators to act, often through international collaboration.

A New Anatomy of AI Regulation: From Soft Principles to Hard Law

2026 marks a turning point at which voluntary ethics give way to binding law. Loose norms are becoming formal regulation with real penalties attached.

European Union

The EU AI Act classifies systems by risk level. High-risk systems touching on health, credit, or justice must meet requirements on transparency and human oversight. For the most serious violations, fines can reach €35 million or 7 percent of global annual turnover, whichever is higher.

Asia-Pacific

South Korea is preparing to implement its AI Basic Act in January 2026, which establishes a national compliance centre. Vietnam has also enacted its first law on artificial intelligence, focusing on risk management and human control.

Africa

Efforts focus on aligning AI policy with the values of the African Union, safeguarding citizens while enabling innovation.

Economic Changes: Trust and Safety as the Engine of Markets

Safety and governance are now primary factors in investment decisions. Studies show that 60 percent of large organisations base AI funding on regulatory readiness. Practically, this means:

- Technical feasibility is evaluated after legal and ethical risk assessments.

- Security and safety teams work in conjunction with R&D.

- Boards require reporting on risks, not just performance metrics.


Local vs Cloud AI: A Change of Strategy

An emerging trend in 2026 is a rebalancing between local deployment and cloud services. Many organisations prefer systems running on their own infrastructure for data privacy and security. Local solutions keep data inside the organisation and offer real-time, auditable controls.

In 2026, organisations increasingly favour local AI systems to protect data, improve security and maintain full control. (Image Source: Techtweek Infotech)

What This Means for Creators, Developers and Ordinary Users

For Creators

Digital artists and filmmakers face questions about how to protect their work against unlicensed reproduction. Metadata tagging and watermarking are becoming standard tools for asserting rights.

For Developers

Compliance is now built into innovation teams. Developers must assess social impact, build explainable models, and document how systems behave in edge cases.

For Everyday Users

People interact with AI more safely. Social platforms must disclose synthetic content, and regulated industries must explain how decisions are made. Users benefit from clearer privacy terms and greater control over their information.

Looking Ahead: The Next Step After 2026

If 2026 is the year trust and safety become pillars of success, the following years will focus on scaling responsibly. Future priorities include international interoperability standards, cross-border enforcement treaties, and new models of human-AI collaboration. The leading companies and countries will be not only the largest, but also the most trusted and accountable.

Frequently Asked Questions

- What will AI trust and safety mean in 2026?

Ans: It refers to AI systems designed with transparency, accountability and safeguards that reduce harm and misuse in real-world deployment.

- What is driving increased AI regulation by governments?

Ans: AI's direct impact on public welfare, economic stability and democratic processes has made regulation necessary to lower risk and build trust.

- Does AI regulation reduce innovation?

Ans: No. Regulation guides innovation toward systems that can scale safely, responsibly and sustainably.

- What impact does AI governance have on businesses?

Ans: It determines which AI tools companies can use legally and ethically, especially in highly regulated industries.

- Will AI continue to develop rapidly in 2026?

Ans: Yes. However, progress now prioritises reliability, safety and trust alongside raw performance.

- What do deepfake laws mean for creativity?

Ans: Effective laws balance individual rights with artistic expression, preventing identity abuse without restricting legitimate research or creative work.

- Can global AI standards exist?

Ans: While progress varies by region, shared frameworks and harmonised risk classifications are increasingly gaining global traction.