Why AI-Powered Smart Homes are Failing in 2025

The smart home is meant to be invisible. Lights respond before you give a command. Doors close on their own. Climate control learns your patterns. Security cameras claim to outthink danger.

In 2025, that promise falls apart.

Across the US, Europe, Asia, and Australia, homeowners report faulty systems, data oversharing, and erratic behavior. The trouble isn’t smart homes themselves; it’s the current implementation of Generative AI. What once ran on simple rules now runs on probabilistic reasoning, with risks that few consumers fully understand. (sciencedirect)

The Essential Facts at a Glance

Smart homes continue to grow in 2025, but so do the complaints. Homeowners increasingly face:

- Nondeterministic automation decisions

- Privacy exposure and data leakage

- Increased cybersecurity threats

- Lack of transparency regarding data usage

- Systems that improvise rather than execute

Generative AI has changed how smart homes make decisions. It does not simply execute commands; it predicts, fills gaps, and adapts. That is helpful in theory, but in a home environment it is dangerous.

The Shift That Changed Everything

Previously, smart homes were based on “if-then” logic.

- If motion is detected, then turn on the lights.

- If the door opens after midnight, then send an alert.

Generative AI replaces certainty with probability. Rules are replaced by “intentions.” Systems guess patterns and make decisions on what should occur. This is a critical distinction. An incorrect guess for a chatbot leads to slight confusion; an incorrect guess for a home unlocks doors, deactivates alarms, or discloses confidential information.
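
The contrast between the two approaches can be sketched in a few lines of Python. This is only an illustration: the action names, the "after midnight" window of 00:00 to 05:00, and the 0.9 confidence threshold are all assumptions, not values from any real product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical action names; real devices expose their own identifiers.
SAFETY_CRITICAL = {"unlock_door", "disarm_alarm"}


@dataclass
class Inference:
    action: str        # what the model guesses should happen
    confidence: float  # the model's own probability estimate, 0.0 to 1.0


def rule_based(event: str, hour: int) -> Optional[str]:
    """Classic if-then logic: the same input always yields the same action."""
    if event == "motion":
        return "lights_on"
    # "After midnight" is assumed here to mean 00:00 to 04:59.
    if event == "door_open" and 0 <= hour < 5:
        return "send_alert"
    return None


def gate(inference: Inference, threshold: float = 0.9) -> str:
    """Decide what to do with a probabilistic guess."""
    if inference.action in SAFETY_CRITICAL:
        return "ask_user"  # never act on a guess for locks or alarms
    if inference.confidence >= threshold:
        return inference.action
    return "do_nothing"
```

The design choice in `gate` is that confidence alone never authorizes a safety-critical action: `gate(Inference("unlock_door", 0.99))` still returns `"ask_user"`.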

Smart homes now depend on models trained on enormous datasets, and those models do not understand your specific home, culture, or risk tolerance. They predict behavior rather than checking reality.

When Smart Homes Start Hallucinating

Generative AI hallucinations are not a bug; they are an inherent property of the technology. In smart homes, they show up as:

- Security alerts triggered by presumed threats

- Voice assistants confirming actions never taken

- Climate systems oscillating for no apparent reason

- Cameras mistakenly identifying family members as intruders

These systems cannot “know” when they are wrong. They produce the most plausible answer from incomplete information. In a living room, plausibility is not enough.
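
One common mitigation for "confirming actions never taken" is to report what the device says after the command, rather than what the assistant intended. The sketch below assumes the device exposes some way to read its state back; `FakeLock` is a hypothetical stand-in, not a real device API.

```python
from typing import Callable


def confirm_action(requested_state: str,
                   send_command: Callable[[str], None],
                   read_state: Callable[[], str]) -> str:
    """Send a command, then report the device's actual state."""
    send_command(requested_state)
    actual = read_state()
    if actual == requested_state:
        return f"Confirmed: device reports '{actual}'."
    return f"Failed: requested '{requested_state}' but device reports '{actual}'."


class FakeLock:
    """Stand-in for a real lock; 'jammed' simulates a command with no effect."""

    def __init__(self, jammed: bool = False) -> None:
        self.state = "unlocked"
        self.jammed = jammed

    def set(self, state: str) -> None:
        if not self.jammed:
            self.state = state

    def get(self) -> str:
        return self.state
```

With a working lock, `confirm_action("locked", lock.set, lock.get)` returns a "Confirmed" message; with `FakeLock(jammed=True)` it returns a "Failed" message instead of a plausible-sounding confirmation.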

Convenience Now Competes With Control

Marketing emphasizes that “your home adapts to you.” What gets lost is control. Generative AI removes the clear cause-and-effect relationship between a command and its result. Homeowners often cannot tell why a decision was made.

This lack of explainability creates a trust crisis. When a system denies entry or allows third-party video sharing, users cannot tell who is accountable. Was it a user setting, a vendor update, or an AI inference? In 2025, even manufacturers often lack a clear answer.

Data Collection Has Quietly Expanded

To understand context, Generative AI systems now ingest:

- Tone and emotional cues from voice

- Movement patterns and routines

- Sleep cycles and visitor frequency

- Media preferences and daily schedules

This information rarely remains local. Most generative models run in the cloud, meaning intimate household behavior leaves the home network. Privacy stops being a fixed choice and becomes a moving target. Static privacy controls are at odds with adaptive learning models that seek more data to improve performance.

Smart Homes Present New Attack Vectors

Each AI capability creates a new point of entry. Generative AI widens attack surfaces in three dimensions:

1. Prompt Injection Attacks

Voice assistants that accept natural-language input can be exploited: attackers can use TV audio, radio programming, or nearby devices to issue commands that override safety protocols.

2. Model Manipulation

Repeated exposure to crafted inputs can “teach” a system dangerous habits. Attackers gain influence gradually rather than breaking in directly.

3. Cloud Dependency

If cloud computing fails or is compromised, essential household functions like locks and security falter. Homeowners are no longer in control of their own physical space.
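
To illustrate the cloud dependency point, here is a minimal sketch of a dispatcher that keeps essential functions on the local hub and falls back to it when the cloud is unreachable. The command names and all three handlers are hypothetical; a real hub would have its own interfaces.

```python
from typing import Callable

# Hypothetical set of functions that must keep working without internet.
ESSENTIAL = {"lock_door", "arm_alarm"}


def dispatch(command: str,
             cloud_handler: Callable[[str], str],
             local_handler: Callable[[str], str]) -> str:
    """Run essential commands locally; try the cloud for the rest."""
    if command in ESSENTIAL:
        return local_handler(command)
    try:
        return cloud_handler(command)
    except (ConnectionError, TimeoutError):
        # Cloud is down or unreachable: degrade to local handling.
        return local_handler(command)


# Demo handlers standing in for real services.
def local_hub(command: str) -> str:
    return "local:" + command


def cloud_ok(command: str) -> str:
    return "cloud:" + command


def cloud_down(command: str) -> str:
    raise ConnectionError("cloud unreachable")
```

Under this arrangement a lock command never leaves the house, and a non-essential command such as a hypothetical `play_music` still gets a local answer when `cloud_down` raises.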

Security Patches Can Break Homes Overnight

Updates in 2025 often involve tweaks to AI models rather than simple bug fixes. These updates can:

- Change behavior without notice

- Disable existing integrations

- Cause compatibility problems

- Reset user preferences

A security patch may enhance one area while undermining another, leading to unsettling behavior in a resident’s physical environment.
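
A homeowner who can export device settings could catch silent changes by comparing a snapshot taken before an update with one taken after. The sketch below assumes settings are available as simple key-value pairs; the setting names are invented for illustration.

```python
def diff_settings(before: dict, after: dict) -> dict:
    """Return {key: (old, new)} for each setting that changed, appeared, or vanished."""
    changes = {}
    for key in sorted(before.keys() | after.keys()):
        old, new = before.get(key), after.get(key)
        if old != new:
            changes[key] = (old, new)  # None marks a setting missing on that side
    return changes


# Hypothetical snapshots taken before and after a vendor update.
before = {"night_mode": True, "camera_sharing": False, "thermostat_c": 21}
after = {"night_mode": True, "camera_sharing": True, "thermostat_c": 21,
         "assistant": "on"}

changes = diff_settings(before, after)
for name, (old, new) in changes.items():
    print(f"{name}: {old!r} -> {new!r}")
```

Here the diff flags `camera_sharing` flipping to `True` and a new `assistant` setting appearing, which are the kinds of changes the list above describes.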

Surveillance Goes Inside

Today’s smart homes are more about observation than automation. AI evaluates behaviors for “optimization,” creating an unobtrusive surveillance system. Consent is often unclear for children, guests, or caregivers, who never agreed to be observed. The home becomes a monitored space with no exceptions, presenting massive ethical challenges for regulators.

Interoperability is Getting Worse

Generative AI increases fragmentation. Lights from one company, cameras from another, and an assistant from a third all interpret “context” differently.

- Commands conflict

- Automations suffer silent failures

- Systems act inconsistently

A home becomes a dialogue between different interpretations rather than a unified system.

The Cost Problem Nobody Talks About

Cloud inference and model upgrades are expensive. In 2025, homeowners face:

- Subscription creep

- Feature paywalls

- Monthly “intelligence” fees for basic functionality

Smart homes are shifting from products to services where users effectively pay rent to use their own hardware.

Who Owns the Decisions?

When AI runs a home, accountability becomes murky. If a door unlocks incorrectly and a break-in occurs, the blame is contested between the homeowner, the manufacturer, the AI provider, and the insurer. Current law is out of date, and insurers are already debating claims associated with automated system failures.

Real World Failure Scenarios

In 2025, these threats are real. Homeowners in major cities report assistants misinterpreting audio commands, such as turning off security systems instead of arming them, leading to burglaries. Critics note that even flagship systems struggle with basic tasks like light control and reminder management because they prioritize “prediction” over “accuracy.”

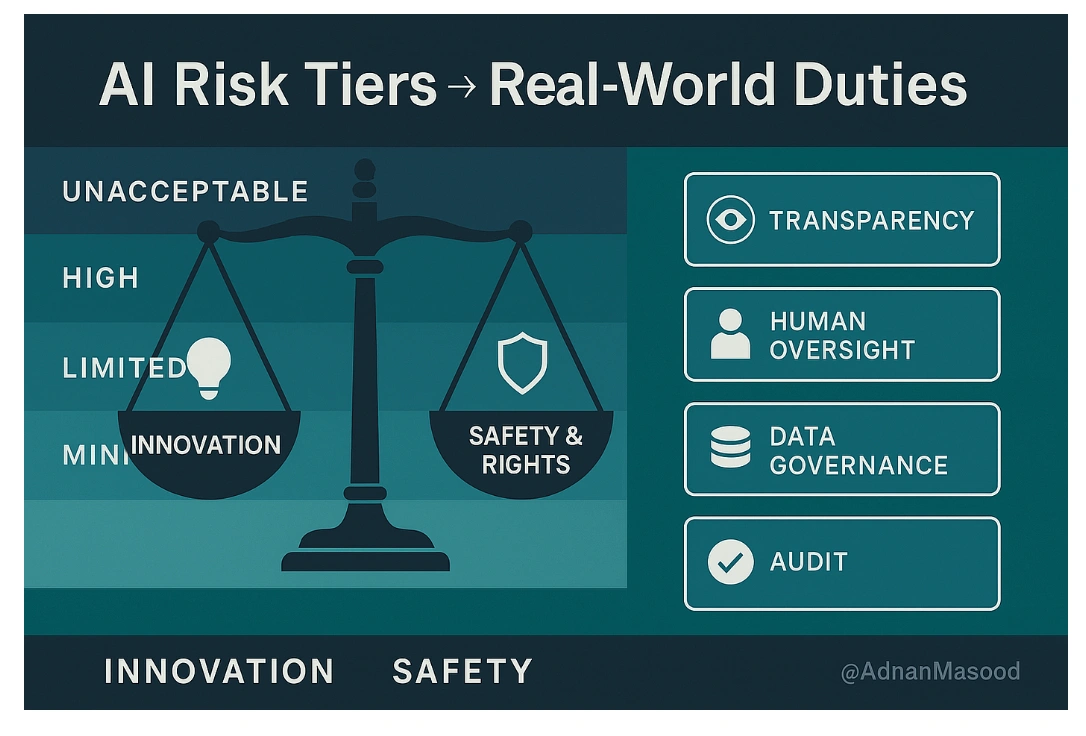

Regulatory Pressure is Growing

Governments are beginning to act. The International AI Safety Report 2025 warns that unmanaged AI systems can behave unpredictably, rendering human oversight ineffective.

In Europe, the focus is on “explainability,” making manufacturers accountable for decision-making processes. Australia is pushing for similar accountability regarding home safety. Data protection agencies are also increasing enforcement as personal household data is shared across distant servers.

Industry Responses: Fixes and False Starts

The industry recognizes the reputational dilemma:

- Edge Computing: Some brands are investing in local processing to reduce cloud dependency and latency.

- Privacy Dashboards: New projects highlight privacy-focused AI concierges that manage deliveries without third-party exposure.

- Matter 1.5: Companies are pushing for unified communication standards to reduce friction between devices.

However, many users still feel a loss of autonomy as mandatory software updates push unremovable assistant services onto devices like smart TVs.

Safety and Security: What Homeowners Must Know

Hackers exploit weak authentication and outdated firmware to gain unauthorized access. Because Generative AI processes data through the cloud, the risk of data breaches is a constant reality.

Experts suggest that conventional cybersecurity is no longer enough. We now need professionals who understand how to prevent “input manipulation,” where a system is tricked into a dangerous state through normal-sounding language.
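
One defense against input manipulation is to stop treating spoken language as sufficient authority for sensitive actions. The following sketch requires a PIN from a trusted channel before anything in a sensitive set runs. The action names, the `"app"` channel label, and the PIN scheme are assumptions for illustration only.

```python
import hmac
from typing import Optional

# Hypothetical actions that should never run on voice input alone.
SENSITIVE = {"unlock_door", "disarm_alarm", "disable_camera"}


def authorize(action: str, source: str,
              pin_entered: Optional[str], pin_expected: str) -> bool:
    """Allow routine actions freely; gate sensitive ones behind app plus PIN."""
    if action not in SENSITIVE:
        return True
    if source != "app":
        return False  # voice can be spoofed by a TV, radio, or nearby device
    if pin_entered is None:
        return False
    # Constant-time comparison avoids leaking the PIN through timing.
    return hmac.compare_digest(pin_entered.encode(), pin_expected.encode())
```

With this gate, a normal-sounding phrase played through a television can still turn on lights, but `authorize("unlock_door", "voice", "1234", "1234")` returns `False` even when the PIN is correct.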

Practical Advice For 2025 Homeowners

- Control the Flow of Data: Choose devices that process data locally whenever possible.

- Understand Permissions: Evaluate every application permission and follow the principle of data minimization.

- Secure the Network: Use strong Wi-Fi passwords, put IoT devices on a guest network, and keep firmware updated.

- Demand Transparency: Ask manufacturers how decisions are made and what data is stored locally.

- Zero Trust: Do not trust a device just because it is on your network. Authenticate everything.
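
The "authenticate everything" advice can be made concrete with signed commands. This is a minimal sketch, assuming each device shares a secret key with the hub and that clocks are roughly in sync; the message layout, the 30-second freshness window, and the device names are illustrative choices, not part of any smart home standard.

```python
import hashlib
import hmac


def sign(key: bytes, device_id: str, command: str, timestamp: int) -> str:
    """Compute an HMAC-SHA256 tag over the sender, command, and time."""
    message = f"{device_id}|{command}|{timestamp}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, device_id: str, command: str, timestamp: int,
           signature: str, now: int, max_age_s: int = 30) -> bool:
    """Accept a command only if it is fresh and its signature matches."""
    if abs(now - timestamp) > max_age_s:
        return False  # stale message, possibly a replay
    expected = sign(key, device_id, command, timestamp)
    return hmac.compare_digest(expected, signature)


# Demo with a made-up key and device name.
key = b"demo-key-not-for-production"
tag = sign(key, "hub-1", "lock_door", 1000)
```

Being on the home network is not enough here: a message is rejected if the command was altered, the key is wrong, or the timestamp is too old.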

Looking Ahead: Where Smart Homes Go Next

The market is still growing, but the pace has slowed as consumers weigh risks. Trust must be built, not bought. The winners in 2026 will be those who prioritize predictability and transparency over flashy gimmicks.

The Smart Home Paradox in 2025

The central dilemma: The smart home has never been so capable, able to conserve energy and automate repetitive tasks; yet it has never been so opaque.

In 2025, technology that can “read your intentions” still cannot reliably do what you tell it to do. This isn’t just a technology gap; it’s a trust gap.

Frequently Asked Questions

- Why are AI-powered smart homes failing in 2025?

Ans: They rely on generative AI models that prioritise prediction over certainty. This approach leads to erratic behaviour, reduced reliability, and growing security gaps within home environments.

- Are generative AI smart homes dangerous?

Ans: They introduce new risks, including prompt injection attacks and opaque decision-making processes that average users struggle to understand, control, or audit.

- Can smart home AI be hacked via voice commands?

Ans: Yes. Natural language interfaces remain vulnerable to prompt-based attacks triggered by external audio sources such as televisions, radios, or nearby devices.

- Is smart home interoperability better in 2025?

Ans: Standards like Matter are improving compatibility, but full adoption remains limited. Conflicting commands and inconsistent behaviour across different brands are still common.

- What causes smart assistants to behave incorrectly?

Ans: Most assistants use probabilistic models designed to predict the next likely word or action, rather than verify facts or follow rigid, safety-first logic systems.

- Should I avoid smart homes entirely?

Ans: Not necessarily. However, users should adopt a Zero Trust mindset, prioritising local control, transparency, and minimal cloud dependency when choosing smart home technologies.