In 2025, artificial intelligence is not science fiction; it is the infrastructure of modern life. From the news you read and the jobs you apply for to the way your healthcare is delivered, AI is shaping decisions that affect billions of people. Despite its influence, most of the public still lacks a fundamental understanding of what AI is and how it works. This gap between AI’s reach and public understanding of it, known as AI illiteracy, is quickly becoming one of the most urgent challenges of our digital era.

AI is not inherently dangerous, but ignorance about its mechanisms, biases, and limits can be. In this landscape, bridging the AI literacy gap is not a luxury—it’s a civic necessity.

Understanding the Technology: What AI Is—and Isn’t

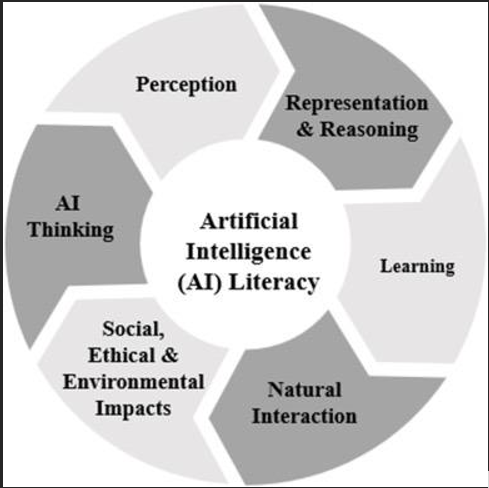

The six big ideas of the AI literacy intelligence-based curriculum for primary school students (Yim, 2023).

Before discussing the societal implications, it’s vital to grasp the basics of how AI works. Modern AI, especially the large language models (LLMs) behind tools like ChatGPT, operates by identifying statistical patterns in massive datasets. These and related models generate text, analyze images, and even create videos, not through “thinking,” but through predictive algorithms trained on past data.

Despite media narratives, AI does not possess consciousness, emotions, or understanding. It does not “know” anything in a human sense; it calculates which output is most probable given its input. Yet many users interact with AI as if it were sentient, leading to unrealistic expectations and misplaced reliance.
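To make the idea of "calculating probabilities" concrete, here is a minimal Python sketch of a toy next-word predictor. It is an illustration only: real LLMs use neural networks trained on vastly more data, and the function names and the tiny corpus below are made up for this example. The principle is similar, though: count patterns in past text, then turn them into probabilities for what comes next.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn raw follow counts into probabilities for the next word."""
    counts = model.get(word.lower())
    if not counts:
        return {}  # the model has never seen this word, so it has no guess
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}


# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

The toy model "predicts" that "cat" follows "the" simply because that pairing appeared most often in its training text. It has no idea what a cat is.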

The Real-World Consequences of AI Illiteracy

1. Misinformation and the Algorithmic Echo Chamber

AI-powered recommendation engines on platforms like YouTube, TikTok, Facebook, and X (formerly Twitter) prioritize content that keeps users scrolling. This content is often emotionally provocative, sensationalized, or polarizing. In a world where viral content trumps verified content, AI can unintentionally amplify misinformation, disinformation, and conspiracy theories.

The result? Entire populations can fall into echo chambers where they are repeatedly exposed to misleading content, reinforcing existing biases and eroding public trust in scientific facts, journalism, and institutions.
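The ranking logic described above can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical example: real recommendation systems are far more complex and their details are not public, and the posts, field names, and scores here are invented. It only shows what happens when a feed is sorted by predicted engagement and nothing else.

```python
def rank_by_engagement(posts):
    """Order posts purely by predicted engagement, highest first."""
    return sorted(posts, key=lambda post: post["predicted_engagement"], reverse=True)


# Invented posts with hypothetical engagement scores (0 to 1).
feed = [
    {"title": "Local council publishes budget report",
     "predicted_engagement": 0.12, "fact_checked": True},
    {"title": "SHOCKING secret they don't want you to know",
     "predicted_engagement": 0.91, "fact_checked": False},
    {"title": "Study explains vaccine trial results",
     "predicted_engagement": 0.30, "fact_checked": True},
]

ranked = rank_by_engagement(feed)
for position, post in enumerate(ranked, start=1):
    print(position, post["title"], "| fact-checked:", post["fact_checked"])
```

Because accuracy is not part of the sorting key, the unverified but provocative post lands at the top of the feed. Nothing in the code "wants" to spread misinformation; it is just optimizing for the only number it was given.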

2. Algorithmic Bias and Systemic Inequality

AI models reflect the biases present in their training data. If that data contains historical inequalities—based on race, gender, class, or location—the AI will likely reproduce and amplify them.

For instance:

- Facial recognition systems have shown significantly lower accuracy for people with darker skin tones.

- Risk-assessment tools used in U.S. courts, such as COMPAS, were found in a 2016 ProPublica analysis to wrongly flag Black defendants as likely reoffenders more often than white defendants.

- Hiring algorithms trained on biased historical data may inadvertently prefer male candidates for leadership roles.

Without widespread literacy, these issues remain invisible to the average user—yet their impact can be life-changing.
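One way to make this kind of bias visible is to compare how often a system selects people from each group. The Python sketch below does that for a made-up set of hiring recommendations. The data and function names are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from U.S. employment guidelines, used here only as an example of a simple check, not as a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Made-up model outputs: (group label, did the model recommend the candidate?)
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"ratio = {ratio:.2f}, below 0.8 threshold: {ratio < 0.8}")
```

In this invented example the model recommends 60% of men but only 30% of women, a ratio of 0.5. A reader who knows to ask for numbers like these is far better placed to question an automated decision.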

3. The Digital Divide: Who Benefits from AI?

While Silicon Valley races to build the next breakthrough in AI, large swaths of the global population are still without reliable internet access or even basic digital literacy. The digital divide is quickly becoming an algorithmic divide.

People without access to or understanding of AI tools:

- Miss out on education and job opportunities

- Cannot participate fully in democratic processes

- Become targets of manipulation without knowing it

In contrast, those with access and literacy gain economic advantages, reinforcing an AI-driven form of global inequality.

The Need for Widespread AI Literacy

If AI is to serve society equitably, we must cultivate a public that understands AI—not at a deep technical level, but enough to question, critique, and engage with it responsibly.

Integrating AI Education from Early On

Education systems must urgently evolve. AI shouldn’t be reserved for engineering students or tech professionals; it must be woven into school curricula, starting from middle school.

Key components of AI literacy education:

- Understanding how algorithms work

- Recognizing algorithmic bias and ethical risks

- Knowing how AI influences media, politics, and behavior

- Developing critical thinking to question algorithmic decisions

In Finland, for example, the University of Helsinki and the technology company Reaktor launched Elements of AI in 2018, a free online course designed to teach basic AI concepts to the general public. Other countries must follow suit.

Public Awareness and Community Outreach

Beyond schools, community centers, libraries, and NGOs can play a pivotal role in making AI education more inclusive. This includes:

- Hosting free AI workshops for parents and seniors

- Distributing educational content in regional languages

- Partnering with local media to run awareness campaigns

Think of it this way: if AI is shaping your future, shouldn’t you understand how it works?


Ethical AI: We Need More Than Regulation

While AI literacy empowers the public, it must be paired with ethical responsibility from developers, corporations, and governments.

Three pillars of responsible AI use:

- Transparency – Users should know when they’re interacting with AI and understand how it works.

- Accountability – Developers must be accountable for harmful outcomes caused by AI models.

- Inclusivity – AI must be developed and tested across diverse datasets to reduce bias and promote equity.

Companies must also disclose training data sources, explain decision-making processes, and provide opt-out options where AI is being used.

How AI-Literate Citizens Can Shape the Future

An informed public can:

- Demand fair AI practices from tech companies

- Spot deepfakes and disinformation before they go viral

- Use AI tools responsibly to enhance their lives and careers

- Hold governments accountable for surveillance and privacy abuses

Most importantly, AI-literate individuals are less likely to be manipulated and more likely to participate in shaping a future where technology serves humanity—not the other way around.

Conclusion: From Passive Users to Active Participants

The AI revolution is not coming. It’s here.

But knowledge is power. And in this era of exponential innovation, AI literacy is the foundation of digital citizenship. Just as basic reading and writing are essential for participation in modern society, so too is a working knowledge of AI systems.

If we want a future where technology is used ethically, inclusively, and intelligently, we must start by closing the AI literacy gap now. The more we understand AI, the more we can shape it, control it, and use it for good.