Goldman Sachs is no longer just chatting with a bot. It is delegating work to one, and it is doing so with Anthropic.

On Friday, 6 February 2026, Goldman confirmed that it is building AI-powered agents with Anthropic to automate internal banking processes such as trade and transaction accounting, client due diligence and onboarding. Anthropic engineers work directly with Goldman teams, and the collaboration has already been running for about six months. The objective is simple: cut the time spent reconciling trades, vetting clients and opening accounts.

This is autonomy, not ordinary automation. These applications do not merely generate text. They operate inside a workflow: they read documents, pull data from internal systems, apply rules within guardrails and hand the work on to the next step. That matters in banking, because the back office is where small mistakes turn into big problems and where compliance work quietly eats up days.

Goldman Sachs is using Anthropic AI agents to automate back-office tasks like accounting, due diligence and onboarding. (Image Source: Fortune)

Why This Story Is Trending Now

AI agents are moving from demos into controlled, high-stakes work. Goldman's move comes as other players push agent infrastructure to centre stage. Separately, OpenAI has released enterprise tooling to help organisations build and manage AI agents, a sign that agent management is becoming a product category in its own right rather than a feature.

That timing is why the headline is spreading. It is not “AI writes faster”. It is “AI starts doing”.

What Goldman Is Actually Building

The term “AI agent” can sound vague, so here is a realistic picture: a digital co-worker with a narrow job description.

Goldman's initial targets sit in the parts of the bank that customers never see:

- Trade and transaction accounting: where teams reconcile records, resolve breaks and close the books.

- Client due diligence: where analysts verify identity documents, ownership structures and risk indicators.

- Onboarding: where systems and people coordinate approvals, account set-up and rule compliance.

These functions are rule-heavy, constant and repeatable. They also generate the documentation that regulators expect a bank to keep.

Goldman's CIO, Marco Argenti, indicates that what matters most to the bank is time. When an agent shrinks multi-step checks from hours to minutes, the bank does more than save money. It responds faster to client requests, market moves and compliance inquiries.

How Is This Different From Using AI at Work?

Most AI used in the workplace is still glorified autocomplete. It helps you draft a memo, summarise a meeting or write code faster. You remain the operator.

An agent changes the posture. You shift from “write this” to “do this”, and the system takes action.

Imagine a simplified onboarding request:

- A relationship manager uploads the client's documents.

- An agent extracts the key fields (names, ownership, addresses, directors).

- It screens them against sanctions lists and watchlists.

- It flags discrepancies or missing items.

- It builds a case file with links to the evidence and sends it to a human reviewer.

- Once the case is approved, it triggers the appropriate internal set-up and monitoring rules.
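To make that flow concrete, here is a minimal Python sketch of the screening and case-file steps. Everything in it is hypothetical: the field names, the `WATCHLIST` set and the `build_case_file` function are illustrative assumptions, not Goldman's or Anthropic's systems, and real sanctions screening matches against official lists with fuzzy name matching rather than an exact set lookup.

```python
from dataclasses import dataclass, field

# Hypothetical watchlist. Real screening uses official sanctions lists and fuzzy name matching.
WATCHLIST = {"acme shell holdings"}

# Hypothetical set of fields the onboarding checklist requires.
REQUIRED_FIELDS = ("name", "ownership", "address", "directors")


@dataclass
class CaseFile:
    client: str
    flags: list[str] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)
    status: str = "pending_review"  # the agent never approves; a human reviewer decides


def build_case_file(extracted: dict, sources: dict) -> CaseFile:
    """Screen extracted fields, flag gaps and package evidence for a human reviewer."""
    case = CaseFile(client=extracted.get("name") or "<unknown>")

    # 1. Flag missing or empty fields instead of guessing values.
    for name in REQUIRED_FIELDS:
        if not extracted.get(name):
            case.flags.append(f"missing:{name}")

    # 2. Screen the client and each director against the watchlist.
    parties = [extracted.get("name", "")] + list(extracted.get("directors", []))
    for party in parties:
        if party and party.strip().lower() in WATCHLIST:
            case.flags.append(f"watchlist_hit:{party}")

    # 3. Link each extracted field to the document it came from.
    for name in REQUIRED_FIELDS:
        if name in sources:
            case.evidence.append(f"{name} <- {sources[name]}")

    return case


case = build_case_file(
    extracted={
        "name": "Example Pty Ltd",
        "ownership": "",
        "address": "1 George St, Sydney",
        "directors": ["Acme Shell Holdings"],
    },
    sources={"name": "company_extract.pdf", "address": "utility_bill.pdf"},
)
print(case.flags)   # ['missing:ownership', 'watchlist_hit:Acme Shell Holdings']
print(case.status)  # pending_review
```

The design choice worth noticing is that the function only ever produces flags and evidence links; the `status` stays at `pending_review`, so the decision remains with a person.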

That isn’t a chat. It’s a workflow.

This is also why enterprise tooling matters. As vendors talk up agents, many businesses are asking for the missing piece: how to manage them safely at scale. According to TechCrunch, OpenAI is moving into agent management and positioning it as enterprise infrastructure for adoption.

Why Banking is the Testing Ground

Banks love efficiency. They also love control. Those instincts clash once an AI system starts taking actions.

So why is banking a proving ground? Because banking operations already run like assembly lines. Each stage has its inputs, outputs, approvals and logs. That structure makes it easier to insert an agent, measure its impact and spot failures quickly.

It also forces discipline. From day one, model risk, compliance and internal audit teams ask the same questions:

- What data sources does the agent touch?

- What tools can it trigger?

- What happens when it is wrong?

- Can we reproduce its steps next month?

- Can its decisions be explained to a regulator?

If the system cannot answer those questions, it does not ship.

Banks want speed and control, so AI agents face tough scrutiny. Banking’s structured, logged workflows make it ideal to test them and if actions can’t be traced, they don’t ship. (Image Source: InvestmentNews)

The Human Side of the Trend

Bank operations look like queues, checklists and handoffs. Someone is chasing a missing document. Someone is resolving a reconciliation break. Someone is answering a “can you confirm?” message with a deadline looming.

Now add an agent.

The agent pre-processes the queue and returns a shortlist: these look clean, these need a human, these look risky and should be escalated.

That is where the emotion sits. Not in the hype, but in the relief of fewer dead ends, and in the pressure of knowing your name is still on the approval.

If you are writing for both experts and non-experts, this is the framing: agents do not arrive like sci-fi robots. They arrive as queue-clearers. And queues are where organisations slowly bleed time.

Why Claude Matters, and Why It May Not Be the Last Model

The partnership runs on Anthropic's Claude models, which Anthropic pitches as business-oriented and safety-conscious. That positioning resonates in finance, where risk is everyone's working vocabulary.

The broader signal is structural: a universal bank is treating foundation models as infrastructure, like databases or cloud services. The choice is not permanent. It opens an era in which a bank tests models on its own platform for cost, speed, accuracy and governance, and swaps them out as the economics change.

Goldman is using Claude for its business-ready, safety-first design, but the bigger point is this: banks treat models like infrastructure and will switch as costs and performance change. (Image Source: TechRadar)

Put differently, the model is a component. The system around it is the advantage.

The Real Technical Challenge: Actions, Not Text

Text is easy. Actions are hard.

A drafting agent might leave a typo in an email. An agent that triggers transactions can create a problem that takes weeks to unwind.

That is why banks prefer to build in layers:

- Read-only agents for summarisation, extraction and classification.

- Decision proposers whose recommendations must be approved.

- Action agents that run narrow tasks with tight guardrails, logging and rollback.
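One way to picture these layers is as a gate that checks each requested action against the tier an agent has been cleared for. This is a minimal sketch under assumed names: `Tier`, `ACTION_TIER`, `gate` and the action names are hypothetical and do not describe how any bank or vendor actually implements it.

```python
from enum import IntEnum


class Tier(IntEnum):
    READ_ONLY = 1  # summarise, extract, classify
    PROPOSE = 2    # recommend; a human must approve before anything happens
    ACT = 3        # narrow actions with guardrails, logging and rollback


# Hypothetical mapping from action name to the minimum tier it requires.
ACTION_TIER = {
    "summarise_document": Tier.READ_ONLY,
    "recommend_decision": Tier.PROPOSE,
    "update_record": Tier.ACT,
}


def gate(action: str, agent_tier: Tier) -> str:
    """Return what happens to a requested action given the agent's clearance."""
    required = ACTION_TIER.get(action)
    if required is None:
        return "denied: unknown action"  # default-deny anything not on the list
    if agent_tier < required:
        return "denied: tier too low"
    if required == Tier.PROPOSE:
        return "queued: awaiting human approval"
    return "executed"


print(gate("summarise_document", Tier.PROPOSE))  # executed
print(gate("recommend_decision", Tier.PROPOSE))  # queued: awaiting human approval
print(gate("update_record", Tier.PROPOSE))       # denied: tier too low
```

Default-deny for unknown actions is the key choice here: an agent can only do what someone has explicitly listed.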

Goldman's reported use cases fit the middle layer. They involve decisions, but with checkpoints.

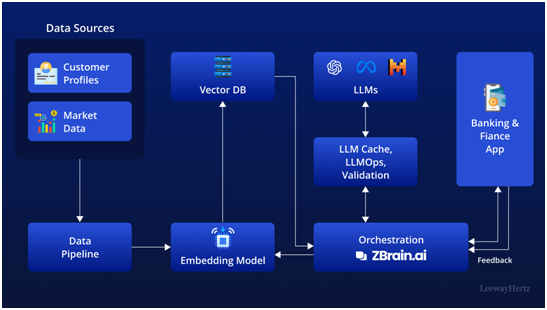

This is also where agent management comes in. Enterprises need a way to:

- Configure permissions (this agent reads these systems, not those).

- Track performance (accuracy, time saved, error rates).

- Intervene (stop it quickly when it behaves strangely).

- Keep governance records (histories of requests, tool calls and approvals).
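A minimal sketch of the permission, pause and record-keeping pieces, assuming a simple allowlist model. `AgentPolicy`, its methods and the system and tool names are hypothetical illustrations, not any vendor's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentPolicy:
    """Hypothetical per-agent policy: which systems it may touch and which tools it may call."""
    allowed_systems: frozenset[str]
    allowed_tools: frozenset[str]
    paused: bool = False
    log: list[dict] = field(default_factory=list)

    def request(self, tool: str, system: str) -> bool:
        """Check one tool call against the policy and record the decision either way."""
        allowed = (
            not self.paused
            and tool in self.allowed_tools
            and system in self.allowed_systems
        )
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "tool": tool,
            "system": system,
            "allowed": allowed,
        })
        return allowed

    def pause(self) -> None:
        """Kill switch: refuse every request until an operator resumes the agent."""
        self.paused = True

    def resume(self) -> None:
        self.paused = False


policy = AgentPolicy(
    allowed_systems=frozenset({"kyc_documents", "crm"}),
    allowed_tools=frozenset({"read_document"}),
)
print(policy.request("read_document", "kyc_documents"))   # True
print(policy.request("read_document", "general_ledger"))  # False (system not on the allowlist)
policy.pause()
print(policy.request("read_document", "kyc_documents"))   # False (agent paused)
print(len(policy.log))                                    # 3
```

Refused requests are logged too, because a pattern of denied calls is often the first sign an agent is behaving strangely.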

You can see the innovation in the industry's rush to build that scaffolding into new enterprise products and releases.

The Trust Battle: Speed Versus Proof

AI agents sell speed. Regulated industries demand proof.

To be used in banking, an agent needs to produce:

- Evidence: access to the sources it used.

- Traceability: a record of the steps it followed.

- Controls: approvals and permissions.

- Repeatability: the ability to reproduce its results.
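Traceability usually comes down to an append-only record of every step. A common technique is hash chaining: each entry stores a hash of the previous one, so any later edit to the history is detectable. The sketch below uses Python's standard `hashlib` and `json`; the `AuditTrail` class and its entry fields (`step`, `inputs`, `output`, `sources`) are assumptions for illustration. It makes a record tamper-evident; it does not by itself make a model's outputs reproducible.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log of agent steps (illustrative sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, step: str, inputs: dict, output: str, sources: list[str]) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"step": step, "inputs": inputs, "output": output,
                "sources": sources, "prev_hash": prev_hash}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("extract", {"doc": "passport.pdf"}, "name=Jane Citizen", ["passport.pdf"])
trail.record("screen", {"name": "Jane Citizen"}, "no watchlist hit", ["watchlist_v1"])
print(trail.verify())  # True
trail.entries[0]["output"] = "name=Someone Else"  # simulate tampering with history
print(trail.verify())  # False
```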

The market is being shaped around that need. In 2026, the winners will be the teams that stop treating AI as a temporary add-on and run it as a system: designed, monitored and refined.

AI agents offer speed, but banking demands proof: evidence, traceable steps, strict controls, and repeatable results. (Image Source: LeewayHertz)

What This Means for Australia Right Now

Whether or not you work in finance, Goldman's move is a signal. The question is no longer whether agents will arrive. It is “where do they land first?”

For Australian organisations, the safest starting point is a well-defined queue with clear inputs and outputs, such as onboarding packs, reconciliations, claims triage or compliance review backlogs. Define the guardrails. Keep a human reviewer. Measure results before and after. Expand only once the logs and controls hold up.

What the Market Watches Next

Over the coming weeks, three themes are likely to dominate the agent conversation.

First, permissioning is the feature. Once a firm talks about an agent, the next question is what that agent is allowed to do. Serious deployments spell this out: read access to named systems, limited tool use, mandatory authorisation for risky actions, and logs of every action taken.

Second, pricing pressure builds quickly. Once the back office is automated, banks judge cost per case rather than cost per token, and that pushes vendors to compete on throughput.

Third, evidence becomes a product. The most valuable output is often the audit trail, provided it can stand up to a regulator, a court or an incident review.

This momentum is not limited to banks. Large organisations are trialling agents inside workflows to offload repetitive tasks without removing human accountability.

Inside the Agent Stack: Why It Works in a Bank

When people hear “AI agent”, they picture a more confident chatbot. Goldman's move points to something more practical: a workflow engine that reasons, checks and routes.

An agent succeeds in a bank only when it does not act as a speculative operator. It analyses documents, extracts structured information, cross-checks it and proposes an evidence-backed outcome. The moment it starts guessing without receipts, it becomes a liability.

That is why Goldman aims its first wave at operations work that already runs on rules: trade and transaction accounting, due diligence and onboarding. These are repeatable, auditable lanes where improvement is easy to measure.

The Three Layers Banks Build, and Why

Every big win is reached by climbing a ladder:

1) Extract + summarise (low risk). The agent pulls fields from PDFs, emails, forms and internal notes. It labels the data and links each item to its source.

2) Recommend + flag (medium risk). It detects missing items, discrepancies or red flags. It suggests a decision, but a human must sign off.

3) Act + log (higher risk). It triggers approved actions, such as opening a case, requesting a document, raising a workflow ticket or updating a record, under strict authorisation and with complete logs.

Goldman's reported focus sits in the first and second layers, which is exactly where banks lose the most time.

Why This Story Matters Now: The Agent Management Arms Race

Goldman is not alone in pushing agents into real workflows. The market is shifting toward platforms that manage agents, not just models that talk.

This week, OpenAI is rolling out an enterprise product that lets organisations build and control agents, including permission controls and integrations with external apps and data. The timing is telling: businesses now treat agent governance as a first-class requirement rather than an afterthought.

The reason is simple: a model that generates text can embarrass you. An agent that does things can break things.

So the conversation shifts from “which model is the smartest?” to:

- Which platform can be trusted with permissions?

- Which one leaves audit trails that hold up?

- Which one lets you pause agents instantly?

- Which one keeps a human in the loop for sensitive steps?

That is the infrastructure behind the headline.

The Question Everyone Asks: How Do We Know It Is Right?

Finance doesn’t reward magic. It rewards proof.

To roll out agents safely, banks must be able to show who approved what, which data the agent handled, what actions it took and what evidence supports the result.

This is not hypothetical in Australia. APRA has signalled that it is already assessing emerging AI risks and plans more targeted supervisory engagement with larger entities to understand their AI risk management and oversight practices.

That one fact changes how leaders design projects. They do not ask, “Can this be automated?” They ask, “Can we defend this?”

The Governance Gap Regulators Keep Warning About

ASIC has already warned that AI adoption in financial services can outpace governance. Its report on governance arrangements in the face of AI innovation (REP 798) examines how licensees use and plan to use AI, and the controls they have in place.

ASIC's own messaging stresses that AI can deliver benefits but can also heighten risks to consumers and market integrity unless organisations manage it carefully.

If you are writing this story, do not frame it as a technology upgrade. Frame it as a contest between automation and governance.

ASIC warns AI can outpace governance in finance. REP 798 highlights the need for strong controls, so frame this as automation versus governance, not just a tech upgrade. (Image Source: The National Law Review)

What Changes Inside the Bank: Not Just Headcount

Here is what happens behind the scenes: most operations teams do not drown because the work is hard. They drown because it is endless.

Agents go after the endless parts:

- Collecting documents

- Filling fields

- Verifying details

- Chasing missing items

- Writing case notes

- Building evidence packs

This changes people's working day. Staff stop being data-entry operators and become exception handlers.

In practice, that means:

- More judgment calls

- More escalation decisions

- More accountability per decision

- Less time on the copy, paste, check, repeat treadmill

It is a welcome but uncomfortable change. Welcome because it cuts busywork. Uncomfortable because it pushes people toward higher-stakes decisions.

The Real Payoff: Speed, Fewer Errors and Less Stressful Deadlines

Most companies pitch ROI as labour savings. In banking, the sharper value is operational:

- Faster cycle times: Onboarding speeds up when agents complete their checks and flag gaps early.

- Fewer reconciliation breaks: When the agent surfaces problems, accounting teams spend less time hunting for mismatched records.

- Cleaner compliance files: Because the agent gathers evidence throughout the process, reviews get easier and audits become less painful.

Goldman's CIO says the goal is to cut the time these processes take, which puts cycle time and throughput at the centre of the success metrics.

What to Watch Next: Five Signs This Is Real

Much AI activity never gets past the press release. These signals separate real deployments from hype:

- Named workflows, not promises: Goldman names specific targets (accounting, due diligence and onboarding).

- Human checkpoints: If a vendor cannot explain its approval gates, it is not ready for regulated work.

- Tool permissions: The organisation decides which systems the agent can read, write to and trigger, and locks that down.

- Full logs and replay: The organisation can reconstruct how an agent reached an outcome weeks later.

- Incident playbooks: Teams define what they do when the agent behaves strangely: pause, quarantine, roll back, review.

To make the article feel expert without putting readers off, anchor the story on these signals. They make the topic tangible.

How Australian Organisations Can Apply the Same Playbook

Even if you are not in banking, you probably run workflows that resemble banking ones: onboarding, compliance, payments, reconciliations, claims and procurement.

A safe rollout might look like this:

- Step 1: Choose a queue with visible inputs. Pick a process with structured outcomes: approve / reject / request info.

- Step 2: Make the agent an assistant. Start with extraction and summarisation. Keep it read-only until trust is established.

- Step 3: Add a “risk lens”. Have it flag warning signs such as missing documents, name mismatches or conflicting dates.

- Step 4: Add approvals. Let the agent draft recommendations and evidence. A human approves.

- Step 5: Add narrow actions. Once the logs prove solid, allow it to create tickets, send requests or update records.

- Step 6: Measure ruthlessly. Track cycle time, error rate, rework rate, escalations and customer wait time.
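Step 6 is easier to enforce when the metrics are computed the same way before and after the agent arrives. Below is a minimal sketch assuming a hypothetical per-case record (`Case`) with four of the measures named above; customer wait time and staff time per case are left out for brevity, and the numbers are illustrative only, not real results.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Case:
    hours_to_close: float
    had_error: bool
    reworked: bool
    escalated: bool


def queue_metrics(cases: list[Case]) -> dict[str, float]:
    """Summarise one queue; the *_rate values are fractions of all cases."""
    if not cases:
        raise ValueError("need at least one case")
    n = len(cases)
    return {
        # Median resists a few very slow outliers better than the mean.
        "median_cycle_hours": median(c.hours_to_close for c in cases),
        "error_rate": sum(c.had_error for c in cases) / n,
        "rework_rate": sum(c.reworked for c in cases) / n,
        "escalation_rate": sum(c.escalated for c in cases) / n,
    }


# Illustrative numbers only, not real results.
before = [Case(48, True, True, False), Case(30, False, False, True),
          Case(36, False, True, False), Case(40, False, False, False)]
after = [Case(6, False, False, True), Case(4, False, False, False),
         Case(8, True, True, False), Case(5, False, False, False)]

for label, cases in (("before", before), ("after", after)):
    print(label, queue_metrics(cases))
```

Tracking error and rework alongside cycle time matters: a queue that closes faster but sends more cases back has not really improved.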

This structure fits the direction of Australia's governance discussion, in which organisations pursue responsible adoption, explicit oversight and risk controls that scale.


The Bigger Trend Line: AI6 and the Responsible Adoption Drive

Beyond the big banks, Australia's governance conversation is converging too. Guidance from the National AI Centre, built around six essential practices for AI adoption (AI6), encourages leaders to develop common governance practices across the sector. According to commentary from Australia's professional community, this guidance helps organisations build a shared approach to responsible AI.

You can anchor your article on this: agents do not just change workflows, they force organisations to mature their governance.

Conclusion: The Quiet Revolution Behind the Scenes

This story is not about AI typing emails. It is about AI operating in the plumbing of modern finance.

Goldman's partnership with Anthropic puts the spotlight on where the agent wave hits first: the bulk of operational work that makes a bank feel either fast or frustrating.

For readers, the lesson is practical. Agents are not far off. They are already inside workflows, and the winners will be the teams that pair automation with governance: permissions, logs, approvals and accountable oversight.

Frequently Asked Questions

- What is an AI agent in banking operations?

Ans: An AI agent is software that completes multi-step tasks, such as extracting information, applying rules, generating case files and routing approvals, rather than only answering questions.

- What is the difference between an AI agent and a chatbot?

Ans: A chatbot talks. An agent works across systems: it reads documents, verifies data and forwards tasks based on permissions and approvals.

- What banking functions are currently appropriate for AI agents?

Ans: Processes with clear steps and rules, such as onboarding checks, due diligence packs, transaction accounting reconciliation and exception triage.

- Do agents replace bank staff?

Ans: They reduce repetitive work and shift people towards exception handling, judgment-based decisions and supervision. Most banks treat early deployments as support, not direct replacement.

- What prevents an agent from engaging in dangerous actions?

Ans: Authorisations, access controls and logging. Companies want platforms that limit what agents can see and do, and keep actions traceable.

- What are the areas of concern for regulators in Australia?

Ans: Governance, oversight and risk management. APRA flags supervisory focus on emerging AI risks, and ASIC highlights governance arrangements as AI adoption expands.

- How do you measure the ROI of AI agents?

Ans: Track cycle time, rework rate, error rates, escalations, staff time per case and customer wait time, not just “time saved”.

- What is the highest hidden cost?

Ans: Quality control. You need monitoring, audits and an incident response plan, or costs return as rework and compliance risk.

- What is the largest competitive strength?

Ans: Speed with proof: moving faster while still being able to justify decisions with evidence.

- What follows Goldman's move?

Ans: More banks trial agents in back-office workflows, while vendors compete on agent management, auditability and integration, not only model intelligence.