Artificial intelligence is already embedded in the financial system. Banks use it to approve loans. Insurers rely on it to price risk. Investment firms deploy it to trade markets at a speed humans cannot match.

And now, UK regulators are raising a red flag. The message is direct: AI is moving faster than financial safeguards. If oversight does not keep up, the consequences could hit consumers first and the wider financial system next. (Reuters)

This warning sits at the centre of a growing dilemma facing the UK government and the Bank of England: How do you encourage innovation without exposing millions to invisible, automated risk?

AI now drives loan approvals and high-speed trading, but UK regulators warn that safeguards are struggling to keep up. (Image Source: Professional Paraplanner)

The Essential Facts: Why Regulators Are Concerned Right Now

UK financial regulators are not calling for an AI shutdown. They are calling for control. Recent regulatory briefings and parliamentary discussions highlight three immediate risks:

- Unclear accountability when AI systems make harmful decisions

- Bias and discrimination embedded in automated financial models

- Systemic risk, where identical AI systems behave the same way at the same time

The concern is not theoretical. AI is already influencing credit approvals, fraud detection, pricing, customer service, and investment decisions across UK finance. When these systems fail, they do so at scale.

AI is No Longer Experimental in Finance

This is not a future problem. AI systems in UK finance are live, operational, and in many cases regularly updated with new data.

- Banks use machine learning to decide who qualifies for a mortgage.

- Fintech platforms deploy algorithms to approve loans in minutes.

- Trading firms rely on automated models to respond to market signals instantly.

Each decision may look small in isolation. Together, they shape access to money, trust in institutions, and financial stability. That scale is what worries regulators.

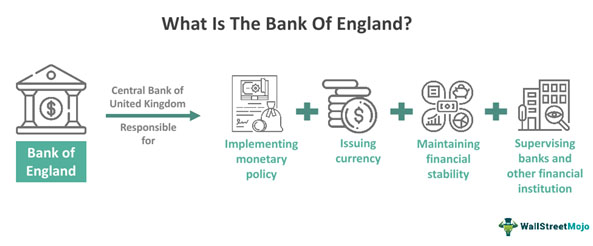

Why the Bank of England is Paying Attention

The Bank of England’s role goes beyond consumer protection. Its core responsibility is financial stability. AI introduces new forms of risk that traditional stress tests do not fully capture:

- Models trained on biased or outdated data: These can lead to incorrect market assumptions.

- Feedback loops: Where AI reacts to its own outputs, creating artificial trends.

- Concentration risk: When many firms use similar systems, creating a single point of failure.

If multiple institutions rely on similar AI tools, a single flaw can ripple across markets. This is how local errors become systemic events.

The Bank of England prioritises financial stability as AI introduces risks that traditional stress tests often miss, where small flaws can trigger wider market shocks. (Image Source: WallStreetMojo)

The Regulatory Gap No One Can Ignore

UK regulators currently rely on a principles-based approach. Instead of strict AI-specific laws, firms are expected to follow existing rules around fairness, accountability, and transparency. That approach worked when automation was limited. It struggles when AI systems become self-learning, opaque, and vendor-supplied (making them difficult to audit).

The question regulators now face is simple but uncomfortable: Can principles alone protect consumers in an AI-driven financial system?

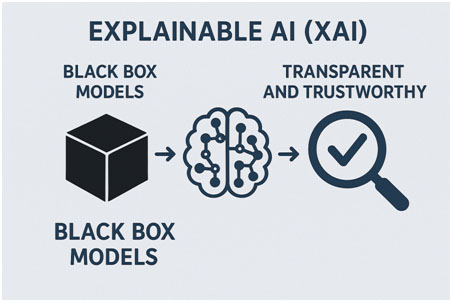

The Problem of Black Box Decisions

One of the biggest challenges is explainability. Many AI models cannot clearly explain how they reached a decision. That creates tension with financial law. Consumers have the right to understand why they were denied credit. Regulators must be able to audit decisions after harm occurs. When AI cannot explain itself, accountability breaks down.
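To make the explainability problem concrete, here is a minimal Python sketch of a model that can explain itself: a hypothetical linear scorecard that reports which factors pulled an applicant's score down. The feature names, weights, base score, and approval threshold are all invented for illustration; they do not come from any real lender or from UK rules.

```python
# Hypothetical linear credit scorecard. Every feature name, weight, and
# threshold below is an illustrative assumption, not a real lender's model.
WEIGHTS = {
    "income_to_debt_ratio": 40.0,
    "years_at_address": 5.0,
    "missed_payments_last_year": -60.0,
}
BASE_SCORE = 20.0
APPROVAL_THRESHOLD = 100.0


def score_with_reasons(applicant: dict[str, float], top_n: int = 2):
    """Score an applicant and list the factors that lowered the score.

    Returns (approved, score, reasons), where `reasons` names up to `top_n`
    features with negative contributions, most damaging first.
    """
    # In a linear model each feature's contribution is simply weight * value.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BASE_SCORE + sum(contributions.values())
    approved = score >= APPROVAL_THRESHOLD
    # Most negative contributions first; only negative ones count as reasons.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    reasons = [name for name, c in ranked if c < 0][:top_n]
    return approved, score, reasons


print(score_with_reasons(
    {"income_to_debt_ratio": 1.5, "years_at_address": 2, "missed_payments_last_year": 1}
))
```

For the sample applicant this prints `(False, 30.0, ['missed_payments_last_year'])`: the application is declined and the missed payment is the stated reason. Complex models such as deep neural networks do not break down into per-feature contributions this neatly, which is why their decisions are much harder to explain.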

Right now, it is not always clear who is responsible when an AI-driven decision causes harm:

- The bank?

- The software provider?

- The data supplier?

A key challenge is explainability: when AI can’t show how decisions are made, accountability becomes unclear. (Image Source: Medium)

Bias: When Automation Amplifies Inequality

AI systems learn from historical data. If that data reflects bias, the system absorbs it. This matters deeply in finance. Small biases in credit scoring can exclude entire groups from access to loans. Insurance pricing models can quietly penalise certain postcodes or demographics. Fraud detection systems can unfairly target specific behaviours. These issues often surface only after damage occurs. By then, thousands of decisions may already have been made.
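A basic monitoring step is to compare outcomes across groups. The Python sketch below uses invented applicant records and an assumed score cut-off to show how a single threshold, applied identically to everyone, can still produce very different approval rates when scores differ systematically between groups. The group labels, scores, threshold, and the 0.8 ratio used as a review trigger are all illustrative assumptions.

```python
from collections import defaultdict

# Invented applicant records: (group label, model score). Illustrative only.
APPLICANTS = [
    ("A", 720), ("A", 680), ("A", 650), ("A", 700), ("A", 610),
    ("B", 640), ("B", 600), ("B", 690), ("B", 580), ("B", 620),
]
THRESHOLD = 640     # assumed approval cut-off, applied identically to everyone
REVIEW_RATIO = 0.8  # assumed trigger for a fairness review


def approval_rates(applicants, threshold):
    """Return the share of applicants in each group who meet the threshold."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, score in applicants:
        total[group] += 1
        if score >= threshold:
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}


rates = approval_rates(APPLICANTS, THRESHOLD)
# Ratio of the lowest group approval rate to the highest.
ratio = min(rates.values()) / max(rates.values())
flagged = ratio < REVIEW_RATIO
print(rates, round(ratio, 2), flagged)
```

Here group A is approved 80% of the time and group B 40%, so the ratio is 0.5 and the check flags the model for review, even though the rule never mentions group membership. A check like this only detects a gap; deciding whether the gap reflects unfair bias needs further investigation.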

Why Speed is the Real Risk Multiplier

Traditional financial mistakes unfold slowly. AI mistakes unfold instantly. A flawed lending model can reject thousands of applications in hours. A trading algorithm can amplify volatility in seconds. A customer service AI can give harmful financial advice at scale. Speed turns minor errors into major events. Regulators understand this and they are uneasy.

Innovation Versus Protection: the Core Dilemma

The UK wants to remain a global fintech hub. Over-regulation risks driving innovation elsewhere. Under-regulation risks consumer harm and financial instability. This tension defines the current debate. Industry leaders argue flexibility is essential; regulators argue safeguards must exist before harm occurs. Both sides agree on one thing: AI is now too important to ignore.

How UK Firms Are Responding

Many financial institutions are already adjusting their approach. Some are introducing internal AI governance boards. Others are slowing deployment until models pass stricter tests. Large banks increasingly demand transparency from AI vendors.

But smaller firms face tougher choices. Startups rely on speed to compete. Compliance adds cost. Delays reduce advantage. This creates uneven risk across the sector.

The Global Context Makes This Urgent

The UK is not alone in this debate. The European Union is moving towards stricter AI rules. The United States is tightening oversight through regulators rather than legislation. Asian markets are experimenting with hybrid models. Financial systems are global. If AI regulation diverges too far, risk migrates across borders. UK regulators know coordination matters.

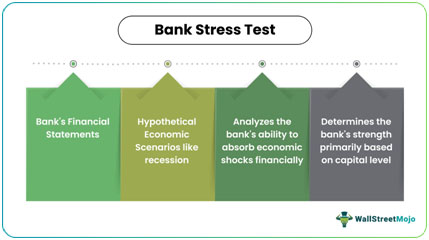

Why Stress Tests Might Become a Game-Changer

Bank stress tests, which assess whether a bank can withstand major economic shocks, have long been familiar to regulators and markets. They examine credit losses, liquidity problems, and extreme events such as sudden rises in interest rates.

UK lawmakers now propose another kind of test: dedicated stress tests targeting AI systems themselves. Their argument is that regulators need to understand how automated decision engines perform under pressure before an actual crisis hits.

Consider a scenario in which many banks use the same automated credit-scoring system. A sudden economic shock could cause every model to tighten credit at the same time, cutting off loans to a large pool of borrowers. That would deepen a recession rather than ease it.

UK lawmakers propose AI stress tests to see how automated credit systems behave under pressure, preventing simultaneous failures that could worsen a downturn. (Image Source: WallStreetMojo)
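The credit-tightening scenario can be illustrated with a toy stress test in Python. It is a sketch under stated assumptions, not a description of how regulators would run an AI stress test: each hypothetical bank scores borrowers as a weighted sum of two made-up features, a shock lowers both features, and the test measures the share of borrowers rejected by every bank, who therefore have nowhere else to turn.

```python
# Toy AI stress test. Banks, weights, borrowers, and threshold are all
# hypothetical. Each bank scores a borrower as a weighted sum of two
# features (values 0-100), with weights summing to 10, so scores run 0-1000.
IDENTICAL_BANKS = [(5, 5), (5, 5), (5, 5)]  # every bank runs the same model
DIVERSE_BANKS = [(8, 2), (5, 5), (2, 8)]    # models weigh features differently
THRESHOLD = 600                             # approve if score >= threshold

BORROWERS = [(90, 80), (80, 50), (50, 85), (62, 62), (75, 70)]


def shut_out_share(banks, borrowers, shock):
    """Fraction of borrowers rejected by every bank after both features fall by `shock`."""
    shut_out = 0
    for x, y in borrowers:
        sx, sy = x - shock, y - shock
        if all(wx * sx + wy * sy < THRESHOLD for wx, wy in banks):
            shut_out += 1
    return shut_out / len(borrowers)


for shock in (0, 10):
    print(shock,
          shut_out_share(IDENTICAL_BANKS, BORROWERS, shock),
          shut_out_share(DIVERSE_BANKS, BORROWERS, shock))
```

Running it prints `0 0.0 0.0` and then `10 0.6 0.2`: before the shock no borrower is shut out under either setup, but afterwards 60% are rejected everywhere when all banks share one model, against 20% when the models differ. Because the diverse set here includes the shared model, diversity can only lower the shut-out share in this toy setup; real-world effects would depend on how models actually differ.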

Beyond the Bottom Line: Who Owns the AI?

One of the biggest regulatory issues is responsibility for automated decisions. A human decision-maker can be held accountable, but when an automated system makes a decision that harms a customer or causes a market malfunction, responsibility becomes a grey area.

This tension is clear in the recent debate. The FCA and the Bank of England take the view that existing rules, including consumer protection law and principles-based regulation, are sufficient. The Treasury Committee, however, has suggested that this may not be enough. It wants explicit rules on who is liable and how information flows when automated decision systems are deployed.

Why Regulators Fear Herding and Market Volatility

Automated models often train on similar data sources and react to signals that humans can barely perceive. This means many systems may behave in much the same way under stress, even when they were built independently.

Lawmakers and analysts argue that this uniformity may amplify market moves rather than smooth them. When programs effectively imitate one another’s reactions, this herding can send shockwaves through markets, pushing prices sharply up or down in a short time.
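Herding can be illustrated with a toy cascade model in Python. The trigger levels, the initial shock, and the fixed price impact per seller are all assumptions chosen for illustration, not an established market model: each algorithm sells once the cumulative price drop reaches its trigger, and every sale pushes the price down further.

```python
# Toy herding model: all parameters are illustrative assumptions.
IMPACT_PER_SELLER = 0.5  # extra price drop (%) caused by each algorithm that sells


def cascade(triggers, initial_drop):
    """Return the final price drop (%) after algorithms sell in rounds.

    An algorithm sells once the cumulative drop reaches its trigger level;
    each sale deepens the drop by IMPACT_PER_SELLER.
    """
    drop = initial_drop
    sold = [False] * len(triggers)
    while True:
        new_sellers = [
            i for i, trigger in enumerate(triggers)
            if not sold[i] and drop >= trigger
        ]
        if not new_sellers:
            return drop
        for i in new_sellers:
            sold[i] = True
        drop += IMPACT_PER_SELLER * len(new_sellers)


HERDED_TRIGGERS = [3.0] * 10                    # every algorithm reacts at a 3% drop
VARIED_TRIGGERS = [3.0 + i for i in range(10)]  # triggers spread from 3% to 12%

print(cascade(HERDED_TRIGGERS, 3.0), cascade(VARIED_TRIGGERS, 3.0))
```

With identical 3% triggers, a 3% shock sets off all ten sellers at once and the drop deepens to 8%. With triggers spread from 3% to 12%, only one algorithm sells and the drop stops at 3.5%.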

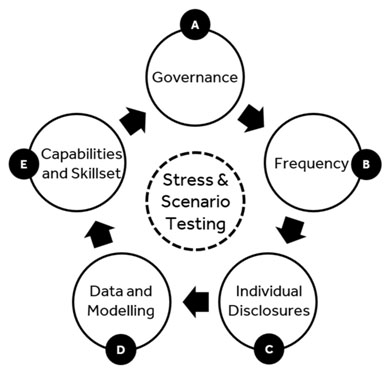

A Stronger Regulatory Framework: What Experts Are Calling For

Several major proposals have emerged from the debate:

- AI-Specific Stress Testing: Lawmakers want tests that examine how automated systems behave under extreme but realistic conditions.

- Guidance on Consumer Protection: By the end of 2026, the FCA is expected to publish guidance on how existing consumer protection law applies to automated decisions.

- Tougher Accountability Rules: The Treasury Committee wants regulators to set out how senior managers should ensure automated systems are secure and fair.

- Third-Party Oversight: Technology providers may be designated “critical third parties,” giving regulators more power to oversee them and reduce operational risk.

Key debate outcomes: AI stress tests, FCA consumer guidance, stricter accountability, and oversight of major tech providers. (Image Source: MDPI)

Industry Pushback: Why Regulators Are Moving Cautiously

Some regulators and finance leaders prefer to avoid excessive new rules. The FCA’s stated position is not to impose heavy, prescriptive regulation, because the technology moves so fast. Instead, it favours a principles-based approach that focuses on outcomes rather than specific technical requirements. The logic is simple: overly detailed rules may quickly become outdated.

Real-Life Consequences: What Consumers Should Watch For

Recent disclosures indicate that between two-thirds and three-quarters (66%–75%) of UK financial institutions use automated decision systems in core business processes, from lending to claims handling.

Potential consequences under consideration include:

- Opaque automated decisions that customers cannot easily challenge.

- Vulnerable groups being shut out of access to finance.

- Unchecked financial guidance from large language models, leaving consumers without protection.

Global Perspective: How the UK Fits With Other Jurisdictions

The UK discussion mirrors the global debate. European lawmakers want specific AI rules for finance, while US regulators such as the SEC are pushing for more transparency and accountability from automated systems. For the UK, new rules have to be balanced with innovation to retain its position as a leading financial centre.

The UK debate mirrors global trends: Europe seeks strict AI rules, the US demands transparency, and the UK must balance regulation with innovation to stay a top financial hub. (Image Source: Medium)

The Future of AI Regulation in Finance: Possible Scenarios

With the debate still underway, three paths look most likely:

- Scenario 1: Principles First: Regulators keep relying on existing rules, supplemented by additional guidance and stress testing. This supports innovation but may leave gaps.

- Scenario 2: Specific Rules: Lawmakers draft explicit, enforceable rules on automated decision-making. This provides clarity but less flexibility.

- Scenario 3: Hybrid Model: A combination of principles, stress tests, and specific protection for high-risk applications like credit, pricing, and trading.

Where This Leaves Consumers Now

For the average consumer, AI is invisible. Trust depends on outcomes. If AI is fair, confidence follows. If it fails silently, trust is easily lost. Regulators are trying to intervene before that trust is broken.

The Future of AI Regulation in the UK

This is a decisive moment. The UK can:

- Enhance AI-specific financial supervision.

- Hold firms more accountable.

- Help set common international standards.

Balancing Consumer Trust and Market Stability

Ultimately, this debate is about trust. Can financial markets use powerful automation without losing it? For consumers, that means transparent decision-making, accessibility, and accountability. For markets, it means resilience to shocks and avoiding automated herd behaviour.

Conclusion: Regulation That Keeps Pace

The UK debate over AI in finance is already underway. Whether through stress tests, new accountability models, or specific rules, the direction is clear: regulation needs to develop alongside technology, not lag behind it.

Frequently Asked Questions (FAQs)

- What is AI regulation in the financial services industry?

Ans: AI regulation in financial services refers to the rules, guidelines, and oversight mechanisms that ensure artificial intelligence systems used in banking, insurance, and finance operate safely, fairly, transparently, and in line with consumer protection laws.

- What issue does the Bank of England have with AI adoption?

Ans: The Bank of England is concerned that widespread use of similar AI systems across financial institutions could introduce systemic risks, potentially undermining financial stability during periods of stress.

- Can AI cause financial harm?

Ans: Yes. Poorly designed or biased AI systems can unfairly deny credit, misprice financial risk, contribute to market instability, or expose individuals and institutions to significant financial losses.

- Is AI currently regulated in the UK?

Ans: Partially. Existing financial regulations apply to AI use, but there is no single, comprehensive AI-specific law that addresses all applications of artificial intelligence in financial services.

- Will increased AI regulation reduce innovation?

Ans: Regulators aim to balance innovation with protection. The goal is not to slow technological progress, but to ensure AI is deployed responsibly without exposing consumers or markets to unnecessary risk.

- What are financial AI stress tests?

Ans: Financial AI stress tests simulate extreme market conditions to assess how automated decision systems behave under pressure and whether they could fail or trigger broader market disruptions.

- Why can’t existing financial rules fully address automated decision systems?

Ans: Current frameworks are broad and principles-based. They do not always account for the speed, opacity, and systemic impact of modern AI systems operating at scale.

- What does accountability mean in the context of financial AI?

Ans: Accountability refers to clear responsibility within financial organisations to ensure automated systems are fair, explainable, compliant with regulations, and subject to effective oversight.

- How can automated systems worsen market instability?

Ans: If multiple AI systems react in the same way to market stress, such as selling assets simultaneously, they can amplify volatility instead of stabilising markets.